Deployment of RHOSP 16.1 with Virtualized Controllers

In this blog we demonstrate how to deploy overcloud controllers as virtual machines in a Red Hat Virtualization cluster. It shares insights and tips that should help you succeed in deploying Red Hat OpenStack Platform (RHOSP) 16.1 with virtualized controllers. We are building a private cloud as a proof of concept (PoC) and documenting the best practices for RHOSP 16.1 deployment that we gained through this experience, to support deployment and engineering teams. To my knowledge, this blog is perhaps the only detailed source in the public domain to date on deploying RHOSP 16.1 with virtualized controllers (vControllers). Please feel free to reach out to us through the comment section if you need configuration support or any clarification.

This blog is loaded with configuration lines and pictures. It is divided into three parts. This first part covers the Red Hat® Enterprise Virtualization deployment. In the second part I continue with the OpenStack undercloud and overcloud deployment, and the third part covers platform verification and VNF onboarding.

Recently I was working on AMDOCS PCRF for one of our customers in North America. They were running PCRF workloads on the RHEV platform. The operator was planning to move these workloads onto OpenStack compute nodes, because the new PCRF release mandates OpenStack as the underlying platform.

Wondering whether OpenStack competes with the RHEV platform? Wait a moment: here is a scenario where RHEV complements OpenStack! Yes, they work in tandem. Let’s explore this.

Our deployment workflow is depicted in the picture below.

We are keeping the setup very simple. It has two Quanta ACT servers and one HP DL380 server for the compute node. One of the Quanta servers runs KVM, which hosts two virtual machines: the DNS server and RHEVM, the manager for RHEV. You could also deploy ESXi to host these VMs. On the second server we install the RHEV host (RHVH) operating system on bare metal. This RHVH runs the three virtualized controllers, the undercloud director VM, and an Ansible VM. In a production environment, consider running the controller VMs on additional RHEV hosts (hypervisors) for better redundancy. Red Hat Virtualization gives you a stable foundation for your virtualized OpenStack control plane.

Some of the benefits we achieve by virtualizing the control plane are listed below:

- With multiple hypervisor nodes, RHV supports VM live migration, which can be used to move the OSP control plane VMs to a different hypervisor while their current hypervisor is under maintenance.

- Dynamic resource allocation to the virtualized controllers: scale up and scale down as required, including CPU and memory hot-add and hot-remove to prevent downtime and allow for increased capacity as the platform grows.

- Additional VMs such as the VNF manager, NMS, and EMS can share the same hypervisor, minimizing the server footprint in the data center and making efficient use of the physical nodes.

We run the three controllers as VMs instead of on bare metal, so they can coexist with other workloads in the data center ecosystem. RHEV’s VM live migration and VM high availability features ease maintenance of these nodes.

Previously, virtualized OpenStack labs relied on VirtualBMC (vbmc) and the pxe_ssh driver, which I believe is not a great solution and is not suitable for carrier-grade deployments. A vbmc/pxe_ssh-based virtualized OpenStack can still serve as a temporary setup for migrations and upgrades between OpenStack versions.

On physical nodes, baseboard management controllers (BMCs) are the physical chips that implement IPMI. BMC implementations include Dell DRAC, HP Integrated Lights-Out (iLO), and IBM Remote Supervisor Adapter. Bare metal provisioning relies on key technologies such as PXE, NBP, IPMI, DHCP, TFTP, and iSCSI.

With RHEV, the staging-ovirt driver allows OpenStack to use oVirt/RHV virtual machines as overcloud nodes. In effect, the power-management calls that the undercloud would normally send to a BMC over IPMI are placed with the RHEV Manager instead.

Staging-ovirt manages VMs hosted in RHEV in the same way that Ironic's ipmi, redfish, ilo, and idrac drivers manage physical nodes through BMCs such as iRMC, iDRAC, or iLO. For RHV, it does this by talking directly to the RHV Manager to trigger power management actions on the VMs.
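To make this concrete, here is a minimal sketch of how the controller VMs could be described to the undercloud in an `instackenv.json`-style node list that uses the staging-ovirt driver. The field names (`pm_type`, `pm_addr`, `pm_user`, `pm_password`, `pm_vm_name`, `mac`) are based on our reading of Red Hat's virtualized control plane documentation, so verify them for your release. The VM names, MAC addresses, and password are hypothetical placeholders. Only the manager FQDN comes from our lab.

```python
import json

# Hypothetical controller VMs: names and MAC addresses are placeholders.
CONTROLLERS = [
    ("vcontroller1", "52:54:00:00:00:01"),
    ("vcontroller2", "52:54:00:00:00:02"),
    ("vcontroller3", "52:54:00:00:00:03"),
]


def staging_ovirt_node(vm_name, mac, engine, user, password):
    """Describe one RHV VM so Ironic's staging-ovirt driver can manage its power.

    Field names are assumed from Red Hat's virtualized control plane docs;
    verify them for your RHOSP release.
    """
    return {
        "name": vm_name,
        "pm_type": "staging-ovirt",  # power actions go to the RHV Manager, not a BMC
        "pm_addr": engine,           # RHV Manager FQDN or IP
        "pm_user": user,             # RHV Manager user, e.g. admin@internal
        "pm_password": password,
        "pm_vm_name": vm_name,       # VM name exactly as it appears in RHV
        "mac": [mac],                # MAC of the VM's control plane (PXE) NIC
        "capabilities": "profile:control",
    }


def build_instackenv(controllers, engine, user, password):
    """Return the node list in the shape of an instackenv.json file."""
    return {"nodes": [staging_ovirt_node(name, mac, engine, user, password)
                      for name, mac in controllers]}


if __name__ == "__main__":
    env = build_instackenv(CONTROLLERS, "rhevm.itchundredlab.com",
                           "admin@internal", "CHANGE_ME")
    print(json.dumps(env, indent=2))
```

The printed JSON would typically be saved as `instackenv.json` on the undercloud and imported with `openstack overcloud node import`. That step belongs to part 2.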

Ironic components and their interaction with a bare metal node or a VM created on the RHEV host

Components of RHEV

The RHEV platform consists of the following components:

• Red Hat Enterprise Virtualization Manager (RHEV-M):

This is a centralized management console with a graphical, web-based interface that manages our complete virtualization infrastructure (hosts, storage, networks, virtual machines, and more) running on the physical hardware. We host it as a virtual machine on KVM.

• Red Hat Enterprise Virtualization Hypervisor (RHEV-H): RHEV hosts can be either full Red Hat Enterprise Linux systems with KVM enabled (also called Red Hat Enterprise Linux virtualization hosts) or purpose-built RHEV-H hosts. RHEV-H is a minimal, image-based bare metal hypervisor with a reduced security footprint.

• Virtual Desktop and Server Management Daemon (VDSM): VDSM runs as a service on the RHEV hypervisor host and handles communication between RHEV-M and the host. It uses libvirt and QEMU to manage and monitor virtual machines and other resources such as hosts, networking, and storage.

• Storage domains: This is used to store virtual machine images, snapshots, templates, and ISO disk images in order to spin up virtual machines.

• Logical networking: This defines virtual networking for guest data, storage access, and management, as well as the display network used to access virtual machine consoles.

• Database platform: This is used to store information about the state of the virtualization environment.

• SPICE: This is an open remote computing protocol that provides client access to remote virtual machine display and devices (keyboard, mouse, and audio). VNC can also be used to get remote console access.

• API support: RHEV supports a REST API, a Python SDK, and a Java SDK, which let users fully automate management of the virtualization infrastructure from their own programs or custom scripts, outside the manager's standard web interface. A command-line shell utility can also be used to interact with RHEV-M outside the web interface.

• Admin/user portal: The administration portal is used for initial setup, configuration, and management. There is also a power user portal, a trimmed-down version of the administration portal tailored for end users' self-provisioning of virtual machines.
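As an illustration of the REST API, and of the kind of power action staging-ovirt asks the manager to perform, the sketch below builds an HTTP request to start a VM but does not send it. The `/ovirt-engine/api/vms/{id}/start` path and the `<action/>` body follow the oVirt REST API. HTTP basic authentication is assumed for brevity, and `VM_ID` and the password are placeholders.

```python
import base64
import urllib.request


def build_vm_action(engine_fqdn, vm_id, action, user, password):
    """Build, but do not send, a REST request asking RHV-M to run a VM action.

    `action` is a VM action name such as "start", "stop" or "shutdown".
    HTTP basic authentication is assumed here for brevity.
    """
    url = f"https://{engine_fqdn}/ovirt-engine/api/vms/{vm_id}/{action}"
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url,
        data=b"<action/>",  # empty action element: no extra parameters
        method="POST",
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/xml",
            "Accept": "application/xml",
        },
    )


req = build_vm_action("rhevm.itchundredlab.com", "VM_ID", "start",
                      "admin@internal", "CHANGE_ME")
print(req.get_method(), req.full_url)
# POST https://rhevm.itchundredlab.com/ovirt-engine/api/vms/VM_ID/start
```

To actually send the request with `urllib.request.urlopen(req)`, the client must trust the engine's internal CA certificate. `VM_ID` stands for the VM's UUID as listed under `/ovirt-engine/api/vms`. In practice the Python SDK wraps calls like this one.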

RHOSP consists of:

- Undercloud

- Overcloud

The primary objectives of the undercloud are:

- Discover the bare-metal servers on which OpenStack Platform will be deployed

- Serve as the deployment manager for the software to be deployed on these nodes

- Define complex network topology and configuration for the deployment

- Roll out software updates and configurations to the deployed nodes

- Reconfigure an existing deployed environment

- Enable high availability support for the OpenStack nodes

About the overcloud:

- The overcloud is the resulting Red Hat OpenStack Platform environment created using the undercloud.

- It includes the different node roles that you define based on the OpenStack Platform environment you aim to create.

Here is our RHOSP deployment flow:

- Bring up the physical hosts and perform network configuration

- Deploy KVM, the RHEV manager, and the RHEV host, and create the VMs

- Install the DNS server and RHEVM VMs on KVM

- Install the undercloud director virtual machine on the RHEV host

- Configure the hostname and subscribe the director to RHN

- Install python3-tripleoclient

- Configure undercloud.conf

- Install the undercloud

- Obtain and upload images for overcloud introspection and deployment

- Create virtual machines for the overcloud nodes (compute and controller)

- Configure Virtual Bare Metal Controller

- Import and register the overcloud nodes

- Introspect the overcloud nodes

- Tag the overcloud nodes to profiles

IP Address Plan

| Subnet | Network type | VLAN ID |
|---|---|---|
| 10.205.6.0/24 | Internal API | 206 |
| 10.205.7.0/24 | Tenant Network | 207 |
| 10.205.8.0/24 | External | 208 |
| 10.200.11.0 | Management, iLO, iDRAC | 1 |
| 10.205.5.0 | Control Plane | 222 |
| 10.205.10.0/24 | Storage Management | 905 |
| 10.205.9.0/24 | Storage | 209 |

| VM/Server/Device | IP Address | Comments |
|---|---|---|
| KVM (Management) | 10.200.11.10 | |
| RHEVH (IPMI) | 10.200.10.31 | |
| Compute1 (iDRAC) | 10.200.10.7 | |
| vController1 (CTLPLANE) | 10.200.5.37 | Control Plane |
| vController2 (CTLPLANE) | 10.200.5.36 | Control Plane |
| vController3 (CTLPLANE) | 10.200.5.27 | Control Plane |
| Compute (CTLPLANE) | 10.200.5.34 | Control Plane |
| Undercloud (Management) | 10.205.21.23 | |
| RHEVM | 10.200.11.30 | |
| RHEVH | 10.205.21.5 | |
| Router Manage | 10.200.11.6 | |
| DNS Server | 10.200.11.172 | |
| NTP Server | 10.200.11.251 | |
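A plan like this is easy to get subtly wrong. Two common slips are a VLAN ID reused by two subnets and a host address outside its subnet. The sketch below checks both. The subnet/VLAN pairs are taken from the router configlet later in this post. The /24 mask for the control plane is an assumption, because the table omits it. Under that assumption, vController1's listed address 10.200.5.37 falls outside 10.205.5.0/24, which is worth a second look.

```python
import ipaddress
from collections import defaultdict

# (network, subnet, VLAN) as configured on our router; adjust to your own plan.
PLAN = [
    ("Internal API", "10.205.6.0/24", 206),
    ("Tenant", "10.205.7.0/24", 207),
    ("External", "10.205.8.0/24", 208),
    ("Storage", "10.205.9.0/24", 209),
]


def vlan_conflicts(plan):
    """Return {vlan: [network names]} for VLAN IDs used by more than one network."""
    by_vlan = defaultdict(list)
    for name, _subnet, vlan in plan:
        by_vlan[vlan].append(name)
    return {vlan: names for vlan, names in by_vlan.items() if len(names) > 1}


def hosts_outside(subnet, hosts):
    """Return the sorted names of hosts whose address lies outside `subnet`."""
    net = ipaddress.ip_network(subnet)
    return sorted(name for name, ip in hosts.items()
                  if ipaddress.ip_address(ip) not in net)


print(vlan_conflicts(PLAN))  # {}
# A hypothetical extra network reusing VLAN 207 is reported:
print(vlan_conflicts(PLAN + [("Hypothetical", "10.205.99.0/24", 207)]))
# {207: ['Tenant', 'Hypothetical']}
# Control plane addresses checked against an assumed /24 mask:
print(hosts_outside("10.205.5.0/24", {"vController1": "10.200.5.37",
                                      "undercloud21": "10.205.5.7"}))
# ['vController1']
```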

We use two interfaces on the physical servers. One interface is dedicated to control plane traffic between the controller, compute, and undercloud nodes. The other (tagged) interface carries the Internal API, Tenant, External, Storage, and Storage Management networks.

Our hardware: servers (Quanta ACT and Dell) and a Brocade LAN switch

In our setup we have two ACT servers. One runs KVM, on which we host the RHEVM and DNS VMs, and the other runs the RHEV host. The compute node runs on a separate bare metal server.

Before you start the installation, ensure that you have created the correct VLANs, configured routing and switching, properly connected the Ethernet cables to the switch ports, and verified layer 2 and layer 3 connectivity. I enabled LLDP on the switch so that it exchanges neighbor information with the OpenStack nodes. The discovered neighbors can then be seen on both the router and the nodes using the respective commands mentioned in this blog.

Let us start the deployment process.

RHEVM INSTALLING THE RED HAT VIRTUALIZATION MANAGER

Register your system with the Content Delivery Network, entering your Customer Portal user name and password when prompted. Then find the Red Hat Virtualization Manager subscription and attach its pool:

```
[root@rhevm ~]# subscription-manager register
Registering to: subscription.rhsm.redhat.com:443/subscription
Username: ranjeet_badhe_redhat
Password:xxxxxxxxx
The system has been registered with ID: 5fe1b527-3586-4749-8f39-150259fe09ab
The registered system name is: rhevm
[root@rhevm ~]# subscription-manager list --available --all --matches="Red Hat Virtualization manager"
+-------------------------------------------+
    Available Subscriptions
+-------------------------------------------+
Subscription Name:   Red Hat Virtualization Manager
Provides:            Red Hat Beta
                     Red Hat Enterprise Linux Server
                     Red Hat CodeReady Linux Builder for x86_64
                     Red Hat Enterprise Linux for x86_64
                     Red Hat Virtualization Manager
                     Red Hat Ansible Engine
                     Red Hat Virtualization Host - Extended Update Support
                     Red Hat Virtualization - Extended Update Support
SKU:                 RV00045
Contract:
Pool ID:             8a851567acf294b0171qwen456420xx
Provides Management: No
Available:           9
Suggested:           1
Service Type:        L1-L3
                     Red Hat Virtualization - Extended Update Support
                     Red Hat Virtualization
                     Red Hat Enterprise Linux Advanced Virtualization Beta
                     Red Hat Beta
                     Red Hat Virtualization Manager
                     Red Hat EUCJP Support (for RHEL Server) - Extended Update Support
                     Red Hat Enterprise Linux Load Balancer (for RHEL Server) - Extended Update Support
                     dotNET on RHEL (for RHEL Server)
                     Red Hat CodeReady Linux Builder for x86_64 - Extended Update
(output truncated for brevity)
[root@rhevm ~]# subscription-manager attach --pool=8a851567acf294b0171qwen456420xx
Successfully attached a subscription for: Red Hat Virtualization, Standard Support (4 Sockets, NFR,)
```

Enabling the Repositories
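If you script the registration, the Pool ID for `subscription-manager attach --pool=...` can be pulled out of the `list --available` output. Here is a minimal sketch that parses the human-readable "Key: value" format shown in the transcript. That format is meant for people, so treat this as a convenience that may need adjusting between versions, not a stable interface.

```python
def pool_ids(list_output, name_fragment):
    """Return Pool IDs of subscriptions whose name contains `name_fragment`.

    Parses the human-readable "Key: value" blocks printed by
    `subscription-manager list --available`; continuation lines are skipped.
    """
    ids, current_name = [], None
    for line in list_output.splitlines():
        key, sep, value = line.partition(":")
        if not sep:
            continue  # separator or continuation line such as a product name
        key, value = key.strip(), value.strip()
        if key == "Subscription Name":
            current_name = value
        elif key == "Pool ID" and current_name and name_fragment in current_name:
            ids.append(value)
    return ids


# Abridged from our `subscription-manager list` output.
SAMPLE = """\
Subscription Name:   Red Hat Virtualization Manager
Provides:            Red Hat Beta
                     Red Hat Virtualization Manager
SKU:                 RV00045
Pool ID:             8a851567acf294b0171qwen456420xx
"""

print(pool_ids(SAMPLE, "Virtualization Manager"))
# ['8a851567acf294b0171qwen456420xx']
```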

```
[root@rhevm ~]# subscription-manager repos --disable='*' \
  --enable=rhel-8-for-x86_64-baseos-rpms \
  --enable=rhel-8-for-x86_64-appstream-rpms \
  --enable=rhv-4.4-manager-for-rhel-8-x86_64-rpms \
  --enable=fast-datapath-for-rhel-8-x86_64-rpms \
  --enable=jb-eap-7.4-for-rhel-8-x86_64-rpms \
  --enable=openstack-16.2-cinderlib-for-rhel-8-x86_64-rpms \
  --enable=rhceph-4-tools-for-rhel-8-x86_64-rpms
[root@rhevm ~]# dnf upgrade --nobest
```

Issue the following commands to enable the required modules, synchronize and upgrade the packages, install the manager, and initialize the database:

```
dnf module -y enable pki-deps
dnf module -y enable postgresql:12
dnf distro-sync --nobest
dnf upgrade --nobest
dnf install rhvm
postgresql-setup --initdb
engine-setup
```

Run engine-setup and answer the prompts. We accepted the defaults shown in square brackets, except where an answer is shown:

```
[root@rhevm ~]# engine-setup
[ INFO ] Stage: Initializing
[ INFO ] Stage: Environment setup
         Configuration files: /etc/ovirt-engine-setup.conf.d/10-packaging-wsp.conf, /etc/ovirt-engine-setup.conf.d/10-packaging.conf
         Log file: /var/log/ovirt-engine/setup/ovirt-engine-setup-20220204171803-wh3o7f.log
         Version: otopi-1.9.6 (otopi-1.9.6-2.el8ev)
[ INFO ] Stage: Environment packages setup
[ INFO ] Stage: Programs detection
[ INFO ] Stage: Environment setup (late)
[ INFO ] Stage: Environment customization

         --== PRODUCT OPTIONS ==--

         Configure Cinderlib integration (Currently in tech preview) (Yes, No) [No]:
         Configure Engine on this host (Yes, No) [Yes]:
         Configuring ovirt-provider-ovn also sets the Default cluster's default network provider to ovirt-provider-ovn.
         Non-Default clusters may be configured with an OVN after installation.
         Configure ovirt-provider-ovn (Yes, No) [Yes]:
         Configure WebSocket Proxy on this host (Yes, No) [Yes]:

         * Please note * : Data Warehouse is required for the engine. If you choose to not configure it on this host, you have to configure it on a remote host, and then configure the engine on this host so that it can access the database of the remote Data Warehouse host.
         Configure Data Warehouse on this host (Yes, No) [Yes]:
         Configure VM Console Proxy on this host (Yes, No) [Yes]:
         Configure Grafana on this host (Yes, No) [Yes]:

         --== PACKAGES ==--

         --== DATABASE CONFIGURATION ==--

         Where is the DWH database located? (Local, Remote) [Local]:
         Setup can configure the local postgresql server automatically for the DWH to run. This may conflict with existing applications.
         Would you like Setup to automatically configure postgresql and create DWH database, or prefer to perform that manually? (Automatic, Manual) [Automatic]:
         Where is the Engine database located? (Local, Remote) [Local]:
         Setup can configure the local postgresql server automatically for the engine to run. This may conflict with existing applications.
         Would you like Setup to automatically configure postgresql and create Engine database, or prefer to perform that manually? (Automatic, Manual) [Automatic]:

         --== OVIRT ENGINE CONFIGURATION ==--

         Engine admin password:
         Confirm engine admin password:
         Application mode (Virt, Gluster, Both) [Both]:
         Use default credentials (admin@internal) for ovirt-provider-ovn (Yes, No) [Yes]:

         --== STORAGE CONFIGURATION ==--

         Default SAN wipe after delete (Yes, No) [No]:

         --== PKI CONFIGURATION ==--

         Organization name for certificate [itchundredlab.com]:

         --== APACHE CONFIGURATION ==--

         Setup can configure the default page of the web server to present the application home page. This may conflict with existing applications.
         Do you wish to set the application as the default page of the web server? (Yes, No) [Yes]:
         Setup can configure apache to use SSL using a certificate issued from the internal CA.
         Do you wish Setup to configure that, or prefer to perform that manually? (Automatic, Manual) [Automatic]:

         --== SYSTEM CONFIGURATION ==--

         --== MISC CONFIGURATION ==--

         Please choose Data Warehouse sampling scale:
         (1) Basic
         (2) Full
         (1, 2)[1]: 1

         --== END OF CONFIGURATION ==--

[ INFO ] Stage: Setup validation
```

Review your configuration and confirm:

```
         --== CONFIGURATION PREVIEW ==--

         Application mode                        : both
         Default SAN wipe after delete           : False
         Host FQDN                               : rhevm.itchundredlab.com
         Firewall manager                        : firewalld
         Update Firewall                         : True
         Set up Cinderlib integration            : False
         Configure local Engine database         : True
         Set application as default page         : True
         Configure Apache SSL                    : True
         Engine database host                    : localhost
         Engine database port                    : 5432
         Engine database secured connection      : False
         Engine database host name validation    : False
         Engine database name                    : engine
         Engine database user name               : engine
         Engine installation                     : True
         PKI organization                        : itchundredlab.com
         Set up ovirt-provider-ovn               : True
         Grafana integration                     : True
         Grafana database user name              : ovirt_engine_history_grafana
         Configure WebSocket Proxy               : True
         DWH installation                        : True
         DWH database host                       : localhost
         DWH database port                       : 5432
         DWH database secured connection         : False
         DWH database host name validation       : False
         DWH database name                       : ovirt_engine_history
         Configure local DWH database            : True
         Configure VMConsole Proxy               : True

         Please confirm installation settings (OK, Cancel) [OK]:
(output truncated for brevity)
         --== SUMMARY ==--

[ INFO ] Restarting httpd
         Please use the user 'admin@internal' and password specified in order to login
         Web access is enabled at:
             http://rhevm.itchundredlab.com:80/ovirt-engine
             https://rhevm.itchundredlab.com:443/ovirt-engine
         Internal CA 27:B7:88:14:41:4E:24:9E:F1:1C:C5:56:A7:E4:82:0F:6A:21:FB:7C
         SSH fingerprint: SHA256:B2EgK91FLcBIAYYel1Tvm/ivxHkEVGtOAtZMYMCwO1E
         Web access for grafana is enabled at:
             https://rhevm.itchundredlab.com/ovirt-engine-grafana/
         Please run the following command on the engine machine rhevm.itchundredlab.com, for SSO to work:
         systemctl restart ovirt-engine
```

RHVH INSTALLING THE RED HAT VIRTUALIZATION HOST

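Install the RHVH ISO on the bare metal server, much like a simple Linux installation. After the installation completes and the system boots, follow the steps below.

Register the host and find the Red Hat Virtualization Host subscription:

```
subscription-manager register
sudo subscription-manager list --available --all --matches="Red Hat Virtualization Host"
```

Attach the subscription (replace pool_id with the Pool ID from the previous command), then enable and start the VDSM daemon:

```
subscription-manager attach --pool=pool_id
systemctl enable vdsmd
systemctl start vdsmd
```

Enable and start the ovirt-imageio service, then check its status:

```
systemctl enable ovirt-imageio.service
systemctl start ovirt-imageio.service
systemctl status ovirt-imageio.service
```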

Accessing the Administration portal of RHEVM

Open a browser and enter the manager URL using its FQDN (in our case https://rhevm.itchundredlab.com/ovirt-engine). Make sure the client can resolve that FQDN: add an entry to the client's hosts file, and make sure the record also exists on your DNS server.

To start, we create a data center, a cluster, and a storage domain, and then add the host. Here is what each of these is.

Data centers:

A data center is a logical entity that defines the set of physical and logical resources used in a managed virtual environment. Think of it as a container that houses clusters of hosts, virtual machines, storage, and networks.

Clusters:

A cluster is a group of physical hosts that acts as a resource pool for a set of virtual machines created on it. You can create multiple clusters in a data center.

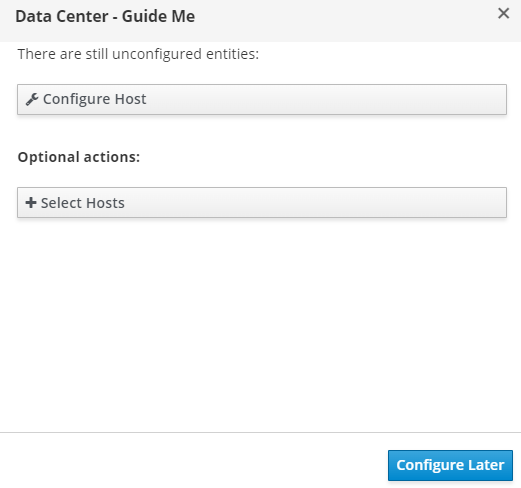

Click on Data center and follow the steps.

We added the host. Wait a while for the host addition process to complete.

Once the host is added, we add the storage. First we put the host into maintenance mode.

In this step we configure the storage part.

Create the mount point /mnt/localstorage:

```
[root@rhvh1 ~]# mkdir /mnt/localstorage
```

Check /etc/fstab for the existing entries, including the spare disk:

```
[root@rhvh1 ~]# cat /etc/fstab
# /etc/fstab
# Created by anaconda on Thu Jan 6 17:12:35 2022
# Accessible filesystems, by reference, are maintained under '/dev/disk/'.
# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info.
# After editing this file, run 'systemctl daemon-reload' to update systemd
# units generated from this file.
/dev/rhvh/rhvh-4.4.9.3-0.20211206.0+1 / xfs defaults,discard 0 0
UUID=108ee036-8947-4489-84bf-ca40c088fa0f /boot xfs defaults 0 0
/dev/mapper/rhvh-home /home xfs defaults,discard 0 0
/dev/mapper/rhvh-tmp /tmp xfs defaults,discard 0 0
/dev/mapper/rhvh-var /var xfs defaults,discard 0 0
/dev/mapper/rhvh-var_crash /var/crash xfs defaults,discard 0 0
/dev/mapper/rhvh-var_log /var/log xfs defaults,discard 0 0
/dev/mapper/rhvh-var_log_audit /var/log/audit xfs defaults,discard 0 0
/dev/mapper/rhvh-swap none swap defaults 0 0
#BELOW LINE WAS ADDED TO CREATE PARTITION IN SPARE HARD DISK
#/dev/mapper/INTEL_SSDSC2KB480G8_BTYF830500DJ480BGN /mnt/sdbnfs/ xfs defaults 0 0
10.205.21.5:/mnt/sdbnfs /mnt/sdbnfs nfs defaults 0 0
```

Mount the spare disk on the new folder and check the available space:

```
[root@rhvh1 ~]# mount /dev/mapper/INTEL_SSDSC2KB480G8_BTYF830500DJ480BGN /mnt/localstorage
[root@rhvh1 ~]# df -h
Filesystem Size Used Avail Use% Mounted on
devtmpfs 189G 0 189G 0% /dev
tmpfs 189G 16K 189G 1% /dev/shm
tmpfs 189G 19M 189G 1% /run
tmpfs 189G 0 189G 0% /sys/fs/cgroup
/dev/mapper/rhvh-rhvh--4.4.9.3--0.20211206.0+1 315G 156G 160G 50% /
/dev/mapper/rhvh-home 1014M 40M 975M 4% /home
/dev/sda2 1014M 442M 573M 44% /boot
/dev/mapper/rhvh-tmp 1014M 40M 975M 4% /tmp
/dev/mapper/rhvh-var 15G 249M 15G 2% /var
/dev/mapper/rhvh-var_crash 10G 105M 9.9G 2% /var/crash
/dev/mapper/rhvh-var_log 8.0G 420M 7.6G 6% /var/log
/dev/mapper/rhvh-var_log_audit 2.0G 75M 2.0G 4% /var/log/audit
tmpfs 38G 0 38G 0% /run/user/0
/dev/mapper/INTEL_SSDSC2KB480G8_BTYF830500DJ480BGN 447G 12G 436G 3% /mnt/localstorage
```

Format the partition. mkfs.xfs will not format a mounted device, so unmount it first if it is mounted.

```
[root@rhvh1 ~]# mkfs.xfs -f /dev/mapper/INTEL_SSDSC2KB480G8_BTYF830500DJ480BGN
meta-data=/dev/mapper/INTEL_SSDSC2KB480G8_BTYF830500DJ480BGN isize=512 agcount=4, agsize=29303222 blks
         =                       sectsz=4096  attr=2, projid32bit=1
         =                       crc=1        finobt=1, sparse=1, rmapbt=0
         =                       reflink=1
data     =                       bsize=4096   blocks=117212886, imaxpct=25
         =                       sunit=0      swidth=0 blks
naming   =version 2              bsize=4096   ascii-ci=0, ftype=1
log      =internal log           bsize=4096   blocks=57232, version=2
         =                       sectsz=4096  sunit=1 blks, lazy-count=1
realtime =none                   extsz=4096   blocks=0, rtextents=0
Discarding blocks...Done.
```

```
[root@rhvh1 ~]# mount /dev/mapper/INTEL_SSDSC2KB480G8_BTYF830500DJ480BGN /mnt/localstorage
[root@rhvh1 ~]# df -h
Filesystem Size Used Avail Use% Mounted on
devtmpfs 189G 0 189G 0% /dev
tmpfs 189G 16K 189G 1% /dev/shm
tmpfs 189G 19M 189G 1% /run
tmpfs 189G 0 189G 0% /sys/fs/cgroup
/dev/mapper/rhvh-rhvh--4.4.9.3--0.20211206.0+1 315G 156G 160G 50% /
/dev/mapper/rhvh-home 1014M 40M 975M 4% /home
/dev/sda2 1014M 442M 573M 44% /boot
/dev/mapper/rhvh-tmp 1014M 40M 975M 4% /tmp
/dev/mapper/rhvh-var 15G 249M 15G 2% /var
/dev/mapper/rhvh-var_crash 10G 105M 9.9G 2% /var/crash
/dev/mapper/rhvh-var_log 8.0G 422M 7.6G 6% /var/log
/dev/mapper/rhvh-var_log_audit 2.0G 79M 2.0G 4% /var/log/audit
tmpfs 38G 0 38G 0% /run/user/0
/dev/mapper/INTEL_SSDSC2KB480G8_BTYF830500DJ480BGN 447G 3.2G 444G 1% /mnt/localstorage
```
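Two details are worth checking before RHV uses this directory. First, the fstab listed earlier has no entry for /mnt/localstorage, so this mount would not survive a reboot. Second, RHV's local storage preparation steps expect the directory to be owned by vdsm:kvm (UID/GID 36) with 0755 permissions, that is `chown 36:36 /mnt/localstorage` and `chmod 0755 /mnt/localstorage`. The sketch below prints a matching fstab line and reports what still needs fixing. It only inspects the directory when run on a host where /mnt/localstorage exists.

```python
import os
import stat

VDSM_UID = 36  # vdsm user on RHV hosts
KVM_GID = 36   # kvm group on RHV hosts


def fstab_line(device, mountpoint, fstype="xfs", options="defaults"):
    """Return an /etc/fstab entry so the mount survives a reboot."""
    return f"{device} {mountpoint} {fstype} {options} 0 0"


def local_storage_problems(path):
    """List reasons why `path` may not be usable as RHV local storage yet."""
    st = os.stat(path)
    problems = []
    if (st.st_uid, st.st_gid) != (VDSM_UID, KVM_GID):
        problems.append(f"owner is {st.st_uid}:{st.st_gid}, expected 36:36 (vdsm:kvm)")
    mode = stat.S_IMODE(st.st_mode)
    if mode != 0o755:
        problems.append(f"mode is {oct(mode)}, expected 0o755")
    return problems


if __name__ == "__main__":
    print(fstab_line("/dev/mapper/INTEL_SSDSC2KB480G8_BTYF830500DJ480BGN",
                     "/mnt/localstorage"))
    path = "/mnt/localstorage"
    if os.path.isdir(path):  # only meaningful on the RHVH host itself
        for problem in local_storage_problems(path):
            print("fix:", problem)
```

Referencing the disk by its /dev/mapper name matches what we used above. A filesystem UUID from `blkid` is a common alternative.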

Let us configure local storage.

Click “Configure Local Storage” and provide the path /mnt/localstorage.

Local storage is added under “Data Storage Domains”. We have removed the default Data Center and added Austin data center.
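The data center, cluster, and host hierarchy described earlier can be pictured as a small data structure. The toy model below is plain Python, not the RHV SDK, and the cluster name is hypothetical. It also encodes one RHV rule that matters for this lab. When a host uses local storage, RHV places it in a data center and cluster that no other host can join, so VMs on that storage cannot be live-migrated to another host.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """A group of hosts that acts as a resource pool for VMs."""
    name: str
    hosts: list = field(default_factory=list)


@dataclass
class DataCenter:
    """A logical container for clusters, storage and networks (toy model)."""
    name: str
    local_storage: bool
    clusters: list = field(default_factory=list)

    def host_count(self):
        return sum(len(cluster.hosts) for cluster in self.clusters)

    def add_host(self, cluster, host):
        # RHV keeps a local-storage data center to a single host.
        if self.local_storage and self.host_count() >= 1:
            raise ValueError(f"{self.name}: a local storage data center holds one host")
        cluster.hosts.append(host)


austin = DataCenter("Austin", local_storage=True)
lab_cluster = Cluster("austin-cluster")  # hypothetical cluster name
austin.clusters.append(lab_cluster)
austin.add_host(lab_cluster, "rhvh1")
print(austin.host_count())  # 1
```

Calling `austin.add_host(lab_cluster, "rhvh2")` raises `ValueError`. To get the live migration benefit listed at the start, the controller VMs need shared storage (for example NFS or iSCSI) in a data center with more than one host.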

Creating the Undercloud VM and Virtual Controller VMs

Before we create the undercloud VM and the skeleton controller VMs, we create logical networks and vNIC profiles. This is done through the GUI, as described in the steps below.

Create Controller

Please note that the interfaces must be created in the same order on all controller VMs.
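The ordering matters because TripleO NIC config templates can refer to interfaces by position (nic1, nic2, ...), and one template is applied to every node in the Controller role. A quick cross-check compares each VM's vNIC list, in creation order, against the first VM's list. This is a minimal sketch: the NIC and vNIC profile names are hypothetical, and in practice you would fill the dictionary from the RHV GUI or API.

```python
def nic_order_mismatches(vms):
    """Return names of VMs whose vNIC order differs from the first VM's.

    `vms` maps VM name -> list of (nic_name, vnic_profile) in creation order.
    """
    if not vms:
        return []
    names = list(vms)
    reference = vms[names[0]]
    return [name for name in names[1:] if vms[name] != reference]


# Hypothetical layout: NIC and profile names are illustrative only.
CONTROLLERS = {
    "controller1": [("nic1", "ctlplane"), ("nic2", "trunk")],
    "controller2": [("nic1", "ctlplane"), ("nic2", "trunk")],
    "controller3": [("nic1", "trunk"), ("nic2", "ctlplane")],  # swapped order
}

print(nic_order_mismatches(CONTROLLERS))  # ['controller3']
```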

Set the System options

Set the boot options

We have created the skeleton for the controller VMs and the undercloud. The undercloud is installed with RHEL 8.2, and the controllers get their image from the undercloud.

Interface enp2s0 is the interface on the control plane network. The undercloud intercepts the PXE/DHCP requests coming from the controller and compute nodes.

In the undercloud we define the DHCP range and the introspection IP address range. We will explore this in part 2 of this blog.
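In undercloud.conf these ranges live in the `[ctlplane-subnet]` section as `dhcp_start`, `dhcp_end`, and `inspection_iprange`. The inspection range must not overlap the DHCP range, and both should sit inside the control plane CIDR. Here is a small pre-flight check. The values are hypothetical and the /24 mask is assumed. Our real settings come in part 2.

```python
import ipaddress


def parse_range(start, end):
    """Return (first, last) addresses, rejecting reversed ranges."""
    lo, hi = ipaddress.ip_address(start), ipaddress.ip_address(end)
    if lo > hi:
        raise ValueError(f"range start {lo} is after end {hi}")
    return lo, hi


def check_ctlplane(cidr, dhcp_start, dhcp_end, inspection_iprange):
    """Return a list of problems with the [ctlplane-subnet] address ranges."""
    net = ipaddress.ip_network(cidr)
    dhcp = parse_range(dhcp_start, dhcp_end)
    insp = parse_range(*[part.strip() for part in inspection_iprange.split(",")])
    problems = []
    for label, (lo, hi) in (("dhcp", dhcp), ("inspection", insp)):
        if lo not in net or hi not in net:
            problems.append(f"{label} range is outside {net}")
    # Two closed ranges overlap when each starts before the other ends.
    if dhcp[0] <= insp[1] and insp[0] <= dhcp[1]:
        problems.append("dhcp and inspection ranges overlap")
    return problems


# Hypothetical values for a 10.205.5.0/24 control plane.
print(check_ctlplane("10.205.5.0/24", "10.205.5.20", "10.205.5.120",
                     "10.205.5.150,10.205.5.180"))  # []
```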

Our router configlet

```
vlan 1 name DEFAULT-VLAN
 no untagged ethe 2/1
vlan 11
 tagged ethe 2/1
 router-interface ve 11
vlan 21
 untagged ethe 2/4 ethe 2/15 to 2/16 ethe 2/19 to 2/20
 router-interface ve 21
vlan 55
 untagged ethe 2/8
 router-interface ve 55
vlan 205
 router-interface ve 205
vlan 206
 tagged ethe 2/7 ethe 2/13 ethe 2/17 ethe 2/21
 router-interface ve 206
vlan 207
 tagged ethe 2/7 ethe 2/13 ethe 2/17 ethe 2/21
 router-interface ve 207
vlan 208
 tagged ethe 2/7 ethe 2/13 ethe 2/17 ethe 2/21
 router-interface ve 208
vlan 209
 tagged ethe 2/7 ethe 2/13 ethe 2/17 ethe 2/21
 router-interface ve 209
vlan 805
interface ve 11
 port-name "Mgmt-Nw"
 ip address 10.200.11.6/24
interface ve 21
 port-name RHV-OSP
 ip address 10.205.21.254/24
interface ve 205
 port-name CTR-PLANE
 ip address 10.205.1.254/24
interface ve 206
 port-name Internal Api
 ip address 10.205.6.254/24
interface ve 207
 port-name Tenant
 ip address 10.205.7.254/24
interface ve 208
 port-name External
 ip address 10.205.8.254/24
interface ve 209
 port-name Storage
 ip address 10.205.9.254/24
```
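To cross-check the configlet against the IP address plan, a small parser can pull out each routed VLAN interface with its name, gateway, and subnet. For example, it shows that Storage sits on ve 209. This sketch assumes the configuration is exported one statement per line, with sub-statements indented under their `interface ve N` line, as a running configuration is typically printed. It is not a general Brocade config parser.

```python
import ipaddress
import re

# A few statements copied from our configlet, one statement per line.
SNIPPET = """\
interface ve 11
 port-name "Mgmt-Nw"
 ip address 10.200.11.6/24
interface ve 206
 port-name Internal Api
 ip address 10.205.6.254/24
interface ve 209
 port-name Storage
 ip address 10.205.9.254/24
"""


def routed_interfaces(config_text):
    """Map each routed VLAN interface (ve N) to its name, gateway and subnet."""
    result, current = {}, None
    for raw in config_text.splitlines():
        line = raw.strip()
        if raw and not raw[0].isspace():  # top-level statement
            match = re.fullmatch(r"interface ve (\d+)", line)
            current = int(match.group(1)) if match else None
            if current is not None:
                result[current] = {"name": None, "gateway": None, "subnet": None}
        elif current is not None and line.startswith("port-name "):
            result[current]["name"] = line[len("port-name "):].strip('"')
        elif current is not None and line.startswith("ip address "):
            iface = ipaddress.ip_interface(line.split()[2])
            result[current]["gateway"] = str(iface.ip)
            result[current]["subnet"] = str(iface.network)
    return result


for ve, info in routed_interfaces(SNIPPET).items():
    print(ve, info["name"], info["gateway"], info["subnet"])
```

The gateway addresses it prints are also handy targets for the layer 3 connectivity checks recommended before installation.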

DNS Server Configuration

```
[root@dns ~]# cat /var/named/reverse.itchundredlab.com
$TTL 1D
@       IN SOA  dns.itchundredlab.com. root.itchundredlab.com. (
                                        0       ; serial
                                        1D      ; refresh
                                        1H      ; retry
                                        1W      ; expire
                                        3H )    ; minimum
@       IN NS   dns.itchundredlab.com.
@       IN PTR  itchundredlab.com.
dns     IN A    10.205.21.22
host    IN A    10.205.21.22
22      IN PTR  dns.itchundredlab.com.
21      IN PTR  globalkvm.itchundredlab.com.
5       IN PTR  rhvh1.itchundredlab.com.
30      IN PTR  rhevm.itchundredlab.com.
23      IN PTR  undercloud21.itchundredlab.com.
7       IN PTR  undercloud21.itchundredlab.com.
20      IN PTR  ansible.itchundredlab.com.
[root@dns ~]# cat /var/named/forward.itchundredlab.com
$TTL 1D
@       IN SOA  dns.itchundredlab.com. root.itchundredlab.com. (
                                        0       ; serial
                                        1D      ; refresh
                                        1H      ; retry
                                        1W      ; expire
                                        3H )    ; minimum
@       IN NS   dns.itchundredlab.com.
@       IN A    10.205.21.22
dns     IN A    10.205.21.22
host    IN A    10.205.21.22
globalkvm       IN A    10.205.21.21
rhvh1   IN A    10.205.21.5
rhevm   IN A    10.205.21.30
undercloud21    IN A    10.205.21.23
undercloud21    IN A    10.205.5.7
ansible IN A    10.205.21.20
```
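Keeping forward and reverse zones in step by hand is error-prone. The sketch below derives both record sets from one host table, using Python's `ipaddress` `reverse_pointer` to build the in-addr.arpa name. Writing the PTR owner names fully qualified also makes it explicit which reverse zone each record belongs in. A short label such as `7` is relative to the zone's origin, so if this reverse zone is `21.205.10.in-addr.arpa`, as its other entries suggest, `7` names 10.205.21.7 rather than the undercloud's 10.205.5.7 address. The host list below is a subset of our forward zone.

```python
import ipaddress

# Hosts from our forward zone (short name -> IPv4 address).
HOSTS = {
    "dns": "10.205.21.22",
    "rhvh1": "10.205.21.5",
    "rhevm": "10.205.21.30",
    "undercloud21": "10.205.21.23",
}


def zone_records(hosts, domain):
    """Return matching A records and fully qualified PTR records for each host."""
    forward, reverse = [], []
    for name, ip in sorted(hosts.items()):
        addr = ipaddress.ip_address(ip)
        forward.append(f"{name}\tIN A\t{addr}")
        # reverse_pointer is e.g. "5.21.205.10.in-addr.arpa"; the trailing dot
        # makes the owner name absolute rather than relative to $ORIGIN.
        reverse.append(f"{addr.reverse_pointer}.\tIN PTR\t{name}.{domain}.")
    return forward, reverse


forward, reverse = zone_records(HOSTS, "itchundredlab.com")
print("\n".join(forward + reverse))
```

Each PTR line still has to be placed in the reverse zone that matches its address, for example `5.205.10.in-addr.arpa` for the 10.205.5.0/24 control plane addresses.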

End of Part 1. In the next part we cover the RHOSP 16.1 undercloud and overcloud deployment.

I thank Prahin and Sumit for the lab support.