In my previous blog post (http://bit.ly/4nwff22), I explored how MACVLAN is implemented using Multus. Building on that foundation, let’s now dive deeper into the internals of Kubernetes networking.

Coming from a background in Packet/MPLS data networks and telecom-grade IP networking, my perspective naturally aligns with telco-style architectures. Over the years, I’ve transitioned into cloud-native technologies, and this blog reflects how I analyze Kubernetes networking through the lens of a telecom IP engineer.

The cluster runs Kubernetes v1.33.4 with Calico CNI (default VXLAN mode).

Cluster State

    [root@kubemaster ~]# kubectl get nodes
    NAME                           STATUS   ROLES           AGE   VERSION
    kubemaster.ranjeetbadhe.com    Ready    control-plane   43h   v1.33.4
    kubeworker1.ranjeetbadhe.com   Ready    mazdoor         24h   v1.33.4
    kubeworker2.ranjeetbadhe.com   Ready    mazdoor         25h   v1.33.4

Storage Configuration

Storage is provisioned using an NFS client provisioner:

    [root@kubemaster ~]# kubectl get sc
    NAME                   PROVISIONER                RECLAIMPOLICY   VOLUMEBINDINGMODE   AGE
    nfs-client (default)   cluster.local/nfs-client   Delete          Immediate           24h

Running a Pod with NFS PVC

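Here are two sample manifests, `netshoot1-nfs-pod-worker1.yaml` and `netshoot2-nfs-pod-worker2.yaml`. Each declares the shared NFS-backed PVC and a netshoot pod that mounts it. The pods are pinned to worker1 and worker2 respectively and use custom DNS: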

    [root@kubemaster ~]# cat netshoot1-nfs-pod-worker1.yaml
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: netshoot-pvc
      namespace: default
    spec:
      accessModes: ["ReadWriteMany"]
      resources:
        requests:
          storage: 2Gi
      storageClassName: nfs-client
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: netshoot-nfs-pod1
      namespace: default
    spec:
      # Force custom DNS (must set dnsPolicy: None)
      dnsPolicy: None
      dnsConfig:
        nameservers:
          - 8.8.8.8
          - 192.168.0.1
        # (optional) add your local search domains
        # searches:
        #   - ranjeetbadhe.com
        # (optional) common DNS options:
        # options:
        #   - name: ndots
        #     value: "2"
      nodeSelector:
        kubernetes.io/hostname: kubeworker1.ranjeetbadhe.com
      containers:
        - name: netshoot
          image: nicolaka/netshoot:latest
          command: ["sleep", "infinity"]
          securityContext:
            privileged: true
          volumeMounts:
            - name: nfs-data
              mountPath: /mnt/nfs
      volumes:
        - name: nfs-data
          persistentVolumeClaim:
            claimName: netshoot-pvc

    [root@kubemaster ~]# cat netshoot2-nfs-pod-worker2.yaml
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: netshoot-pvc
      namespace: default
    spec:
      accessModes: ["ReadWriteMany"]
      resources:
        requests:
          storage: 2Gi
      storageClassName: nfs-client
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: netshoot-nfs-pod2
      namespace: default
    spec:
      # Force custom DNS (must set dnsPolicy: None)
      dnsPolicy: None
      dnsConfig:
        nameservers:
          - 8.8.8.8
          - 192.168.0.1
        # (optional) add your local search domains
        # searches:
        #   - ranjeetbadhe.com
        # (optional) common DNS options:
        # options:
        #   - name: ndots
        #     value: "2"
      nodeSelector:
        kubernetes.io/hostname: kubeworker2.ranjeetbadhe.com
      containers:
        - name: netshoot
          image: nicolaka/netshoot:latest
          command: ["sleep", "infinity"]
          securityContext:
            privileged: true
          volumeMounts:
            - name: nfs-data
              mountPath: /mnt/nfs
      volumes:
        - name: nfs-data
          persistentVolumeClaim:
            claimName: netshoot-pvc

Inside the Pods

    ~ # hostname
    netshoot-nfs-pod1

    ~ # mtr -r -c 10 10.244.50.143
    Start: 2025-09-11T13:26:43+0000
    HOST: netshoot-nfs-pod1    Loss%   Snt   Last   Avg  Best  Wrst StDev
      1.|-- 192.168.0.41        0.0%    10    0.1   0.1   0.1   0.1   0.0
      2.|-- 192.168.0.42        0.0%    10    0.3   0.3   0.2   0.3   0.0
      3.|-- 10.244.50.143       0.0%    10    0.3   0.3   0.2   0.3   0.0

    ~ # traceroute 10.244.50.143
    traceroute to 10.244.50.143 (10.244.50.143), 30 hops max, 46 byte packets
     1  192.168.0.41 (192.168.0.41)  0.007 ms  0.005 ms  0.005 ms
     2  192.168.0.42 (192.168.0.42)  0.182 ms  0.090 ms  0.097 ms
     3  10.244.50.143 (10.244.50.143)  0.202 ms  0.126 ms  0.135 ms

    ~ # arp -a
    ? (169.254.1.1) at ee:ee:ee:ee:ee:ee [ether] on eth0
    ? (192.168.0.41) at ee:ee:ee:ee:ee:ee [ether] on eth0

    ~ # ip route
    default via 169.254.1.1 dev eth0
    169.254.1.1 dev eth0 scope link

    ~ # hostname
    netshoot-nfs-pod2

    ~ # mtr -r -c 10 10.244.127.75
    Start: 2025-09-11T13:27:40+0000
    HOST: netshoot-nfs-pod2    Loss%   Snt   Last   Avg  Best  Wrst StDev
      1.|-- 192.168.0.42        0.0%    10    0.1   0.1   0.1   0.1   0.0
      2.|-- 192.168.0.41        0.0%    10    0.3   0.3   0.2   0.3   0.0
      3.|-- 10.244.127.75       0.0%    10    0.2   0.2   0.2   0.3   0.0

    ~ # traceroute 10.244.127.75
    traceroute to 10.244.127.75 (10.244.127.75), 30 hops max, 46 byte packets
     1  192.168.0.42 (192.168.0.42)  0.010 ms  0.007 ms  0.008 ms
     2  192.168.0.41 (192.168.0.41)  0.200 ms  0.083 ms  0.109 ms
     3  10.244.127.75 (10.244.127.75)  0.226 ms  0.120 ms  0.086 ms

Deep Dive: Tracing a Pod-to-Pod Ping in Calico VXLAN

In this section we’ll take a single ping between two pods on different worker nodes and dissect every hop, every actor, and every synthetic trick Calico uses to make it work.

Let’s dive in.

    [root@kubemaster ~]# kubectl get blockaffinities.crd.projectcalico.org -A \
      -o jsonpath='{range .items[*]}{.spec.node}{"\t"}{.spec.cidr}{"\n"}{end}' | sort
    kubemaster.ranjeetbadhe.com     10.244.186.128/26
    kubeworker1.ranjeetbadhe.com    10.244.127.64/26
    kubeworker2.ranjeetbadhe.com    10.244.50.128/26

Reading the output line by line:

– kubemaster.ranjeetbadhe.com 10.244.186.128/26: the master node has been allocated this /26 block of pod IPs (64 addresses).

– kubeworker1.ranjeetbadhe.com 10.244.127.64/26: Worker1 owns this /26 pod subnet.

– kubeworker2.ranjeetbadhe.com 10.244.50.128/26: Worker2 owns this /26 pod subnet.

This output shows how Calico distributed pod IP ranges (/26 blocks) across the nodes. Here, each node owns one block of pod IPs.
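To make the /26 arithmetic concrete, here is a small sketch using Python's standard `ipaddress` module. The block values are copied from the output above; the short node names and the `blocks` dictionary are just illustrative labels, not anything Calico exposes.

```python
import ipaddress

# Block affinities copied from the kubectl output above.
blocks = {
    "kubemaster": ipaddress.ip_network("10.244.186.128/26"),
    "kubeworker1": ipaddress.ip_network("10.244.127.64/26"),
    "kubeworker2": ipaddress.ip_network("10.244.50.128/26"),
}

for node, net in blocks.items():
    # A /26 leaves 32 - 26 = 6 host bits, so 2**6 = 64 addresses per block.
    print(f"{node}: {net[0]} to {net[-1]} ({net.num_addresses} addresses)")
```

Both test pods fall inside these ranges: 10.244.127.75 sits in Worker1's block and 10.244.50.143 in Worker2's.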

    [root@kubemaster ~]# kubectl get blockaffinities -A
    NAME                                            CREATED AT
    kubemaster.ranjeetbadhe.com-10-244-186-128-26   2025-09-08T18:07:22Z
    kubeworker1.ranjeetbadhe.com-10-244-127-64-26   2025-09-09T12:02:00Z
    kubeworker2.ranjeetbadhe.com-10-244-50-128-26   2025-09-09T10:58:56Z

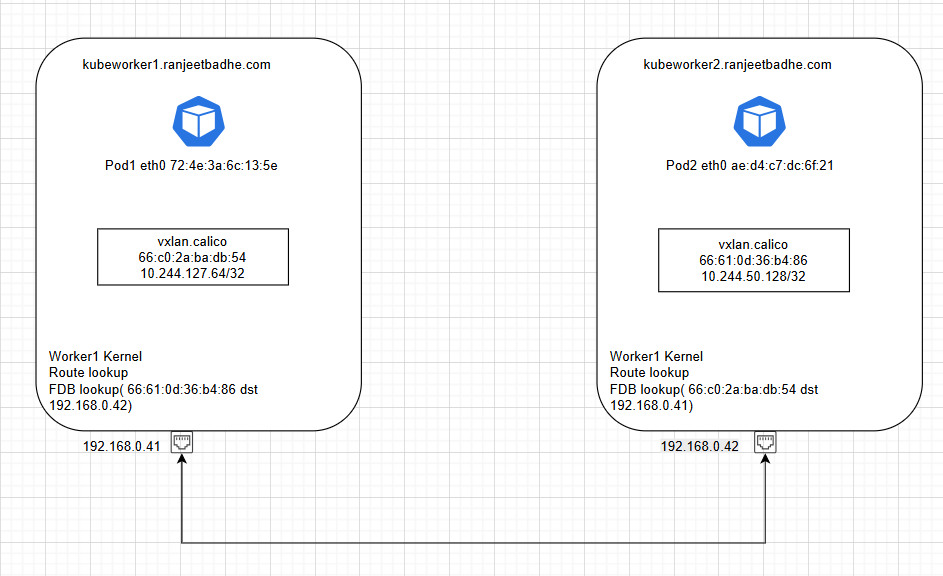

Who Are the Actors

– Source: Pod1 10.244.127.75 on Worker1 (192.168.0.41)

– Destination: Pod2 10.244.50.143 on Worker2 (192.168.0.42)

Let’s trace the packet’s path across veth pairs, host interfaces, VXLAN tunnels, and back.

Data Plane Actors on Worker1

    [root@kubeworker1 ~]# arp -a
    _gateway (192.168.0.1) at 30:de:4b:fa:e2:b5 [ether] on ens192
    ? (192.168.0.180) at b4:b6:86:ff:41:89 [ether] on ens192
    ? (10.244.50.128) at 66:61:0d:36:b4:86 [ether] PERM on vxlan.calico
    ? (192.168.0.40) at 30:de:4b:fa:00:01 [ether] on ens192
    ? (192.168.0.30) at 00:0c:29:8a:7a:9b [ether] on ens192
    ? (10.244.127.75) at 72:4e:3a:6c:13:5e [ether] on cali083129b4ed0
    ? (10.244.186.128) at 66:21:c3:1b:bf:6a [ether] PERM on vxlan.calico

    [root@kubeworker1 ~]# bridge fdb show dev vxlan.calico | grep dst
    66:21:c3:1b:bf:6a dst 192.168.0.40 self permanent
    66:61:0d:36:b4:86 dst 192.168.0.42 self permanent

    [root@kubeworker1 ~]# ip -br link | egrep 'cali|vxlan'
    cali083129b4ed0@if2   UP        ee:ee:ee:ee:ee:ee
    cali4fecdc12ab9@if2   UP        ee:ee:ee:ee:ee:ee
    vxlan.calico          UNKNOWN   66:c0:2a:ba:db:54

– Pod1 eth0@if3 – 72:4e:3a:6c:13:5e

– Host veth peer – cali083129b4ed0@if2, MAC ee:ee:ee:ee:ee:ee

– VXLAN device – vxlan.calico, MAC 66:c0:2a:ba:db:54, VTEP IP 10.244.127.64/32

– Physical NIC – ens192, MAC 00:0c:29:33:70:1f, node IP 192.168.0.41

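Each node’s VTEP IP is the base address of its /26 block (10.244.127.64 on Worker1). Calico uses that address as a synthetic next hop on the other nodes’ vxlan.calico devices, which is why Worker2 holds a permanent ARP entry for it.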

Data Plane Actors on Worker2

    [root@kubeworker2 ~]# bridge fdb show dev vxlan.calico | grep dst
    66:c0:2a:ba:db:54 dst 192.168.0.41 self permanent
    66:21:c3:1b:bf:6a dst 192.168.0.40 self permanent

    [root@kubeworker2 ~]# ip -br link | egrep 'cali|vxlan'
    vxlan.calico          UNKNOWN   66:61:0d:36:b4:86
    calif5b1e6b1e5d@if2   UP        ee:ee:ee:ee:ee:ee
    calif33d1421500@if2   UP        ee:ee:ee:ee:ee:ee
    calib6390c15d96@if2   UP        ee:ee:ee:ee:ee:ee

    [root@kubeworker2 ~]# arp -a
    ? (192.168.0.30) at 00:0c:29:8a:7a:9b [ether] on ens192
    ? (10.244.50.144) at a2:29:d7:49:fa:74 [ether] on calib6390c15d96
    ? (192.168.0.180) at b4:b6:86:ff:41:89 [ether] on ens192
    ? (10.244.127.64) at 66:c0:2a:ba:db:54 [ether] PERM on vxlan.calico
    ? (192.168.0.40) at 30:de:4b:fa:00:01 [ether] on ens192
    ? (192.168.0.41) at 00:0c:29:33:70:1f [ether] on ens192
    ? (10.244.186.128) at 66:21:c3:1b:bf:6a [ether] PERM on vxlan.calico
    ? (10.244.50.143) at ae:d4:c7:dc:6f:21 [ether] on calif33d1421500
    _gateway (192.168.0.1) at 30:de:4b:fa:e2:b5 [ether] on ens192

– VXLAN device – vxlan.calico, MAC 66:61:0d:36:b4:86, VTEP IP 10.244.50.128/32

– Host veth peer – calif33d1421500@if2

– Pod2 eth0@if7 – ae:d4:c7:dc:6f:21

– Physical NIC – ens192, MAC 00:0c:29:30:a3:71, node IP 192.168.0.42

Routing & Control Plane Hints

Node block affinities:

– Worker1 → 10.244.127.64/26

– Worker2 → 10.244.50.128/26

The Packet Journey: Pod1 → Pod2

1. Inside Pod1

Pod1’s only route is default via 169.254.1.1, so the echo request leaves through eth0.

2. Host veth (Worker1)

Pod1’s packet enters the host through cali083129b4ed0, the host end of the pod’s veth pair.

3. Routing Decision

Destination 10.244.50.143 lies in Worker2’s /26 block → forward via VXLAN.
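The routing decision can be sketched as a lookup against the block affinities. This is a toy model only: on a real node the kernel routing table programmed by Calico makes the decision, and the `next_hop` function and its return labels are hypothetical names chosen for illustration.

```python
import ipaddress

# Block affinities from the cluster above (short node names are illustrative).
BLOCKS = {
    "kubemaster": ipaddress.ip_network("10.244.186.128/26"),
    "kubeworker1": ipaddress.ip_network("10.244.127.64/26"),
    "kubeworker2": ipaddress.ip_network("10.244.50.128/26"),
}

def next_hop(dest_ip: str, local_node: str):
    """Return (owning node, egress path) for a destination, as seen from local_node."""
    dest = ipaddress.ip_address(dest_ip)
    for node, block in BLOCKS.items():
        if dest in block:
            # Same node: deliver over the pod's veth. Other node: tunnel it.
            path = "local veth" if node == local_node else "vxlan.calico"
            return node, path
    # Not a pod address known to this model: falls through to the default route.
    return None, "default route"

print(next_hop("10.244.50.143", "kubeworker1"))
```

Seen from Worker1, the destination belongs to Worker2's block, so the model picks the VXLAN device, matching the step above.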

4. VXLAN Encapsulation (Worker1)

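Inner L3: 10.244.127.75 → 10.244.50.143

Inner L2: 66:c0:2a:ba:db:54 → 66:61:0d:36:b4:86 (the vxlan.calico MACs of Worker1 and Worker2)

Outer L3: 192.168.0.41 → 192.168.0.42

Outer L2: 00:0c:29:33:70:1f → 00:0c:29:30:a3:71 (the ens192 MACs, since both nodes share the 192.168.0.x segment)

Outer L4: UDP, destination port 4789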
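For readers who want to see what the encapsulation adds on the wire, here is a minimal sketch that builds the 8-byte VXLAN header described in RFC 7348 and totals the per-packet overhead on an IPv4 underlay. The VNI value is an assumption (4096 is Calico's documented default; this post does not show the configured value), and `vxlan_header` is a hypothetical helper name.

```python
import struct

VXLAN_PORT = 4789    # UDP destination port seen in the trace above
ASSUMED_VNI = 4096   # assumption: Calico's documented default, not shown in this post

def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header from RFC 7348."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    flags = 0x08  # I flag set: the VNI field is valid
    # Word 1: flags (8 bits) + reserved (24 bits).
    # Word 2: VNI (24 bits) + reserved (8 bits).
    return struct.pack("!II", flags << 24, vni << 8)

header = vxlan_header(ASSUMED_VNI)

# Bytes added in front of the inner IP packet:
# outer IPv4 (20) + outer UDP (8) + VXLAN (8) + inner Ethernet (14).
overhead = 20 + 8 + len(header) + 14

print(header.hex(), overhead)
```

The 50 bytes of overhead are why pod interfaces behind a VXLAN tunnel typically run with a smaller MTU than the underlay NIC.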

5. Decapsulation (Worker2)

VXLAN header stripped, inner packet revealed.

6. Host veth (Worker2)

Delivered via calif33d1421500 to Pod2’s eth0.

7. Reply Path

Pod2 responds with ICMP echo-reply → exact reverse flow.

The Mystery of 169.254.1.1

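Pods are assigned /32 IPs, so no other address is on-link from the pod’s point of view. To force every packet to be routed by the host, Calico installs a synthetic link-local gateway inside the pod:

    default via 169.254.1.1 dev eth0
    169.254.1.1 dev eth0 scope link

The pod still ARPs for 169.254.1.1, and the host end of the veth answers via proxy ARP with ee:ee:ee:ee:ee:ee, which is the entry visible in the pod’s ARP table earlier. This ensures all pod traffic goes through the host for policy and routing.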
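A quick way to convince yourself of the two properties this trick relies on is to check them with Python's `ipaddress` module. The addresses are the ones from this lab; the variable names are illustrative.

```python
import ipaddress

pod_if = ipaddress.ip_interface("10.244.127.75/32")  # Pod1's address
peer = ipaddress.ip_address("10.244.50.143")         # Pod2's address
gateway = ipaddress.ip_address("169.254.1.1")        # synthetic gateway

# A /32 network contains only the pod itself, so nothing else is on-link.
only_self = pod_if.network.num_addresses == 1
peer_on_link = peer in pod_if.network

# 169.254.0.0/16 is the IPv4 link-local range, never routed beyond the link.
gateway_link_local = gateway.is_link_local

print(only_self, peer_on_link, gateway_link_local)
```

Because the peer is never on-link, the pod has no choice but to hand every packet to the gateway, and because the gateway is link-local it cannot collide with a real routable address.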

What is the FDB?

The Forwarding Database (FDB) is the Linux bridge layer’s MAC → next-hop mapping table. Think of it as an ‘address book’ for MAC-to-node mappings. On a VXLAN device, each entry maps a remote VTEP MAC to the underlay IP of the node hosting it, and Calico programs one permanent entry per peer:

    bridge fdb show dev vxlan.calico
    66:61:0d:36:b4:86 dst 192.168.0.42 self permanent
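The two lookups Worker1 performs to reach Worker2 (VTEP IP to VTEP MAC via the permanent ARP entry, then VTEP MAC to underlay IP via the FDB) can be sketched by parsing the command output captured earlier. The sample text is copied from Worker1; `parse_arp` and `parse_fdb` are hypothetical helper names, and the regular expressions only cover the line formats shown in this post.

```python
import re

# Trimmed output captured on Worker1 earlier in this post.
ARP_OUTPUT = """\
? (10.244.50.128) at 66:61:0d:36:b4:86 [ether] PERM on vxlan.calico
? (10.244.186.128) at 66:21:c3:1b:bf:6a [ether] PERM on vxlan.calico
"""
FDB_OUTPUT = """\
66:21:c3:1b:bf:6a dst 192.168.0.40 self permanent
66:61:0d:36:b4:86 dst 192.168.0.42 self permanent
"""

def parse_arp(text: str) -> dict:
    """Map VTEP IP -> VTEP MAC for entries on vxlan.calico."""
    pattern = re.compile(
        r"\((?P<ip>[\d.]+)\) at (?P<mac>[0-9a-f:]{17}) .* on vxlan\.calico"
    )
    return {m["ip"]: m["mac"] for m in pattern.finditer(text)}

def parse_fdb(text: str) -> dict:
    """Map VTEP MAC -> underlay node IP."""
    pattern = re.compile(r"^(?P<mac>[0-9a-f:]{17}) dst (?P<ip>[\d.]+)", re.MULTILINE)
    return {m["mac"]: m["ip"] for m in pattern.finditer(text)}

arp = parse_arp(ARP_OUTPUT)
fdb = parse_fdb(FDB_OUTPUT)

vtep_ip = "10.244.50.128"     # next hop for Worker2's /26 block
vtep_mac = arp[vtep_ip]       # step 1: permanent ARP entry
underlay_ip = fdb[vtep_mac]   # step 2: FDB entry
print(f"{vtep_ip} -> {vtep_mac} -> {underlay_ip}")
```

The chain resolves to 192.168.0.42, Worker2's node IP, which is exactly the outer destination address of the encapsulated packet.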

– vxlan.calico exists on every node.

– /32 VTEP IPs come from each node’s pod block.

– FDB ties remote VTEP MACs → underlay IPs.

– 169.254.1.1 is a synthetic hop.

– Pod-to-pod latency across VXLAN in this lab is roughly 0.1–0.3 ms, per the mtr and traceroute output above.