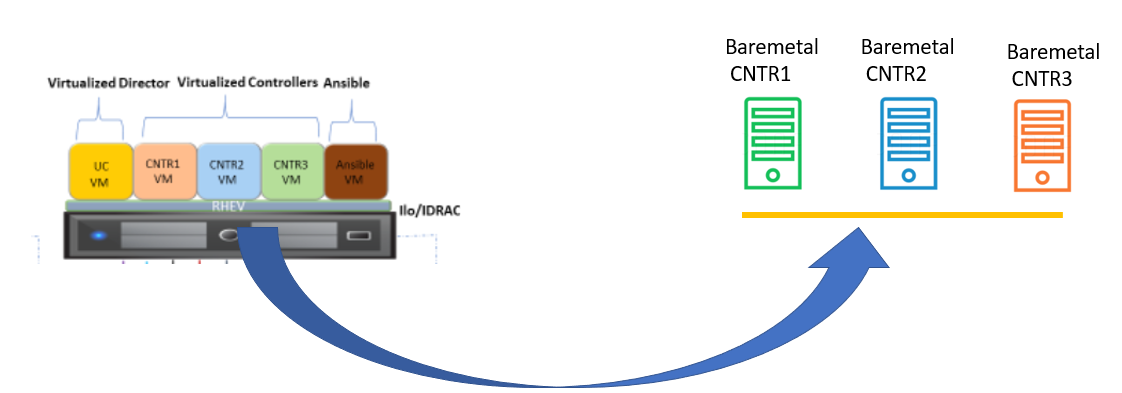

Recently I worked with the “Technology Strategy” department of a customer in the Asia Pacific region to build a 5G core network (a Kubernetes cluster) on OpenStack 16.2. The customer's Chief Technology Officer (CTO) asked us to evaluate options for deploying OpenStack controllers on physical bare-metal nodes, and for a methodology to migrate the controllers from virtual to bare-metal nodes. Through our lab trials we were able to develop a methodology to accomplish both tasks. In the previous blog we saw the deployment of RHOSP 16.2 with virtualized controllers. In this blog I provide the exact steps and strategy to migrate virtual controllers to physical bare-metal controllers.

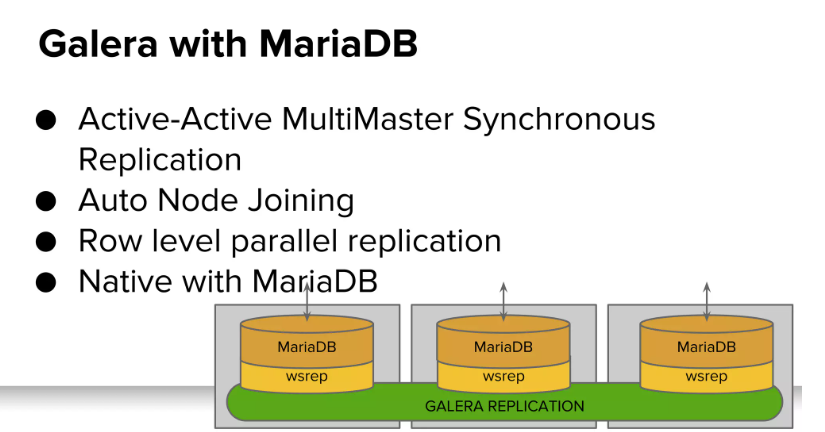

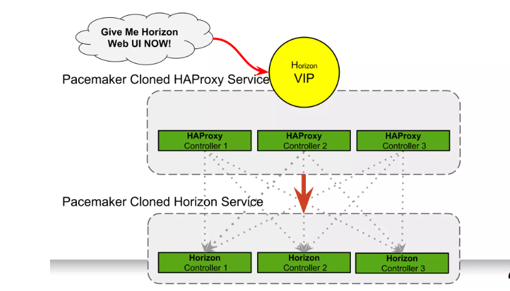

We are going to deal with the terms RabbitMQ, Galera, Redis, HAProxy bundle, Corosync, STONITH and fencing. Before attempting the migration, the administrator needs to understand, at least at a high level, how Pacemaker manages high availability, how fencing is achieved with STONITH, how HAProxy does load balancing, and how database replication is done with Galera.

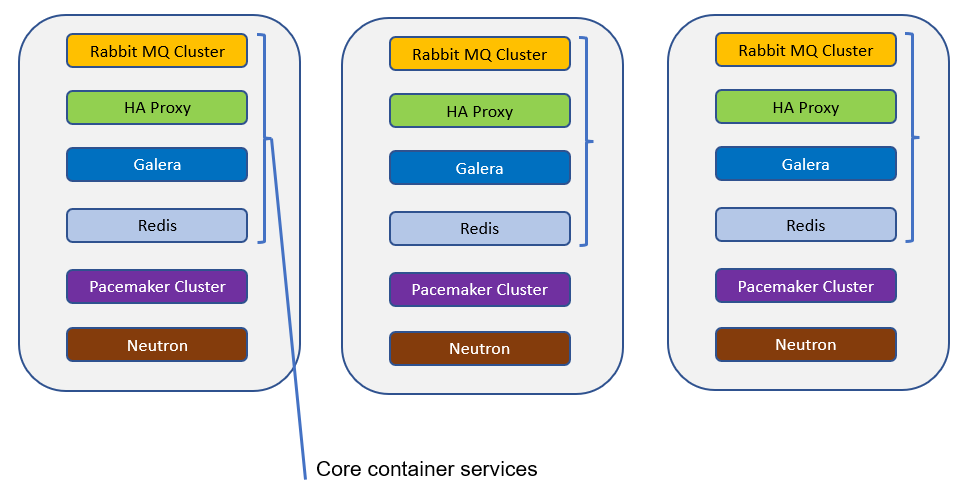

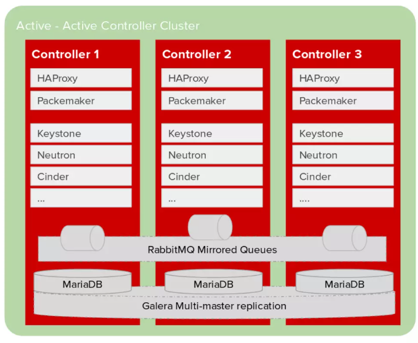

Red Hat OpenStack Platform (RHOSP) employs several technologies to provide the services required to implement high availability (HA). These services include Galera, RabbitMQ, Redis, HAProxy, individual services that Pacemaker manages, and systemd and plain container services that Podman manages. The core container services are Galera, RabbitMQ, Redis, and HAProxy. These services run on all Controller nodes and require specific management and constraints for start, stop, and restart actions. You use Pacemaker to launch, manage, and troubleshoot the core container services.

Pacemaker manages the following resource types:

Bundle:

A bundle resource configures and replicates the same container on all Controller nodes, maps the necessary storage paths to the container directories, and sets specific attributes related to the resource itself.

Container:

A container can run different kinds of resources, from simple systemd services like HAProxy to complex services like Galera, which require specific resource agents that control and set the state of the service on the different nodes. Pacemaker is a cluster resource manager (CRM) that manages the resources that make up the cluster, such as IP addresses, mount points, file systems, DRBD devices, and services such as MySQL.

Fencing is the process of isolating a node to protect a cluster and its resources.

STONITH is an acronym for “Shoot The Other Node In The Head”, and it protects your data from being corrupted by rogue nodes or concurrent access. Director uses Pacemaker to provide a highly available cluster of Controller nodes, and Pacemaker uses STONITH to fence failed nodes. If a Controller node fails a health check, the Controller node that acts as the Pacemaker designated coordinator (DC) uses the Pacemaker stonith service to fence the impacted Controller node. STONITH is disabled by default and requires manual configuration so that Pacemaker can control the power management of each node in the cluster.
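To see which Controller currently acts as the DC, you can read the `Current DC:` line of `pcs status`. The sketch below is a small POSIX shell helper (the name `pcs_current_dc` is my own, hypothetical) that extracts that node name, assuming the `pcs status` layout shown later in this post.

```shell
# pcs_current_dc (hypothetical helper): read `pcs status` text on stdin and
# print the name of the Pacemaker designated coordinator (DC).
# Assumes a line of the form "  * Current DC: <node> (version ...) - ...".
pcs_current_dc() {
    sed -n 's/.*Current DC:[[:space:]]*\([^[:space:]]*\).*/\1/p'
}

# Example usage from the undercloud:
#   ssh heat-admin@192.168.6.123 'sudo pcs status' | pcs_current_dc
```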

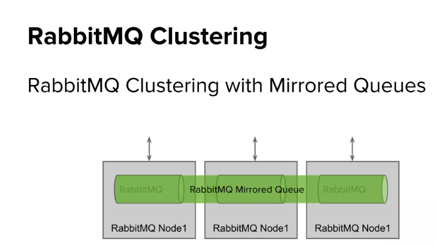

RabbitMQ uses the concept of mirrored queues: high availability is achieved by mirroring queues across the RabbitMQ nodes of a cluster, so that messages stay available when a node fails. When a queue is created on a node in the cluster, that node holds the master copy of the queue.

With RabbitMQ high availability enabled for a queue, a mirror of it is created on the other nodes of the cluster, and the messages in the master queue are synchronized with the mirrors. Although mirrors exist, all publish and consume requests are directed only to the master queue: even if a client sends a request to a node that holds only a mirror, the request is forwarded to the node that holds the master. RabbitMQ does this to maintain message integrity between the master queue and its mirrors.
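Mirroring is driven by a RabbitMQ policy, which you can list with `rabbitmqctl list_policies` inside the rabbitmq-bundle container. The helper below (`has_ha_policy`, a hypothetical name) is only a rough sketch: it assumes the JSON definition of each policy appears on that policy's output line, and it checks nothing more than the presence of an `ha-mode` key, not the queue pattern or the number of mirrors.

```shell
# has_ha_policy (hypothetical helper): succeed if `rabbitmqctl list_policies`
# output on stdin contains at least one policy definition with an "ha-mode" key.
has_ha_policy() {
    grep -q '"ha-mode"'
}

# Example usage (same podman exec pattern as the cluster_status check in this post):
#   ssh heat-admin@192.168.6.123 "sudo podman exec \$(sudo podman ps -f name=rabbitmq-bundle -q) rabbitmqctl list_policies" | has_ha_policy && echo "HA policy present"
```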

COROSYNC/PACEMAKER

Pacemaker is a Cluster Resource Manager (CRM). It starts and stops services, and contains logic to ensure both that they are running and that they run in only one location (to avoid data corruption).

Corosync provides the messaging layer and talks to instances of itself on the other node(s). Corosync provides reliable communication between nodes, manages cluster membership and determines quorum.
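Quorum, which Corosync determines, can be checked on any Controller with `corosync-quorumtool -s`. The sketch below (hypothetical helper `is_quorate`) simply looks for the `Quorate: Yes` line in that output; it assumes the standard corosync 3 output layout, where the label starts at the beginning of the line.

```shell
# is_quorate (hypothetical helper): succeed if `corosync-quorumtool -s` output
# on stdin reports "Quorate: Yes".
is_quorate() {
    grep -Eq '^Quorate:[[:space:]]+Yes'
}

# Example usage from the undercloud:
#   ssh heat-admin@192.168.6.123 'sudo corosync-quorumtool -s' | is_quorate || echo "cluster is NOT quorate"
```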

Every OpenStack controller runs HAProxy, which manages a single External Virtual IP (VIP) for all controller nodes and provides HTTP and TCP load balancing of requests going to OpenStack API services, RabbitMQ, and MySQL.

When an end user accesses the OpenStack cloud using Horizon or makes a request to the REST API for services such as nova-api, glance-api, keystone-api, neutron-api, nova-scheduler or MySQL, the request goes to the live controller node currently holding the External VIP, and the connection gets terminated by HAProxy. When the next request comes in, HAProxy handles it, and may send it to the original controller or another in the environment, depending on load conditions.
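In this deployment the VIPs themselves appear in `pcs status` as `ocf::heartbeat:IPaddr2` resources (for example `ip-192.168.6.120`), so you can find the controller currently holding a VIP from that output. A minimal sketch, assuming the `pcs status` line layout shown in this post; `vip_owner` is a hypothetical helper name.

```shell
# vip_owner (hypothetical helper): read `pcs status` text on stdin and print the
# node on which the IPaddr2 resource "ip-<VIP>" is started.
# Usage: vip_owner <VIP address>
vip_owner() {
    awk -v res="ip-$1" '{
        # Find the resource name; two fields later pcs prints "Started",
        # followed by the node name.
        for (i = 1; i <= NF; i++)
            if ($i == res && $(i + 2) == "Started")
                print $(i + 3)
    }'
}

# Example usage from the undercloud:
#   ssh heat-admin@192.168.6.123 'sudo pcs status' | vip_owner 192.168.6.120
```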

Each of the services housed on the controller nodes has its own mechanism for achieving HA:

OpenStack services, such as nova-api, glance-api, keystone-api, neutron-api, nova-scheduler, cinder-api are stateless services that do not require any special attention besides load balancing.

Horizon, as a typical web application, requires sticky sessions to be enabled at the load balancer.

RabbitMQ provides active/active high availability using mirrored queues and is deployed with custom resource agent scripts for Pacemaker.

MySQL high availability is achieved through a Galera deployment and custom resource agent scripts for Pacemaker. Note that HAProxy configures the MySQL backends as active/passive, because OpenStack support for multi-node writes to Galera nodes is not production ready yet.

Neutron agents are active/passive and are managed by custom resource agent scripts for Pacemaker.

Now that we understand the main components running on a Controller node, let's perform the migration step by step.

The control plane IP addresses of my controllers are:

- 192.168.6.121 (already migrated)
- 192.168.6.122 (migrated in this post)
- 192.168.6.123

Today we migrate the controller node with IP address 192.168.6.122. Let's move forward with the steps.

- Check the status of Pacemaker on the running Controller nodes

```
(undercloud) [stack@director ~]$ ssh heat-admin@192.168.6.122 'sudo pcs status'
Cluster name: tripleo_cluster
Cluster Summary:
  * Stack: corosync
  * Current DC: melbourne-controller-2 (version 2.0.3-5.el8_2.3-4b1f869f0f) - partition with quorum
  * Last updated: Tue Jul 6 19:41:09 2022
  * Last change: Tue Jul 6 03:26:46 2022 by root via cibadmin on melbourne02-overcloud-controller-0
  * 12 nodes configured
  * 35 resource instances configured

Node List:
  * Online: [ melbourne-controller-1 melbourne-controller-2 melbourne02-overcloud-controller-0 ]
  * GuestOnline: [ galera-bundle-0@melbourne-controller-1 galera-bundle-1@melbourne-controller-2 galera-bundle-2@melbourne01bng02-overcloud-controller-0 rabbitmq-bundle-0@melbourne-controller-2 rabbitmq-bundle-1@melbourne-controller-1 rabbitmq-bundle-2@melbourne01bng02-overcloud-controller-0 redis-bundle-0@melbourne-controller-1 redis-bundle-1@melbourne-controller-2 redis-bundle-2@melbourne01bng02-overcloud-controller-0 ]

Full List of Resources:
  * Container bundle set: galera-bundle [cluster.common.tag/openstack-mariadb:pcmklatest]:
    * galera-bundle-0 (ocf::heartbeat:galera): Master melbourne-controller-1
    * galera-bundle-1 (ocf::heartbeat:galera): Master melbourne-controller-2
    * galera-bundle-2 (ocf::heartbeat:galera): Master melbourne02-overcloud-controller-0
  * Container bundle set: rabbitmq-bundle [cluster.common.tag/openstack-rabbitmq:pcmklatest]:
    * rabbitmq-bundle-0 (ocf::heartbeat:rabbitmq-cluster): Started melbourne-controller-2
    * rabbitmq-bundle-1 (ocf::heartbeat:rabbitmq-cluster): Started melbourne-controller-1
    * rabbitmq-bundle-2 (ocf::heartbeat:rabbitmq-cluster): Started melbourne02-overcloud-controller-0
  * Container bundle set: redis-bundle [cluster.common.tag/openstack-redis:pcmklatest]:
    * redis-bundle-0 (ocf::heartbeat:redis): Slave melbourne-controller-1
    * redis-bundle-1 (ocf::heartbeat:redis): Master melbourne-controller-2
    * redis-bundle-2 (ocf::heartbeat:redis): Slave melbourne02-overcloud-controller-0
  * ip-192.168.6.120 (ocf::heartbeat:IPaddr2): Started melbourne02-overcloud-controller-0
  * ip-172.20.21.120 (ocf::heartbeat:IPaddr2): Started melbourne02-overcloud-controller-0
  * ip-172.16.20.254 (ocf::heartbeat:IPaddr2): Started melbourne-controller-1
  * ip-172.16.20.120 (ocf::heartbeat:IPaddr2): Started melbourne-controller-2
  * Container bundle set: haproxy-bundle [cluster.common.tag/openstack-haproxy:pcmklatest]:
    * haproxy-bundle-podman-0 (ocf::heartbeat:podman): Started melbourne02-overcloud-controller-0
    * haproxy-bundle-podman-1 (ocf::heartbeat:podman): Started melbourne-controller-1
    * haproxy-bundle-podman-2 (ocf::heartbeat:podman): Started melbourne-controller-2
  * Container bundle: openstack-cinder-volume [cluster.common.tag/openstack-cinder-volume:pcmklatest]:
    * openstack-cinder-volume-podman-0 (ocf::heartbeat:podman): Started melbourne02-overcloud-controller-0

Daemon Status:
  corosync: active/enabled
  pacemaker: active/enabled
  pcsd: active/enabled
```

```
(undercloud) [stack@director templates]$ openstack server list -c Name -c Networks
+--------------------------------------+------------------------+
| Name                                 | Networks               |
+--------------------------------------+------------------------+
| melbourne02-overcloud-controller-0   | ctlplane=192.168.6.121 |
| melbourne16-overcloud-compute-6      | ctlplane=192.168.6.19  |
| melbourne17-overcloud-compute-5      | ctlplane=192.168.6.26  |
| melbourne07-overcloud-computemme-1   | ctlplane=192.168.6.20  |
| melbourne08-overcloud-computemme-0   | ctlplane=192.168.6.27  |
| melbourne11-overcloud-compute-4      | ctlplane=192.168.6.14  |
| melbourne10-overcloud-compute-3      | ctlplane=192.168.6.28  |
| melbourne09-overcloud-compute-2      | ctlplane=192.168.6.31  |
| melbourne06-overcloud-computesriov-1 | ctlplane=192.168.6.23  |
| melbourne05-overcloud-computesriov-0 | ctlplane=192.168.6.11  |
| melbourne-controller-2               | ctlplane=192.168.6.123 |
| melbourne-controller-1               | ctlplane=192.168.6.122 |
| melbourne14-overcloud-compute-0      | ctlplane=192.168.6.32  |
| melbourne15-overcloud-compute-1      | ctlplane=192.168.6.29  |
+--------------------------------------+------------------------+
```
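Before touching anything, it is worth confirming from the `pcs status` output above that all three galera-bundle instances are `Master`. A small sketch with a hypothetical helper `galera_master_count`, assuming the pcs 0.10 line layout `* galera-bundle-N (ocf::heartbeat:galera): Master <node>` shown above:

```shell
# galera_master_count (hypothetical helper): count galera-bundle instances
# reported as Master in `pcs status` text read from stdin.
galera_master_count() {
    awk '$2 ~ /^galera-bundle-[0-9]+$/ && $4 == "Master" { n++ } END { print n + 0 }'
}

# Example usage: expect 3 before starting the migration.
#   ssh heat-admin@192.168.6.122 'sudo pcs status' | galera_master_count
```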

- Check the following parameters on each node of the overcloud MariaDB cluster:

  - wsrep_local_state_comment: Synced
  - wsrep_cluster_size: 3 (the number of Controller nodes in the Galera cluster)

```
(undercloud) [stack@director ~]$ for i in 192.168.6.121 192.168.6.122 192.168.6.123 ; do echo "*** $i ***" ; ssh heat-admin@$i "sudo podman exec \$(sudo podman ps --filter name=galera-bundle -q) mysql -e \"SHOW STATUS LIKE 'wsrep_local_state_comment'; SHOW STATUS LIKE 'wsrep_cluster_size';\""; done
*** 192.168.6.121 ***
Variable_name Value
wsrep_local_state_comment Synced
Variable_name Value
wsrep_cluster_size 3
*** 192.168.6.122 ***
The authenticity of host '192.168.6.122 (192.168.6.122)' can't be established.
ECDSA key fingerprint is SHA256:VpLCVML7FyrxLxaixohlxd9wBrU1+JpgM0LycD1qIog.
Are you sure you want to continue connecting (yes/no/[fingerprint])? yes
Warning: Permanently added '192.168.6.122' (ECDSA) to the list of known hosts.
Variable_name Value
wsrep_local_state_comment Synced
Variable_name Value
wsrep_cluster_size 3
*** 192.168.6.123 ***
Variable_name Value
wsrep_local_state_comment Synced
Variable_name Value
wsrep_cluster_size 3
```
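Reading three blocks of output by eye is error-prone, so the same check can be turned into a pass/fail test per node. A minimal sketch, assuming the two-column `Variable_name Value` output shown above; `galera_node_ok` is a hypothetical helper name.

```shell
# galera_node_ok (hypothetical helper): read the mysql output of the two
# SHOW STATUS queries on stdin and succeed only if the node is Synced and
# wsrep_cluster_size equals the expected value.
# Usage: galera_node_ok <expected_cluster_size>
galera_node_ok() {
    awk -v want="$1" '
        $1 == "wsrep_local_state_comment" { state = $2 }
        $1 == "wsrep_cluster_size"        { size = $2 }
        END { exit !(state == "Synced" && size == want) }'
}

# Example usage from the undercloud, reusing the loop above:
#   for i in 192.168.6.121 192.168.6.122 192.168.6.123; do
#     ssh heat-admin@$i "sudo podman exec \$(sudo podman ps --filter name=galera-bundle -q) mysql -e \"SHOW STATUS LIKE 'wsrep_local_state_comment'; SHOW STATUS LIKE 'wsrep_cluster_size';\"" \
#       | galera_node_ok 3 && echo "$i OK" || echo "$i NOT READY"
#   done
```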

- Check the RabbitMQ status

```
(undercloud) [stack@director ~]$ ssh heat-admin@192.168.6.123 "sudo podman exec \$(sudo podman ps -f name=rabbitmq-bundle -q) rabbitmqctl cluster_status"
Cluster status of node rabbit@melbourne-controller-2 ...
[{nodes,[{disc,['rabbit@melbourne-controller-2',
                'rabbit@melbourne01bng02-overcloud-controller-0',
                'rabbit@melbourne01bng04-overcloud-controller-1']}]},
 {running_nodes,['rabbit@melbourne01bng04-overcloud-controller-1',
                 'rabbit@melbourne01bng02-overcloud-controller-0',
                 'rabbit@melbourne-controller-2']},
 {cluster_name,<<"rabbit@melbourne-controller-2.localdomain">>},
 {partitions,[]},
 {alarms,[{'rabbit@melbourne01bng04-overcloud-controller-1',[]},
          {'rabbit@melbourne01bng02-overcloud-controller-0',[]},
          {'rabbit@melbourne-controller-2',[]}]}]
```
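The key line in this output is `{partitions,[]}`: an empty list means no network partition was detected. A minimal sketch of that check, assuming the Erlang-term output format shown above (newer RabbitMQ releases print a different, human-readable format); `rabbit_no_partitions` is a hypothetical helper name.

```shell
# rabbit_no_partitions (hypothetical helper): succeed if the Erlang-term output
# of `rabbitmqctl cluster_status` on stdin reports an empty partitions list.
rabbit_no_partitions() {
    # Drop spaces and newlines so the term can be matched as one fixed string.
    tr -d ' \n' | grep -qF '{partitions,[]}'
}

# Example usage from the undercloud:
#   ssh heat-admin@192.168.6.123 "sudo podman exec \$(sudo podman ps -f name=rabbitmq-bundle -q) rabbitmqctl cluster_status" | rabbit_no_partitions && echo "no partitions"
```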

- Check whether fencing is disabled

```
(undercloud) [stack@director ~]$ ssh heat-admin@192.168.6.122 "sudo pcs property show stonith-enabled"
Cluster Properties:
 stonith-enabled: false
```

If fencing is still enabled, run the following command to disable it:

```
(undercloud) $ ssh heat-admin@192.168.6.122 "sudo pcs property set stonith-enabled=false"
```
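The check and the fix can be combined so that the property is only changed when needed. A minimal sketch with a hypothetical helper `stonith_disabled`, assuming the `pcs property show` output format above; if the property is not set explicitly, the helper fails and the `set` command runs, which matches the default of fencing being enabled.

```shell
# stonith_disabled (hypothetical helper): succeed if `pcs property show
# stonith-enabled` output on stdin reports "stonith-enabled: false".
stonith_disabled() {
    grep -Eq '^[[:space:]]*stonith-enabled:[[:space:]]*false'
}

# Example: disable fencing only when it is not already disabled.
#   ssh heat-admin@192.168.6.122 "sudo pcs property show stonith-enabled" | stonith_disabled \
#     || ssh heat-admin@192.168.6.122 "sudo pcs property set stonith-enabled=false"
```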

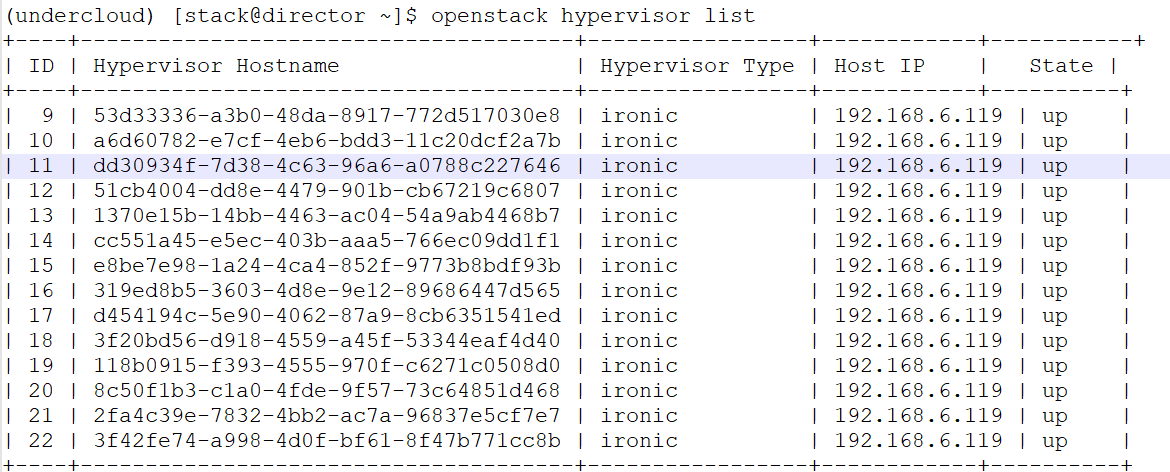

- Check that the Compute services are active on the director node:

```
(undercloud) [stack@director ~]$ openstack hypervisor list
```

- Remove the node from the cluster. Because we treat this node as unreachable, the `--skip-offline` and `--force` options are required.


```
(undercloud) [stack@director templates]$ ssh heat-admin@192.168.6.123 "sudo pcs cluster node remove melbourne-controller-1 --skip-offline --force"
Destroying cluster on hosts: 'melbourne-controller-1'...
melbourne-controller-1: Successfully destroyed cluster
Sending updated corosync.conf to nodes...
melbourne-controller-2: Succeeded
melbourne01bng02-overcloud-controller-0: Succeeded
melbourne01bng02-overcloud-controller-0: Corosync configuration reloaded
```

```
(undercloud) [stack@director templates]$ ssh heat-admin@192.168.6.123 "sudo pcs status"
Cluster name: tripleo_cluster
Cluster Summary:
  * Stack: corosync
  * Current DC: melbourne-controller-2 (version 2.0.3-5.el8_2.3-4b1f869f0f) - partition with quorum
  * Last updated: Wed Jul 7 00:22:25 2022
  * Last change: Wed Jul 7 00:21:37 2022 by hacluster via crm_node on melbourne-controller-2
  * 11 nodes configured
  * 35 resource instances configured

Node List:
  * Online: [ melbourne-controller-2 melbourne02-overcloud-controller-0 ]
  * GuestOnline: [ galera-bundle-1@melbourne-controller-2 galera-bundle-2@melbourne01bng02-overcloud-controller-0 rabbitmq-bundle-0@melbourne-controller-2 rabbitmq-bundle-2@melbourne01bng02-overcloud-controller-0 redis-bundle-1@melbourne-controller-2 redis-bundle-2@melbourne01bng02-overcloud-controller-0 ]

Full List of Resources:
  * Container bundle set: galera-bundle [cluster.common.tag/openstack-mariadb:pcmklatest]:
    * galera-bundle-0 (ocf::heartbeat:galera): Stopped
    * galera-bundle-1 (ocf::heartbeat:galera): Master melbourne-controller-2
    * galera-bundle-2 (ocf::heartbeat:galera): Master melbourne02-overcloud-controller-0
  * Container bundle set: rabbitmq-bundle [cluster.common.tag/openstack-rabbitmq:pcmklatest]:
    * rabbitmq-bundle-0 (ocf::heartbeat:rabbitmq-cluster): Started melbourne-controller-2
    * rabbitmq-bundle-1 (ocf::heartbeat:rabbitmq-cluster): Stopped
    * rabbitmq-bundle-2 (ocf::heartbeat:rabbitmq-cluster): Started melbourne02-overcloud-controller-0
  * Container bundle set: redis-bundle [cluster.common.tag/openstack-redis:pcmklatest]:
    * redis-bundle-0 (ocf::heartbeat:redis): Stopped
    * redis-bundle-1 (ocf::heartbeat:redis): Master melbourne-controller-2
    * redis-bundle-2 (ocf::heartbeat:redis): Slave melbourne02-overcloud-controller-0
  * ip-192.168.6.120 (ocf::heartbeat:IPaddr2): Started melbourne02-overcloud-controller-0
  * ip-172.20.21.120 (ocf::heartbeat:IPaddr2): Started melbourne02-overcloud-controller-0
  * ip-172.16.20.254 (ocf::heartbeat:IPaddr2): Started melbourne-controller-2
  * ip-172.16.20.120 (ocf::heartbeat:IPaddr2): Started melbourne-controller-2
  * Container bundle set: haproxy-bundle [cluster.common.tag/openstack-haproxy:pcmklatest]:
    * haproxy-bundle-podman-0 (ocf::heartbeat:podman): Started melbourne02-overcloud-controller-0
    * haproxy-bundle-podman-1 (ocf::heartbeat:podman): Stopped
    * haproxy-bundle-podman-2 (ocf::heartbeat:podman): Started melbourne-controller-2
  * Container bundle: openstack-cinder-volume [cluster.common.tag/openstack-cinder-volume:pcmklatest]:
    * openstack-cinder-volume-podman-0 (ocf::heartbeat:podman): Started melbourne02-overcloud-controller-0

Failed Resource Actions:
  * openstack-cinder-volume-podman-0_start_0 on melbourne-controller-2 'error' (1): call=175, status='complete', exitreason='podman failed to launch container', last-rc-change='2022-12-06 01:20:22 +10:00', queued=0ms, exec=6798ms
  * openstack-cinder-volume-podman-0_monitor_60000 on melbourne02-overcloud-controller-0 'not running' (7): call=4979, status='complete', exitreason='', last-rc-change='2022-12-07 00:21:35 +10:00', queued=0ms, exec=0ms

Daemon Status:
  corosync: active/enabled
  pacemaker: active/enabled
  pcsd: active/enabled
```
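To confirm programmatically that the removed controller no longer appears as a cluster member, you can test the `Online: [ ... ]` line. A minimal sketch with a hypothetical helper `node_online`, assuming the `pcs status` layout above; bundle entries on the `GuestOnline:` line are never matched, because that line's label field is `GuestOnline:` and its entries carry an `@host` suffix.

```shell
# node_online (hypothetical helper): succeed if <node> appears in the
# "Online: [ ... ]" line of `pcs status` text read from stdin.
node_online() {
    awk -v n="$1" '{
        inlist = 0
        for (i = 1; i <= NF; i++) {
            if ($i == "Online:") inlist = 1      # start of the member list
            else if (inlist && $i == n) found = 1
        }
    } END { exit !found }'
}

# Example usage: should print nothing after the node removal.
#   ssh heat-admin@192.168.6.123 'sudo pcs status' | node_online melbourne-controller-1 && echo "still a member"
```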

- After you remove the old node from the Pacemaker cluster, remove it from the list of known hosts in Pacemaker. Run this command whether or not the node is reachable.

Run it on an active node:

```
(undercloud) [stack@director templates]$ ssh heat-admin@192.168.6.123 "sudo pcs host deauth melbourne-controller-1"
```

- The overcloud database must continue to run during the replacement procedure. To ensure that Pacemaker does not stop Galera during this procedure, select a running Controller node and run the following command on the undercloud with the IP address of the Controller node:

```
(undercloud) [stack@director templates]$ ssh heat-admin@192.168.6.123 "sudo pcs resource unmanage galera-bundle"
```


- Validate that the Galera cluster is now unmanaged:

```
(undercloud) [stack@director templates]$ ssh heat-admin@192.168.6.123 "sudo pcs status"
Cluster name: tripleo_cluster
Cluster Summary:
  * Stack: corosync
  * Current DC: melbourne-controller-2 (version 2.0.3-5.el8_2.3-4b1f869f0f) - partition with quorum
  * Last updated: Wed Jul 7 00:24:43 2022
  * Last change: Wed Jul 7 00:24:33 2022 by root via cibadmin on melbourne-controller-2
  * 11 nodes configured
  * 35 resource instances configured

Node List:
  * GuestNode galera-bundle-1@melbourne-controller-2: maintenance
  * GuestNode galera-bundle-2@melbourne01bng02-overcloud-controller-0: maintenance
  * Online: [ melbourne-controller-2 melbourne02-overcloud-controller-0 ]
  * GuestOnline: [ rabbitmq-bundle-0@melbourne-controller-2 rabbitmq-bundle-2@melbourne01bng02-overcloud-controller-0 redis-bundle-1@melbourne-controller-2 redis-bundle-2@melbourne01bng02-overcloud-controller-0 ]

Full List of Resources:
  * Container bundle set: galera-bundle [cluster.common.tag/openstack-mariadb:pcmklatest] (unmanaged):
    * galera-bundle-0 (ocf::heartbeat:galera): Stopped (unmanaged)
    * galera-bundle-1 (ocf::heartbeat:galera): Master melbourne-controller-2 (unmanaged)
    * galera-bundle-2 (ocf::heartbeat:galera): Master melbourne02-overcloud-controller-0 (unmanaged)
  * Container bundle set: rabbitmq-bundle [cluster.common.tag/openstack-rabbitmq:pcmklatest]:
    * rabbitmq-bundle-0 (ocf::heartbeat:rabbitmq-cluster): Started melbourne-controller-2
    * rabbitmq-bundle-1 (ocf::heartbeat:rabbitmq-cluster): Stopped
    * rabbitmq-bundle-2 (ocf::heartbeat:rabbitmq-cluster): Started melbourne02-overcloud-controller-0
  * Container bundle set: redis-bundle [cluster.common.tag/openstack-redis:pcmklatest]:
    * redis-bundle-0 (ocf::heartbeat:redis): Stopped
    * redis-bundle-1 (ocf::heartbeat:redis): Master melbourne-controller-2
    * redis-bundle-2 (ocf::heartbeat:redis): Slave melbourne02-overcloud-controller-0
  * ip-192.168.6.120 (ocf::heartbeat:IPaddr2): Started melbourne02-overcloud-controller-0
  * ip-172.20.21.120 (ocf::heartbeat:IPaddr2): Started melbourne02-overcloud-controller-0
  * ip-172.16.20.254 (ocf::heartbeat:IPaddr2): Started melbourne-controller-2
  * ip-172.16.20.120 (ocf::heartbeat:IPaddr2): Started melbourne-controller-2
  * Container bundle set: haproxy-bundle [cluster.common.tag/openstack-haproxy:pcmklatest]:
    * haproxy-bundle-podman-0 (ocf::heartbeat:podman): Started melbourne02-overcloud-controller-0
    * haproxy-bundle-podman-1 (ocf::heartbeat:podman): Stopped
    * haproxy-bundle-podman-2 (ocf::heartbeat:podman): Started melbourne-controller-2
  * Container bundle: openstack-cinder-volume [cluster.common.tag/openstack-cinder-volume:pcmklatest]:
    * openstack-cinder-volume-podman-0 (ocf::heartbeat:podman): Started melbourne02-overcloud-controller-0

Failed Resource Actions:
  * openstack-cinder-volume-podman-0_start_0 on melbourne-controller-2 'error' (1): call=175, status='complete', exitreason='podman failed to launch container', last-rc-change='2022-12-06 01:20:22 +10:00', queued=0ms, exec=6798ms
  * openstack-cinder-volume-podman-0_monitor_60000 on melbourne02-overcloud-controller-0 'not running' (7): call=4991, status='complete', exitreason='', last-rc-change='2022-12-07 00:24:41 +10:00', queued=0ms, exec=0ms

Daemon Status:
  corosync: active/enabled
  pacemaker: active/enabled
  pcsd: active/enabled
```
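The `(unmanaged)` flag on the galera-bundle line is what matters here, and it can be checked in a script as well. A minimal sketch with a hypothetical helper `galera_unmanaged`, assuming the `pcs status` layout above; after the clean-up step at the end of the procedure (`pcs resource manage galera-bundle`), the same helper should fail.

```shell
# galera_unmanaged (hypothetical helper): succeed if the galera-bundle line of
# `pcs status` text on stdin is flagged "(unmanaged)".
galera_unmanaged() {
    grep -F 'bundle set: galera-bundle ' | grep -qF '(unmanaged)'
}

# Example usage from the undercloud:
#   ssh heat-admin@192.168.6.123 'sudo pcs status' | galera_unmanaged && echo "galera is unmanaged"
```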

- Power off the virtual controller node:

```
[heat-admin@melbourne-controller-1 ~]$ sudo poweroff
Connection to 192.168.6.122 closed by remote host.
Connection to 192.168.6.122 closed.
```

- Now the node replacement starts. Identify the Ironic node behind the old controller instance and put it into maintenance mode:

```
(undercloud) [stack@director templates]$ source ~/stackrc
(undercloud) [stack@director templates]$ INSTANCE=$(openstack server list --name melbourne-controller-1 -f value -c ID)
(undercloud) [stack@director templates]$ NODE=$(openstack baremetal node list -f csv --quote minimal | grep $INSTANCE | cut -f1 -d,)
(undercloud) [stack@director templates]$ openstack baremetal node maintenance set $NODE
```
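The `grep $INSTANCE | cut` pipeline above matches the instance UUID anywhere in a CSV line. A slightly stricter sketch compares only the Instance UUID column and then confirms maintenance mode. It assumes the default column order of `openstack baremetal node list` (UUID, Name, Instance UUID, ...) and that no field contains a comma; `node_for_instance` is a hypothetical helper name.

```shell
# node_for_instance (hypothetical helper): read the CSV output of
# `openstack baremetal node list -f csv --quote minimal` on stdin and print
# the node UUID whose Instance UUID column (3rd field) equals <instance id>.
node_for_instance() {
    awk -F, -v inst="$1" 'NR > 1 && $3 == inst { print $1 }'
}

# Example usage on the undercloud:
#   NODE=$(openstack baremetal node list -f csv --quote minimal | node_for_instance "$INSTANCE")
#   openstack baremetal node maintenance set "$NODE"
#   openstack baremetal node show "$NODE" -f value -c maintenance   # expect True
```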

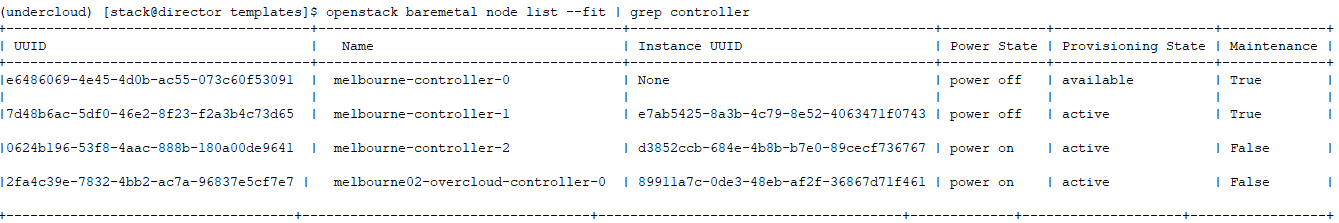

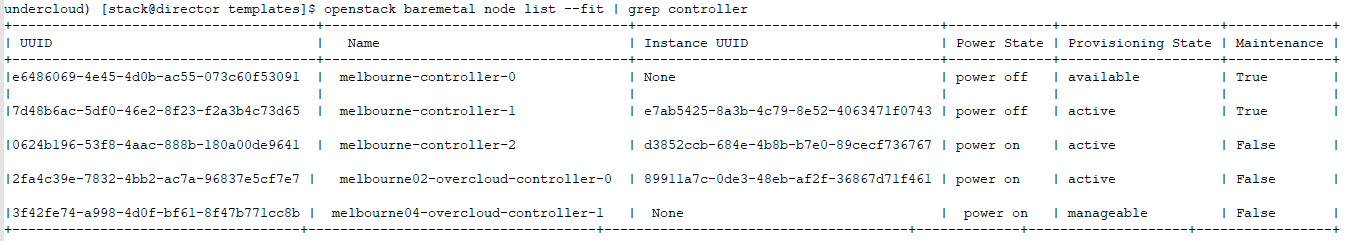

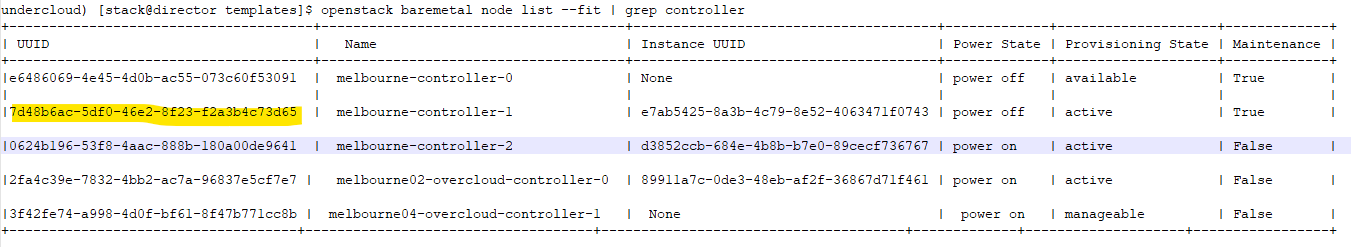

```
(undercloud) [stack@director templates]$ openstack baremetal node list --fit-width
```

- Prepare the registration data for the bare-metal node(s) on the OSP 16 director and store it in a new node definition file (in this example, controller_nodes.json). Make sure you use the right index value in the capabilities (`node:controller-N`).

```
(undercloud) [stack@director golden]$ cat controller_nodes.json
{
  "nodes": [
    {
      "mac": [
        "31:e1:71:5d:eb:9d"
      ],
      "name": "melbourne01bng02-overcloud-controller-0",
      "capabilities": "node:controller-3,profile:control,boot_option:local",
      "pm_addr": "172.20.21.5",
      "arch": "x86_64",
      "pm_password": "Bharat123",
      "pm_type": "pxe_ilo",
      "pm_user": "root"
    },
    {
      "mac": [
        "31:e1:71:5d:fb:49"
      ],
      "name": "melbourne01bng04-overcloud-controller-1",
      "capabilities": "node:controller-4,profile:control,boot_option:local",
      "pm_addr": "172.20.21.7",
      "arch": "x86_64",
      "pm_password": "Bharat123",
      "pm_type": "pxe_ilo",
      "pm_user": "root"
    }
  ]
}
```
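Each `node:controller-N` capability must be unique and must match an `overcloud-controller-N` entry in the `HostnameMap` (indexes 3 and 4 in this lab). A quick sketch that lists the controller capability indexes in the node file and fails on duplicates; `controller_cap_indexes` is a hypothetical name, it uses `grep -o` (available in GNU and BSD grep), and running `python3 -m json.tool controller_nodes.json` first is a sensible JSON syntax check.

```shell
# controller_cap_indexes (hypothetical helper): print the N of every
# "node:controller-N" capability found in a node definition file, one per
# line, and return non-zero if any index is used more than once.
controller_cap_indexes() {
    idx=$(grep -o 'node:controller-[0-9]*' "$1" | sed 's/node:controller-//' | sort -n)
    printf '%s\n' "$idx"
    # uniq -d prints only repeated lines; an empty result means no duplicates.
    [ -z "$(printf '%s\n' "$idx" | uniq -d)" ]
}

# Example usage:
#   controller_cap_indexes controller_nodes.json
```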

- Update node-info.yaml and predictable_ips.yaml:

```
(undercloud) [stack@director templates]$ cat node-info.yaml
parameter_defaults:
  OvercloudControllerFlavor: control
  OvercloudComputeFlavor: compute
  ControllerCount: 3
  ComputeCount: 7
  ComputemmeCount: 2
  ComputeSriovCount: 2
  ComputeSriovLargeCount: 0
  ComputeExtraConfig:
    nova::cpu_allocation_ratio: '1.0'
    nova::compute::force_config_drive: false
  ControllerSchedulerHints:
    'capabilities:node': 'controller-%index%'
  ComputeSriovSchedulerHints:
    'capabilities:node': 'computesriov-%index%'
  ComputeSriovLargeSchedulerHints:
    'capabilities:node': 'computesriovlarge-%index%'
  ComputeSchedulerHints:
    'capabilities:node': 'compute-%index%'
  ComputemmeSchedulerHints:
    'capabilities:node': 'computemme-%index%'
  HostnameMap:
    overcloud-controller-0: removed-controller-0
    overcloud-controller-1: removed-controller-1
    overcloud-controller-2: melbourne-controller-2
    overcloud-controller-3: melbourne02-overcloud-controller-0
    overcloud-controller-4: melbourne04-overcloud-controller-1
```

```
(undercloud) [stack@director templates]$ cat predictable_ips.yaml
parameter_defaults:
  ControllerIPs:
    ctlplane:
      - deleted
      - deleted
      - 192.168.6.123
      - 192.168.6.121
      - 192.168.6.122
    external:
      - deleted
      - deleted
      - 172.20.21.123
      - 172.20.21.121
      - 172.20.21.122
    internal_api:
      - deleted
      - deleted
      - 172.16.20.123
      - 172.16.20.121
      - 172.16.20.122
    tenant:
      - deleted
      - deleted
      - 10.2.1.123
      - 10.2.1.121
      - 10.2.1.122
```
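The position of each entry in these lists is the node index: `overcloud-controller-4` in the `HostnameMap` takes the fifth entry (index 4) of each network list, so the new bare-metal controller keeps 192.168.6.122. The sketch below reads that mapping back from the file to double-check it; it assumes the simple indented layout above and is not a general YAML parser, and `ctlplane_ip_for_index` is a hypothetical name.

```shell
# ctlplane_ip_for_index (hypothetical helper): read predictable_ips.yaml on
# stdin and print the ctlplane entry at position <index> (0-based), i.e. the
# address assigned to overcloud-controller-<index>.
ctlplane_ip_for_index() {
    awk -v want="$1" '
        /^[ \t]*ctlplane:[ \t]*$/ { inlist = 1; n = 0; next }
        inlist && /^[ \t]*-[ \t]/ {
            if (n == want) { print $2; exit }
            n++
            next
        }
        inlist { inlist = 0 }   # any other line ends the ctlplane list'
}

# Example usage:
#   ctlplane_ip_for_index 4 < predictable_ips.yaml
```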

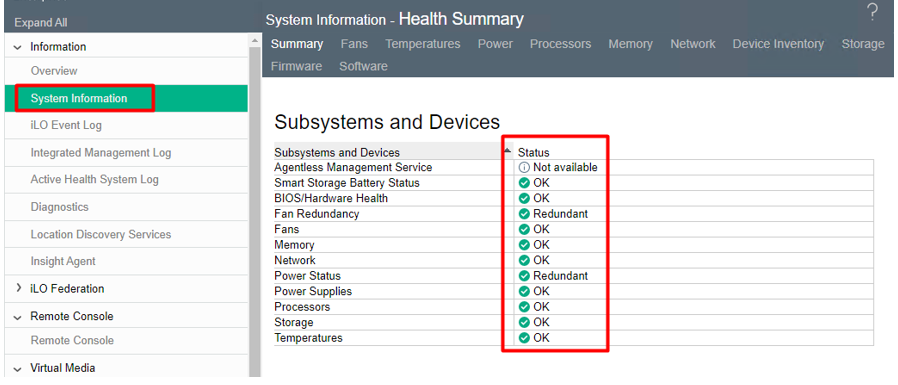

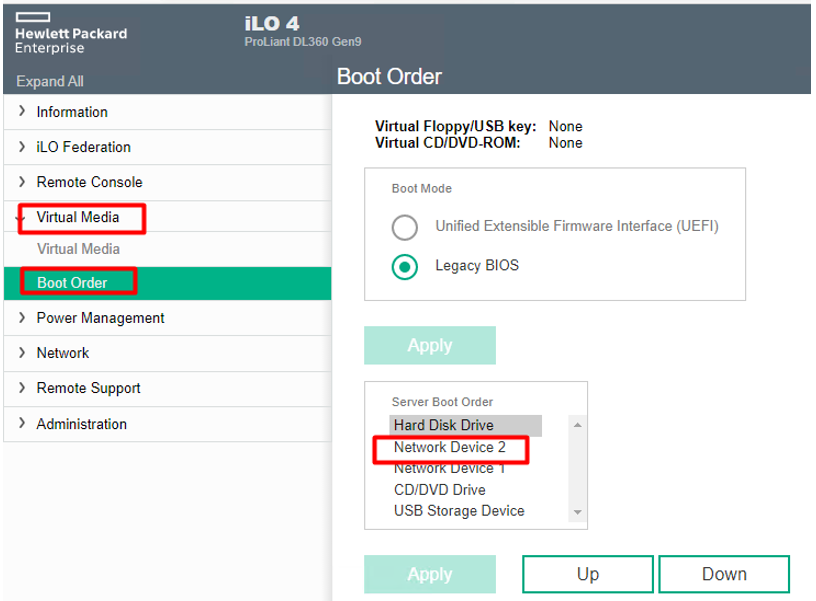

- Hardware validation

Verify through the iLO web console that the status of all devices is OK.

- Verify that Network Device 2, which is mapped to the provisioning NIC for PXE boot, is one of the first boot devices.

- Import the baremetal node

```
(undercloud) [stack@director ]$ openstack overcloud node import controller_nodes.json
Waiting for messages on queue 'tripleo' with no timeout.
1 node(s) successfully moved to the "manageable" state.
Successfully registered node UUID 2fa4c39e-7832-4bb2-ac7a-96837e5cf7e7
Successfully registered node UUID 3f42fe74-a998-4d0f-bf61-8f47b771cc8b
```

- Introspect the imported node. Double-check that the node is in the `manageable` state and that it is the node you want to integrate into the cluster:

```
openstack overcloud node introspect --all-manageable --provide
```

- Determine the UUID of the node that you want to remove, store it in the NODEID variable, and identify the Heat resource ID to use as the node index. Set NODE to the name of the node that you want to remove:

```
(undercloud) [stack@director ]$ NODE=melbourne-controller-1
(undercloud) [stack@director ]$ NODEID=$(openstack server list -f value -c ID --name $NODE)
(undercloud) [stack@director golden]$ openstack stack resource show overcloud ControllerServers -f json -c attributes | jq --arg NODEID "$NODEID" -c '.attributes.value | keys[] as $k | if .[$k] == $NODEID then "Node index \($k) for \(.[$k])" else empty end'
"Node index 1 for e7ab5425-8a3b-4c79-8e52-4063471f0743"
```

- Create the following environment file ~/templates/remove-controller.yaml and include the node index of the Controller node that you want to remove:

```
parameters:
  ControllerRemovalPolicies:
    [{'resource_list': ['2']}]
```

- Note: the index in this file is deleted and not used again; however, the IP addresses and hostnames stay the same, because they are assigned from predictable_ips.yaml and the HostnameMap. This file is used only once, when a specific controller is removed.
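To avoid copying the index by hand, the number printed by the jq command can be turned directly into the environment file. A minimal sketch; both helper names are hypothetical, and the parsing assumes the exact `"Node index N for <uuid>"` string produced by the jq filter used in this post.

```shell
# node_index_from_jq (hypothetical helper): extract N from the jq output line
# "Node index N for <uuid>" read on stdin.
node_index_from_jq() {
    sed -n 's/.*Node index \([0-9][0-9]*\) for .*/\1/p'
}

# removal_policy_yaml (hypothetical helper): print a remove-controller.yaml
# body that removes the Controller with the given Heat resource index.
removal_policy_yaml() {
    printf "parameters:\n  ControllerRemovalPolicies:\n    [{'resource_list': ['%s']}]\n" "$1"
}

# Example usage on the undercloud (same jq filter as in the previous step):
#   INDEX=$(openstack stack resource show overcloud ControllerServers -f json -c attributes \
#     | jq --arg NODEID "$NODEID" -c '.attributes.value | keys[] as $k | if .[$k] == $NODEID then "Node index \($k) for \(.[$k])" else empty end' \
#     | node_index_from_jq)
#   removal_policy_yaml "$INDEX" > ~/templates/remove-controller.yaml
```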

- We have already updated the node-info.yaml and predictable_ips.yaml files. Now delete the control plane port of the virtual controller being replaced:

```
(undercloud) [stack@director templates]$ openstack port list | fgrep 192.168.6.123
| 220a7af0-55a3-4236-93a0-cf9bad6c4432 | Controller-port-0 | 52:54:00:f1:e6:9a | ip_address='192.168.6.123', subnet_id='cd9c1791-1d04-4c19-89d3-f2451f44325189' | ACTIVE |
(undercloud) [stack@director templates]$ openstack port delete 220a7af0-55a3-4236-93a0-cf9bad6c4432
(undercloud) [stack@director templates]$ openstack port list | fgrep 192.168.6.123
```

The final `openstack port list` returns no line for that address once the port is deleted.
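A plain `fgrep` on an address also matches any longer address that starts with it (for example 192.168.6.12 matches 192.168.6.121 to .129), which is risky right before a delete. A stricter sketch matches the full `ip_address='...'` token in the `openstack port list` table and prints only the port ID; `port_id_for_ip` and `OLD_CTLPLANE_IP` are hypothetical names, and the table layout shown above is assumed. Filtering server-side with `openstack port list --fixed-ip ip-address=<IP>` is another option.

```shell
# port_id_for_ip (hypothetical helper): read `openstack port list` table output
# on stdin and print the ID of the port whose fixed IP is exactly <ip>.
port_id_for_ip() {
    awk -F'|' -v ip="ip_address='$1'" 'index($0, ip) {
        id = $2
        gsub(/[ \t]/, "", id)   # strip the padding around the ID cell
        print id
    }'
}

# Example usage on the undercloud (OLD_CTLPLANE_IP = ctlplane IP of the
# controller being replaced):
#   PORT=$(openstack port list | port_id_for_ip "$OLD_CTLPLANE_IP")
#   openstack port delete "$PORT"
```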

- For the first and the second Controller to migrate

Fix nic layout configurations for baremetal nodes with sed in Ansible Playbooks during the deployment

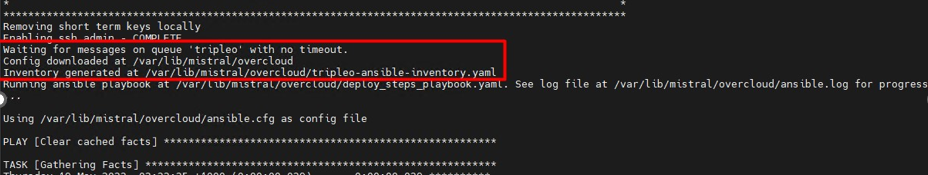

When you run the deployment (sh scripts/overcloud-deploy.sh), the virtual controller configuration is applied to the new baremetal controllers. To avoid this, during the running you must to wait when the playbooks are finished and fix the configurations in the file /var/lib/mistral/overcloud/Controller/{{xxx-overcloud-controller-X}}/NetworkConfig

To know when to apply the fix, use one of these two options:

- Watch the deployment log until the following message appears:

“Config downloaded at /var/lib/mistral/overcloud/”

- Watch the generated files for interface names that belong to the virtual controllers, such as ens3 (the ctlplane interface). As soon as the following command prints any output, run the fix:

# watch fgrep ens3 /var/lib/mistral/overcloud/Controller/*-overcloud-controller-*/*
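Instead of watching the output by eye, the second option can be scripted as a polling loop that returns as soon as a virtual interface name appears. A sketch under these assumptions: bash, GNU grep, the directory layout shown above, and a hypothetical function name. Unlike the `fgrep` above, it matches whole words only (`-w`), so ens3 does not also match ens30.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll the config-download directory until a virtual
# interface name shows up in any per-controller directory, or time out.
# Arguments: directory, interface name, timeout (s), poll interval (s).
wait_for_virtual_nics() {
  local dir=${1:-/var/lib/mistral/overcloud/Controller}
  local iface=${2:-ens3} timeout=${3:-3600} interval=${4:-5}
  local waited=0
  while [ "$waited" -lt "$timeout" ]; do
    # -r: search inside the node directories; -s: silence "no such file"
    # while they do not exist yet; -w: whole-word match only
    if grep -rqsw "$iface" "$dir"/*-overcloud-controller-*; then
      echo "found $iface under $dir after ${waited}s"
      return 0
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
  echo "timed out after ${timeout}s waiting for $iface" >&2
  return 1
}
```

Run it as root in a second terminal while the deployment script runs, for example `wait_for_virtual_nics && echo "apply the NIC fix now"`.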

Commands to fix the playbooks:

$ sudo -i

# cd /var/lib/mistral/overcloud/Controller/ ; \
sed -i 's/ens3/eno1/g' *-overcloud-controller-*/NetworkConfig; \
sed -i 's/ens4/eno2/g' *-overcloud-controller-*/NetworkConfig; \
sed -i 's/ens6/eno50/g' *-overcloud-controller-*/NetworkConfig; \
sed -i 's/ens5/eno3/g' *-overcloud-controller-*/NetworkConfig; \
sed -i 's/ens7/eno51/g' *-overcloud-controller-*/NetworkConfig

To verify the fix (no output means no virtual interface names remain):

fgrep ens3 /var/lib/mistral/overcloud/Controller/*-overcloud-controller-*/*
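The sed commands and the check can be wrapped into one function that fails loudly if any virtual name survives. This sketch assumes GNU sed (for the `\b` word boundaries, which the original commands do not use) and the same ens-to-eno mapping as above, which is specific to this lab's hardware; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the sed commands above: apply the same
# virtual-to-baremetal NIC mapping, then verify nothing virtual is left.
fix_controller_nics() {
  local dir=${1:-/var/lib/mistral/overcloud/Controller}
  local files=("$dir"/*-overcloud-controller-*/NetworkConfig)
  # If the glob matched nothing, bash leaves the pattern unexpanded
  if [ ! -e "${files[0]}" ]; then
    echo "no NetworkConfig files under $dir" >&2
    return 1
  fi
  # Same mapping as above; \b (GNU sed) avoids rewriting e.g. ens30
  sed -i -e 's/\bens3\b/eno1/g' -e 's/\bens4\b/eno2/g' -e 's/\bens5\b/eno3/g' \
         -e 's/\bens6\b/eno50/g' -e 's/\bens7\b/eno51/g' "${files[@]}"
  # Verification: no whole-word ens3..ens7 may remain
  if grep -Eqw 'ens[3-7]' "${files[@]}"; then
    echo "virtual interface names still present" >&2
    return 1
  fi
  echo "patched ${#files[@]} NetworkConfig file(s)"
}
```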

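- Clean up after the Controller node replacement: put galera-bundle back under Pacemaker management, then re-run pcs status to follow its recovery (in the outputs below, galera-bundle-1 moves from Stopped to Starting to Promoting).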

[heat-admin@melbourne01bng03-overcloud-controller-2 ~]$ sudo pcs resource manage galera-bundle

[heat-admin@melbourne01bng03-overcloud-controller-2 ~]$ sudo pcs status

Cluster name: tripleo_cluster
Cluster Summary:
  * Stack: corosync
  * Current DC: melbourne02-overcloud-controller-0 (version 2.0.3-5.el8_2.3-4b1f869f0f) - partition with quorum
  * Last updated: Thu Jul 8 02:19:07 2022
  * Last change: Thu Jul 8 02:19:03 2022 by root via cibadmin on melbourne03-overcloud-controller-2
  * 12 nodes configured
  * 35 resource instances configured

Node List:
  * Online: [ melbourne02-overcloud-controller-0 melbourne03-overcloud-controller-2 melbourne04-overcloud-controller-1 ]
  * GuestOnline: [ galera-bundle-0@melbourne01bng04-overcloud-controller-1 galera-bundle-1@melbourne01bng03-overcloud-controller-2 galera-bundle-2@melbourne01bng02-overcloud-controller-0 rabbitmq-bundle-0@melbourne01bng03-overcloud-controller-2 rabbitmq-bundle-1@melbourne01bng04-overcloud-controller-1 rabbitmq-bundle-2@melbourne01bng02-overcloud-controller-0 redis-bundle-0@melbourne01bng04-overcloud-controller-1 redis-bundle-1@melbourne01bng03-overcloud-controller-2 redis-bundle-2@melbourne01bng02-overcloud-controller-0 ]

Full List of Resources:
  * Container bundle set: galera-bundle [cluster.common.tag/openstack-mariadb:pcmklatest]:
    * galera-bundle-0 (ocf::heartbeat:galera): Master melbourne04-overcloud-controller-1
    * galera-bundle-1 (ocf::heartbeat:galera): Stopped melbourne03-overcloud-controller-2
    * galera-bundle-2 (ocf::heartbeat:galera): Master melbourne02-overcloud-controller-0
  * Container bundle set: rabbitmq-bundle [cluster.common.tag/openstack-rabbitmq:pcmklatest]:
    * rabbitmq-bundle-0 (ocf::heartbeat:rabbitmq-cluster): Started melbourne03-overcloud-controller-2
    * rabbitmq-bundle-1 (ocf::heartbeat:rabbitmq-cluster): Started melbourne04-overcloud-controller-1
    * rabbitmq-bundle-2 (ocf::heartbeat:rabbitmq-cluster): Started melbourne02-overcloud-controller-0
  * Container bundle set: redis-bundle [cluster.common.tag/openstack-redis:pcmklatest]:
    * redis-bundle-0 (ocf::heartbeat:redis): Master melbourne04-overcloud-controller-1
    * redis-bundle-1 (ocf::heartbeat:redis): Slave melbourne03-overcloud-controller-2
    * redis-bundle-2 (ocf::heartbeat:redis): Slave melbourne02-overcloud-controller-0
  * ip-192.168.6.120 (ocf::heartbeat:IPaddr2): Started melbourne03-overcloud-controller-2
  * ip-172.20.21.120 (ocf::heartbeat:IPaddr2): Started melbourne03-overcloud-controller-2
  * ip-172.16.20.254 (ocf::heartbeat:IPaddr2): Started melbourne02-overcloud-controller-0
  * ip-172.16.20.120 (ocf::heartbeat:IPaddr2): Started melbourne03-overcloud-controller-2
  * Container bundle set: haproxy-bundle [cluster.common.tag/openstack-haproxy:pcmklatest]:
    * haproxy-bundle-podman-0 (ocf::heartbeat:podman): Started melbourne03-overcloud-controller-2
    * haproxy-bundle-podman-1 (ocf::heartbeat:podman): Started melbourne02-overcloud-controller-0
    * haproxy-bundle-podman-2 (ocf::heartbeat:podman): Started melbourne04-overcloud-controller-1
  * Container bundle: openstack-cinder-volume [cluster.common.tag/openstack-cinder-volume:pcmklatest]:
    * openstack-cinder-volume-podman-0 (ocf::heartbeat:podman): Started melbourne03-overcloud-controller-2

Failed Resource Actions:
  * openstack-cinder-volume-podman-0_start_0 on melbourne02-overcloud-controller-0 'error' (1): call=5420, status='complete', exitreason='Newly created podman container exited after start', last-rc-change='2022-12-07 02:12:33 +10:00', queued=0ms, exec=38732ms
  * openstack-cinder-volume-podman-0_start_0 on melbourne04-overcloud-controller-1 'error' (1): call=5626, status='complete', exitreason='Newly created podman container exited after start', last-rc-change='2022-12-08 02:04:07 +10:00', queued=0ms, exec=9295ms
  * openstack-cinder-volume-podman-0_monitor_60000 on melbourne03-overcloud-controller-2 'not running' (7): call=111, status='complete', exitreason='', last-rc-change='2022-12-08 02:18:05 +10:00', queued=0ms, exec=0ms

Daemon Status:
  corosync: active/enabled
  pacemaker: active/enabled
  pcsd: active/enabled

[heat-admin@melbourne01bng03-overcloud-controller-2 ~]$ sudo pcs status

Cluster name: tripleo_cluster
Cluster Summary:
  * Stack: corosync
  * Current DC: melbourne02-overcloud-controller-0 (version 2.0.3-5.el8_2.3-4b1f869f0f) - partition with quorum
  * Last updated: Thu Jul 8 02:19:13 2022
  * Last change: Thu Jul 8 02:19:03 2022 by root via cibadmin on melbourne03-overcloud-controller-2
  * 12 nodes configured
  * 35 resource instances configured

Node List:
  * Online: [ melbourne02-overcloud-controller-0 melbourne03-overcloud-controller-2 melbourne04-overcloud-controller-1 ]
  * GuestOnline: [ galera-bundle-0@melbourne01bng04-overcloud-controller-1 galera-bundle-1@melbourne01bng03-overcloud-controller-2 galera-bundle-2@melbourne01bng02-overcloud-controller-0 rabbitmq-bundle-0@melbourne01bng03-overcloud-controller-2 rabbitmq-bundle-1@melbourne01bng04-overcloud-controller-1 rabbitmq-bundle-2@melbourne01bng02-overcloud-controller-0 redis-bundle-0@melbourne01bng04-overcloud-controller-1 redis-bundle-1@melbourne01bng03-overcloud-controller-2 redis-bundle-2@melbourne01bng02-overcloud-controller-0 ]

Full List of Resources:
  * Container bundle set: galera-bundle [cluster.common.tag/openstack-mariadb:pcmklatest]:
    * galera-bundle-0 (ocf::heartbeat:galera): Master melbourne04-overcloud-controller-1
    * galera-bundle-1 (ocf::heartbeat:galera): Starting melbourne03-overcloud-controller-2
    * galera-bundle-2 (ocf::heartbeat:galera): Master melbourne02-overcloud-controller-0
  * Container bundle set: rabbitmq-bundle [cluster.common.tag/openstack-rabbitmq:pcmklatest]:
    * rabbitmq-bundle-0 (ocf::heartbeat:rabbitmq-cluster): Started melbourne03-overcloud-controller-2
    * rabbitmq-bundle-1 (ocf::heartbeat:rabbitmq-cluster): Started melbourne04-overcloud-controller-1
    * rabbitmq-bundle-2 (ocf::heartbeat:rabbitmq-cluster): Started melbourne02-overcloud-controller-0
  * Container bundle set: redis-bundle [cluster.common.tag/openstack-redis:pcmklatest]:
    * redis-bundle-0 (ocf::heartbeat:redis): Master melbourne04-overcloud-controller-1
    * redis-bundle-1 (ocf::heartbeat:redis): Slave melbourne03-overcloud-controller-2
    * redis-bundle-2 (ocf::heartbeat:redis): Slave melbourne02-overcloud-controller-0
  * ip-192.168.6.120 (ocf::heartbeat:IPaddr2): Started melbourne03-overcloud-controller-2
  * ip-172.20.21.120 (ocf::heartbeat:IPaddr2): Started melbourne03-overcloud-controller-2
  * ip-172.16.20.254 (ocf::heartbeat:IPaddr2): Started melbourne02-overcloud-controller-0
  * ip-172.16.20.120 (ocf::heartbeat:IPaddr2): Started melbourne03-overcloud-controller-2
  * Container bundle set: haproxy-bundle [cluster.common.tag/openstack-haproxy:pcmklatest]:
    * haproxy-bundle-podman-0 (ocf::heartbeat:podman): Started melbourne03-overcloud-controller-2
    * haproxy-bundle-podman-1 (ocf::heartbeat:podman): Started melbourne02-overcloud-controller-0
    * haproxy-bundle-podman-2 (ocf::heartbeat:podman): Started melbourne04-overcloud-controller-1
  * Container bundle: openstack-cinder-volume [cluster.common.tag/openstack-cinder-volume:pcmklatest]:
    * openstack-cinder-volume-podman-0 (ocf::heartbeat:podman): FAILED melbourne03-overcloud-controller-2

Failed Resource Actions:
  * openstack-cinder-volume-podman-0_start_0 on melbourne02-overcloud-controller-0 'error' (1): call=5420, status='complete', exitreason='Newly created podman container exited after start', last-rc-change='2022-12-07 02:12:33 +10:00', queued=0ms, exec=38732ms
  * openstack-cinder-volume-podman-0_start_0 on melbourne04-overcloud-controller-1 'error' (1): call=5626, status='complete', exitreason='Newly created podman container exited after start', last-rc-change='2022-12-08 02:04:07 +10:00', queued=0ms, exec=9295ms
  * openstack-cinder-volume-podman-0_monitor_60000 on melbourne03-overcloud-controller-2 'not running' (7): call=115, status='complete', exitreason='', last-rc-change='2022-12-08 02:19:07 +10:00', queued=0ms, exec=0ms

Daemon Status:
  corosync: active/enabled
  pacemaker: active/enabled
  pcsd: active/enabled

[heat-admin@melbourne01bng03-overcloud-controller-2 ~]$ sudo pcs status

Cluster name: tripleo_cluster
Cluster Summary:
  * Stack: corosync
  * Current DC: melbourne02-overcloud-controller-0 (version 2.0.3-5.el8_2.3-4b1f869f0f) - partition with quorum
  * Last updated: Thu Jul 8 02:19:21 2022
  * Last change: Thu Jul 8 02:19:03 2022 by root via cibadmin on melbourne03-overcloud-controller-2
  * 12 nodes configured
  * 35 resource instances configured

Node List:
  * Online: [ melbourne02-overcloud-controller-0 melbourne03-overcloud-controller-2 melbourne04-overcloud-controller-1 ]
  * GuestOnline: [ galera-bundle-0@melbourne01bng04-overcloud-controller-1 galera-bundle-1@melbourne01bng03-overcloud-controller-2 galera-bundle-2@melbourne01bng02-overcloud-controller-0 rabbitmq-bundle-0@melbourne01bng03-overcloud-controller-2 rabbitmq-bundle-1@melbourne01bng04-overcloud-controller-1 rabbitmq-bundle-2@melbourne01bng02-overcloud-controller-0 redis-bundle-0@melbourne01bng04-overcloud-controller-1 redis-bundle-1@melbourne01bng03-overcloud-controller-2 redis-bundle-2@melbourne01bng02-overcloud-controller-0 ]

Full List of Resources:
  * Container bundle set: galera-bundle [cluster.common.tag/openstack-mariadb:pcmklatest]:
    * galera-bundle-0 (ocf::heartbeat:galera): Master melbourne04-overcloud-controller-1
    * galera-bundle-1 (ocf::heartbeat:galera): Promoting melbourne03-overcloud-controller-2
    * galera-bundle-2 (ocf::heartbeat:galera): Master melbourne02-overcloud-controller-0
  * Container bundle set: rabbitmq-bundle [cluster.common.tag/openstack-rabbitmq:pcmklatest]:
    * rabbitmq-bundle-0 (ocf::heartbeat:rabbitmq-cluster): Started melbourne03-overcloud-controller-2
    * rabbitmq-bundle-1 (ocf::heartbeat:rabbitmq-cluster): Started melbourne04-overcloud-controller-1
    * rabbitmq-bundle-2 (ocf::heartbeat:rabbitmq-cluster): Started melbourne02-overcloud-controller-0
  * Container bundle set: redis-bundle [cluster.common.tag/openstack-redis:pcmklatest]:
    * redis-bundle-0 (ocf::heartbeat:redis): Master melbourne04-overcloud-controller-1
    * redis-bundle-1 (ocf::heartbeat:redis): Slave melbourne03-overcloud-controller-2
    * redis-bundle-2 (ocf::heartbeat:redis): Slave melbourne02-overcloud-controller-0
  * ip-192.168.6.120 (ocf::heartbeat:IPaddr2): Started melbourne03-overcloud-controller-2
  * ip-172.20.21.120 (ocf::heartbeat:IPaddr2): Started melbourne03-overcloud-controller-2
  * ip-172.16.20.254 (ocf::heartbeat:IPaddr2): Started melbourne02-overcloud-controller-0
  * ip-172.16.20.120 (ocf::heartbeat:IPaddr2): Started melbourne03-overcloud-controller-2
  * Container bundle set: haproxy-bundle [cluster.common.tag/openstack-haproxy:pcmklatest]:
    * haproxy-bundle-podman-0 (ocf::heartbeat:podman): Started melbourne03-overcloud-controller-2
    * haproxy-bundle-podman-1 (ocf::heartbeat:podman): Started melbourne02-overcloud-controller-0
    * haproxy-bundle-podman-2 (ocf::heartbeat:podman): Started melbourne04-overcloud-controller-1
  * Container bundle: openstack-cinder-volume [cluster.common.tag/openstack-cinder-volume:pcmklatest]:
    * openstack-cinder-volume-podman-0 (ocf::heartbeat:podman): Started melbourne03-overcloud-controller-2

Failed Resource Actions:
  * openstack-cinder-volume-podman-0_start_0 on melbourne02-overcloud-controller-0 'error' (1): call=5420, status='complete', exitreason='Newly created podman container exited after start', last-rc-change='2022-12-07 02:12:33 +10:00', queued=0ms, exec=38732ms
  * openstack-cinder-volume-podman-0_start_0 on melbourne04-overcloud-controller-1 'error' (1): call=5626, status='complete', exitreason='Newly created podman container exited after start', last-rc-change='2022-12-08 02:04:07 +10:00', queued=0ms, exec=9295ms
  * openstack-cinder-volume-podman-0_monitor_60000 on melbourne03-overcloud-controller-2 'not running' (7): call=115, status='complete', exitreason='', last-rc-change='2022-12-08 02:19:07 +10:00', queued=0ms, exec=0ms

Daemon Status:
  corosync: active/enabled
  pacemaker: active/enabled
  pcsd: active/enabled
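Rather than re-running `pcs status` by hand until the Galera replica on the replaced node reaches Master, the output can be parsed in a loop. The sketch below assumes the `pcs status` line format shown above and that it runs on a controller where the user can sudo; `galera_all_master` and `wait_for_galera` are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `pcs status` text on stdin and succeed only when
# every galera-bundle replica reports Master.
galera_all_master() {
  awk '
    /galera-bundle-[0-9]+[ \t]+\(ocf::heartbeat:galera\):/ {
      total++
      # Keep only the text after "(ocf::heartbeat:galera):", i.e. the role
      line = $0
      sub(/.*\(ocf::heartbeat:galera\):[ \t]*/, "", line)
      if (line ~ /^Master/) masters++
      else print "not ready: " $0
    }
    # Exit 0 only if at least one replica was seen and all were Master
    END { exit !(total > 0 && masters == total) }
  '
}

# Poll the local cluster until Galera is fully promoted (or give up).
wait_for_galera() {
  local tries=${1:-60} interval=${2:-10} i
  for ((i = 1; i <= tries; i++)); do
    if sudo pcs status | galera_all_master; then
      echo "galera-bundle: all replicas Master"
      return 0
    fi
    sleep "$interval"
  done
  return 1
}
```

For example, after `sudo pcs resource manage galera-bundle`, run `wait_for_galera 30 10` to poll for up to about five minutes.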

- Delete the virtual Controller from the baremetal node list:

[stack@director ~(undercloud)]$ source ~/stackrc

openstack baremetal node delete 7d48b6ac-5df0-46e2-8f23-f2a3b4c73d65

Deleted node 7d48b6ac-5df0-46e2-8f23-f2a3b4c73d65

- Post-migration checks:

(undercloud) [stack@director]$ NODE=melbourne01bng03-overcloud-controller-1.ctlplane

(undercloud) [stack@director ]$ source ~/stackrc

(undercloud) [stack@director]$ openstack server list --fit

openstack baremetal node list --fit

openstack overcloud profiles list

ssh heat-admin@$NODE "sudo pcs status" 2> /dev/null;

ssh heat-admin@$NODE "sudo podman image list" 2> /dev/null;

ssh heat-admin@$NODE "sudo podman ps" 2> /dev/null;

ssh heat-admin@$NODE "sudo podman exec -ti clustercheck clustercheck" 2> /dev/null

ssh heat-admin@$NODE "df -h" 2> /dev/null

ssh heat-admin@$NODE "ls -la /var/lib/glance/images" 2> /dev/null

ssh heat-admin@$NODE "sudo dmesg|tail -20" 2> /dev/null

ssh heat-admin@$NODE "sudo journalctl -xe" 2> /dev/null

ssh heat-admin@$NODE "sudo podman exec \$(sudo podman ps -f name=rabbitmq-bundle -q) rabbitmqctl cluster_status" 2> /dev/null

ssh heat-admin@$NODE "sudo pcs cluster status" 2> /dev/null
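Each check above discards stderr, so a failing command is easy to miss in a long scroll of output. A minimal sketch that labels each check and counts non-zero exits, assuming bash and key-based SSH as heat-admin to `$NODE`; `run_node_checks` and the `RUNNER` override are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run each check on the migrated controller, label its
# output, and count the checks that exit non-zero.
# RUNNER defaults to "ssh heat-admin@$NODE"; RUNNER="bash -c" gives a local dry run.
run_node_checks() {
  local cmd failures=0
  for cmd in "$@"; do
    echo "=== $cmd ==="
    # RUNNER is unquoted on purpose so "ssh heat-admin@host" splits into words
    if ! ${RUNNER:-ssh heat-admin@$NODE} "$cmd" 2>/dev/null; then
      echo "!!! FAILED: $cmd"
      failures=$((failures + 1))
    fi
  done
  echo "$failures check(s) failed"
  [ "$failures" -eq 0 ]
}
```

For example: `run_node_checks "sudo pcs status" "sudo podman ps" "sudo pcs cluster status" "df -h"`.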

- Overcloud checks:

source ~/overcloudrc

openstack service list

openstack service show keystone

openstack service show neutron

openstack hypervisor list

openstack network list --long --fit

openstack image list --fit

openstack server list --fit --all-projects

openstack project list