Prometheus and Grafana are well-known open-source tools for monitoring and visualizing metrics from a variety of systems and applications. Using the steps in this blog, you can set up Prometheus and Grafana on a Kubernetes cluster.

In upcoming posts that continue this blog, we will also examine how Prometheus can track containerized network functions (CNFs) on Kubernetes clusters for 5G networks and monitor a variety of metrics, including radio performance, bare-metal x86 ISS infrastructure, and networking equipment such as routers and switches.

In my setup I have 3 worker nodes, 1 master, 1 NFS server and 1 Ansible server. I am using the Metal-LB load balancer to access the applications; you can refer to my blog on Metal-LB at https://bit.ly/3kx9Ixk. I have used Ansible to automate the deployment of the Kubernetes cluster. You can refer to my blog on deploying a Kubernetes cluster with Ansible at https://bit.ly/3ZbG5QY. Once the Kubernetes cluster is up and running, follow the steps below to deploy Prometheus and Grafana.

So folks, are you ready to revolutionize your monitoring and visualization game? Let’s dive into the exciting world of Prometheus and Grafana deployment on Kubernetes. Whether you’re a seasoned DevOps enthusiast or just getting started, the steps and playbooks available here will empower you to unleash the full potential of these powerful tools. Get ready for seamless scalability, robust data collection, and stunning visualizations that will take your monitoring efforts to new heights. So grab your virtual hard hat and let’s get deploying!

How Does Prometheus Work with Kubernetes?

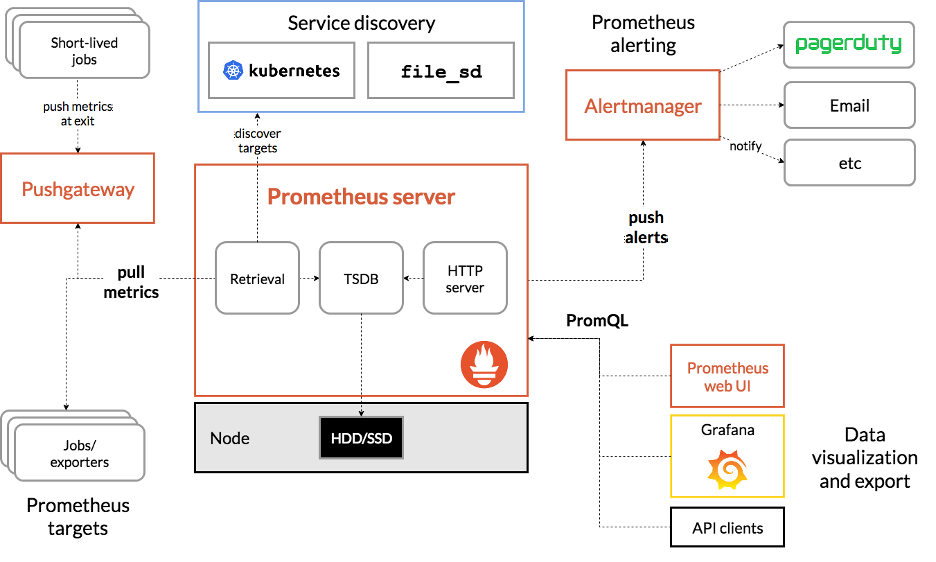

Prometheus follows a pull-based model in which it scrapes metrics from configured targets at regular intervals. In a Kubernetes environment, these targets are typically pods or services identified by Kubernetes metadata known as labels. These labels allow Prometheus to precisely target specific services or components within your application stack.

Diagram reference: prometheus.io
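To make the pull model more concrete, here is a minimal sketch of a Prometheus scrape configuration that discovers pods through the Kubernetes API and keeps only the ones that opt in. The job name, the 15s interval and the use of the prometheus.io/scrape annotation as the opt-in marker are assumptions for illustration; the configuration actually used in this deployment may look different.

```yaml
# Illustrative fragment of a prometheus.yml (hypothetical job name and interval)
global:
  scrape_interval: 15s            # how often Prometheus pulls metrics from each target

scrape_configs:
  - job_name: kubernetes-pods     # hypothetical job name
    kubernetes_sd_configs:
      - role: pod                 # discover every pod through the Kubernetes API
    relabel_configs:
      # Keep only pods annotated with prometheus.io/scrape: "true"
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
        action: keep
        regex: "true"
      # Copy the pod's namespace and name into labels on the scraped series
      - source_labels: [__meta_kubernetes_namespace]
        target_label: namespace
      - source_labels: [__meta_kubernetes_pod_name]
        target_label: pod
```

With a configuration along these lines, Prometheus asks the API server for the current pod list, drops everything that has not opted in, and scrapes the rest on every interval.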

To facilitate this process, you will need to deploy an instance of the Prometheus server within your Kubernetes cluster. This can be achieved with a Kubernetes Deployment.

Here is my infrastructure’s server setup

All the manifests are available on my GitHub account. Feel free to clone them and use them for your deployment.

https://github.com/ranjeetbadhe/prometheus.git

My nodes are

[root@master-cluster1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master-cluster1.ranjeetbadhe.com Ready control-plane 47h v1.27.6

worker1-cluster1.ranjeetbadhe.com Ready <none> 47h v1.27.6

worker2-cluster1.ranjeetbadhe.com Ready <none> 47h v1.27.6

worker3-cluster1.ranjeetbadhe.com Ready <none> 47h v1.27.6

[root@master-cluster1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.0.100 master-cluster1 master-cluster1.ranjeetbadhe.com

192.168.0.101 worker1-cluster1 worker1-cluster1.ranjeetbadhe.com

192.168.0.102 worker2-cluster1 worker2-cluster1.ranjeetbadhe.com

192.168.0.103 worker3-cluster1 worker3-cluster1.ranjeetbadhe.com

192.168.0.55 nfs nfs.ranjeetbadhe.com

192.168.0.50 ansible ansible.ranjeetbadhe.com

Let us verify NFS access from the Kubernetes cluster

To verify access from the Kubernetes cluster to our NFS server, we first check that the NFS exports are visible on the NFS server itself and from every cluster node. The PersistentVolumeClaim that uses this storage is created later, in the same namespace as Prometheus and Grafana. The exports can be listed with the following command:

On NFS Server

[root@nfs ~]# showmount -e localhost

Export list for localhost:

/nfs-grafana-storage *

/nfs *

From Worker nodes

[root@worker1-cluster1 ~]# showmount -e 192.168.0.55

Export list for 192.168.0.55:

/nfs-grafana-storage *

/nfs *

[root@worker2-cluster1 ~]# showmount -e 192.168.0.55

Export list for 192.168.0.55:

/nfs-grafana-storage *

/nfs *

From Master node

[root@master-cluster1 ~]# showmount -e 192.168.0.55

Export list for 192.168.0.55:

/nfs-grafana-storage *

/nfs *

Here are my manifest files for Prometheus and Grafana.

[root@master-cluster1 Deploy-Prometheus-Grafana-on-Kubernetes]# ll

-rw-r--r-- 1 root root 360 Oct 17 13:32 cluster-role.yaml

-rw-r--r-- 1 root root 65 Oct 17 13:32 monitoring-namespace.yaml

-rw-r--r-- 1 root root 618 Oct 17 13:32 prometheus-cluster-role.yaml

-rw-r--r-- 1 root root 4518 Oct 17 13:32 prometheus-config.yaml

-rw-r--r-- 1 root root 1203 Oct 17 19:06 prometheus-deployment.yaml

-rw-r--r-- 1 root root 264 Oct 17 14:40 prometheus-pvc-nfs.yaml

-rw-r--r-- 1 root root 302 Oct 17 14:40 prometheus-pv-nfs.yaml

-rw-r--r-- 1 root root 333 Oct 17 13:32 prometheus-service.yaml

Namespace manifest

---
apiVersion: v1
kind: Namespace
metadata:
  name: monitoring

Create Namespace

[root@master-cluster1 ]# kubectl apply -f monitoring-namespace.yaml

namespace/monitoring created

[root@master-cluster1 ~]# kubectl get ns

NAME STATUS AGE

default Active 34h

kube-flannel Active 34h

kube-node-lease Active 34h

kube-public Active 34h

kube-system Active 34h

metallb-system Active 33h

monitoring Active 33h

Create Volumes and claims

[root@master-cluster1 ]# kubectl apply -f prometheus-pv-nfs.yaml

persistentvolume/pv-nfs-data created

[root@master-cluster1 Deploy-Prometheus-Grafana-on-Kubernetes]# kubectl apply -f prometheus-pvc-nfs.yaml

persistentvolumeclaim/pvc-nfs-data created
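For readers who want a feel for what an NFS-backed volume and its claim look like, here is a hedged sketch. The object names, the NFS server address and the /nfs export are taken from the output in this post; the 10Gi size, the ReadWriteMany access mode, the Retain policy and the empty storage class are assumptions. The real prometheus-pv-nfs.yaml and prometheus-pvc-nfs.yaml in the repository may differ.

```yaml
# Hypothetical sketch of an NFS PersistentVolume and a matching claim
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-nfs-data
spec:
  capacity:
    storage: 10Gi                        # assumed size
  accessModes:
    - ReadWriteMany                      # assumed access mode
  persistentVolumeReclaimPolicy: Retain  # assumed; keeps data if the claim is deleted
  storageClassName: ""                   # assumed; static binding, no dynamic provisioner
  nfs:
    server: 192.168.0.55                 # NFS server address from /etc/hosts
    path: /nfs                           # export listed by showmount (assumed to be the one used)
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-nfs-data
  namespace: monitoring
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ""
  volumeName: pv-nfs-data                # bind explicitly to the volume defined above
  resources:
    requests:
      storage: 10Gi
```

A PersistentVolume is cluster-scoped, while the claim lives in the monitoring namespace so that the pods there can mount it.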

Create Deployment

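[root@master-cluster1 ]# kubectl apply -f prometheus-config.yaml

configmap/prometheus-config created

The remaining manifests from the listing above, such as prometheus-cluster-role.yaml and prometheus-deployment.yaml, are applied the same way with kubectl apply -f.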
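As a rough illustration of how the pieces fit together, a Prometheus Deployment typically mounts the ConfigMap as its configuration directory and the claim as its data directory. The sketch below is not the prometheus-deployment.yaml from the repository. The image name, the container arguments, the mount paths and the assumption that the ConfigMap contains a prometheus.yml key are mine; the names prometheus-config, pvc-nfs-data and the app: prometheus label come from the output and Service manifest in this post.

```yaml
# Hypothetical sketch of a Prometheus Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: prometheus
  namespace: monitoring
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus                # label selected by prometheus-service
    spec:
      containers:
        - name: prometheus
          image: prom/prometheus       # assumed image; pin a version tag in practice
          args:
            - --config.file=/etc/prometheus/prometheus.yml
            - --storage.tsdb.path=/prometheus
          ports:
            - containerPort: 9090      # default Prometheus web port
          volumeMounts:
            - name: config
              mountPath: /etc/prometheus
            - name: data
              mountPath: /prometheus
      volumes:
        - name: config
          configMap:
            name: prometheus-config    # assumed to hold a prometheus.yml key
        - name: data
          persistentVolumeClaim:
            claimName: pvc-nfs-data
```

The label on the pod template matters: a Service only routes traffic to pods whose labels match its selector.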

Verify pods creation for Prometheus and Grafana

[root@master-cluster1 ~]# kubectl get pods -n monitoring

NAME READY STATUS RESTARTS AGE

grafana-6d997767cd-jt5zz 1/1 Running 2 27h

prometheus-749d6c7785-nrwl9 1/1 Running 2 28h

Create Service

---
apiVersion: v1
kind: Service
metadata:
  name: prometheus-service
  namespace: monitoring
  annotations:
    prometheus.io/scrape: 'true'
    prometheus.io/port: '9090'
spec:
  selector:
    app: prometheus
  type: LoadBalancer
  ports:
    - name: http
      port: 8080
      targetPort: 9090

[root@master-cluster1]# kubectl apply -f prometheus-service.yaml

service/prometheus-service created

[root@master-cluster1 ~]# kubectl get svc -n monitoring

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

prometheus-service LoadBalancer 10.101.39.230 192.168.0.110 8080:31105/TCP 44h

Let us deploy Grafana. Here are my manifest files.

[root@master-cluster1 Grafana]# ll

-rw-r--r-- 1 root root 492 Oct 17 13:32 grafana-datasource-config.yaml

-rw-r--r-- 1 root root 862 Oct 17 13:32 grafana-deployment.yaml

-rw-r--r-- 1 root root 249 Oct 17 13:32 grafana-pvc-nfs.yaml

-rw-r--r-- 1 root root 286 Oct 17 19:09 grafana-pv-nfs.yaml

-rw-r--r-- 1 root root 289 Oct 17 19:20 grafana-service.yaml

Apply the manifest files.

[root@master-cluster1 Grafana]# kubectl apply -f grafana-pv-nfs.yaml

persistentvolume/pv-nfs-storage created

[root@master-cluster1 Grafana]# kubectl apply -f grafana-pvc-nfs.yaml

persistentvolumeclaim/pvc-nfs-storage created

[root@master-cluster1 Grafana]# kubectl apply -f grafana-datasource-config.yaml

configmap/grafana-datasources created

[root@master-cluster1 Grafana]# kubectl apply -f grafana-service.yaml

service/grafana created
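The grafana-datasources ConfigMap is what lets Grafana find Prometheus without manual setup in the GUI. The following is a hedged sketch of such a ConfigMap using Grafana's data source provisioning format. The ConfigMap name and the Prometheus service name and port come from the output in this post; the file key, the data source name and the in-cluster DNS URL are assumptions, and the actual grafana-datasource-config.yaml in the repository may differ.

```yaml
# Hypothetical sketch of a Grafana data source provisioning ConfigMap
apiVersion: v1
kind: ConfigMap
metadata:
  name: grafana-datasources
  namespace: monitoring
data:
  prometheus.yaml: |                   # assumed file name inside the ConfigMap
    apiVersion: 1
    datasources:
      - name: Prometheus               # assumed display name
        type: prometheus
        access: proxy                  # Grafana's backend queries Prometheus on behalf of the browser
        url: http://prometheus-service.monitoring.svc:8080   # service name and port from prometheus-service
        isDefault: true
```

For Grafana to pick this up, the ConfigMap would be mounted into the Grafana pod under /etc/grafana/provisioning/datasources.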

Verify Prometheus and Grafana Pods distribution across nodes.

[root@master-cluster1 ~]# kubectl get pods -n monitoring -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

grafana-6d997767cd-jt5zz 1/1 Running 2 27h 10.244.2.10 worker2-cluster1.ranjeetbadhe.com

prometheus-749d6c7785-nrwl9 1/1 Running 2 28h 10.244.3.13 worker3-cluster1.ranjeetbadhe.com

Verify the external IP address and GUI access

[root@master-cluster1 Grafana]# kubectl get svc -n monitoring

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

grafana LoadBalancer 10.102.180.170 192.168.0.111 3000:31708/TCP 15s

prometheus-service LoadBalancer 10.101.39.230 192.168.0.110 8080:31105/TCP 5h32m

[root@master-cluster1 ~]# curl http://192.168.0.110:8080

Found.

[root@master-cluster1 ~]# curl http://192.168.0.111:3000

Found.

Dashboard

Prometheus Dashboard

Grafana Dashboard

Grafana 3662 template

That’s it for now, folks. Thank you for reading my blog. I welcome your comments and feedback.