Recently I was working on migrating a virtual OpenStack controller to a bare-metal node; I described the steps and procedures in my previous blog, bit.ly/3XE5YJr. The migration failed, and when I started analysing the logs to find the cause, I found the following error:

```
keystoneauth1.exceptions.http.Unauthorized: The password is expired and needs to be changed for user:
```

Digging further, we found that the nodes' clocks were out of sync because the NTP source was unreachable, so the passwords created during deployment were already expired.

```
[root@overcloud-controller-0 ~]# podman exec -it $(podman ps --filter name=galera -q) mysql -u root keystone -e 'select created_at,expires_at from password where expires_at IS NOT NULL;'
+---------------------+---------------------+
| created_at          | expires_at          |
+---------------------+---------------------+
| 2023-01-03 12:23:40 | 2023-01-05 01:41:52 |
| 2023-06-25 01:15:25 | 2023-07-01 00:21:21 |
| 2023-06-25 01:17:06 | 2023-07-01 00:22:59 |
| 2023-06-25 01:17:06 | 2023-07-01 00:23:01 |
| 2023-06-25 01:17:08 | 2023-07-01 00:23:02 |
| 2023-06-25 01:17:09 | 2023-07-01 00:23:02 |
| 2023-06-25 01:17:10 | 2023-07-01 00:23:03 |
| 2023-06-25 01:17:10 | 2023-07-01 00:23:04 |
| 2023-06-25 01:17:11 | 2023-07-01 00:23:05 |
| 2023-06-25 01:17:13 | 2023-07-01 00:23:06 |
| 2023-06-25 01:17:13 | 2023-07-01 00:23:07 |
| 2023-06-25 01:17:13 | 2023-07-01 00:23:07 |
| 2023-06-25 01:17:19 | 2023-07-01 00:23:12 |
+---------------------+---------------------+
[root@overcloud-controller-0 ~]# chronyc sources
210 Number of sources = 4
MS Name/IP address         Stratum Poll Reach LastRx Last sample
====================================================================
```
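To spot this condition quickly, the rows returned by the query can be compared against a trusted reference time, for example one read from a healthy NTP source. The Python sketch below uses a hypothetical helper, `expired_rows` (not part of Keystone or any OpenStack tool), and assumes both columns are naive UTC timestamps in the `YYYY-MM-DD HH:MM:SS` format shown above.

```python
from datetime import datetime

# Timestamp format of the created_at / expires_at columns in the query output.
TS_FORMAT = "%Y-%m-%d %H:%M:%S"


def expired_rows(rows, now):
    """Return the (created_at, expires_at) rows whose expiry is at or before `now`.

    Hypothetical helper: `rows` are string pairs copied from the MySQL output and
    `now` is a naive UTC datetime taken from a trusted time source (assumption).
    """
    expired = []
    for created_at, expires_at in rows:
        if datetime.strptime(expires_at, TS_FORMAT) <= now:
            expired.append((created_at, expires_at))
    return expired


if __name__ == "__main__":
    rows = [
        ("2023-01-03 12:23:40", "2023-01-05 01:41:52"),
        ("2023-06-25 01:15:25", "2023-07-01 00:21:21"),
    ]
    # Reference time chosen for illustration only.
    for row in expired_rows(rows, datetime(2023, 6, 30, 12, 0, 0)):
        print("already expired:", row)
```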

We tested NTP reachability, found the source unreachable, fixed the routing issue, reset the password and ran the cloud update script, which resolved the issue. While troubleshooting, I realized that OpenStack services depend on accurate timekeeping, and that it is vital to configure nodes, VNFs, hosts and applications to receive time seamlessly from the data centre's dedicated time servers. Remember too that the service containers running on RHOSP take their time from the host on which they run.

```
[root@overcloud-controller-0 ~]# chronyc sources
MS Name/IP address         Stratum Poll Reach LastRx Last sample
===============================================================================
^? 152.70.69.232                 2   6     1     1  -2365us[-2365us] +/-  159ms
^? 165.22.211.200                3   6     1     2  -7943us[-7943us] +/-  112ms
^? 121.200.9.38                  3   6     1     2    -17ms[ -17ms] +/-   71ms
^? 172.20.10.22                  2   6     1     2  -8868us[-8868us] +/-   75ms
```
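When many nodes need checking, the `chronyc sources` output can be parsed instead of read by eye. A minimal Python sketch, assuming the column layout shown above; the field meanings in the comments follow the chronyc documentation, and `parse_sources` / `is_synchronised` are hypothetical helper names. In the output above every source is flagged `^?` with a Reach of 1, so none has been selected for synchronization yet.

```python
def parse_sources(output):
    """Parse the source lines of `chronyc sources` into dictionaries.

    MS column: 1st char is the mode ('^' server, '=' peer, '#' local reference
    clock); 2nd char is the state ('*' synchronised to, '+' combined,
    '-' not combined, '?' connectivity lost or failing tests, also shown at
    start-up, 'x' falseticker, '~' too variable).
    Reach is an octal register of the last 8 polls.
    """
    sources = []
    for line in output.splitlines():
        # Skip the header, the '====' separator and any summary lines.
        if len(line) < 2 or line[0] not in "^=#" or line[1] not in "*+-?x~":
            continue
        ms, name, stratum, poll, reach = line.split()[:5]
        sources.append({
            "mode": ms[0],
            "state": ms[1],
            "name": name,
            "stratum": int(stratum),
            "poll": int(poll),
            # Number of successful polls among the last 8.
            "reach_ok": bin(int(reach, 8)).count("1"),
        })
    return sources


def is_synchronised(sources):
    """True if chronyd has selected a source ('*' state)."""
    return any(src["state"] == "*" for src in sources)


SAMPLE = """\
MS Name/IP address         Stratum Poll Reach LastRx Last sample
===============================================================================
^? 152.70.69.232                 2   6     1     1  -2365us[-2365us] +/-  159ms
^? 165.22.211.200                3   6     1     2  -7943us[-7943us] +/-  112ms
^? 121.200.9.38                  3   6     1     2    -17ms[ -17ms] +/-   71ms
^? 172.20.10.22                  2   6     1     2  -8868us[-8868us] +/-   75ms
"""

if __name__ == "__main__":
    srcs = parse_sources(SAMPLE)
    for s in srcs:
        print(s["name"], s["state"], s["stratum"], s["reach_ok"])
    print("synchronised:", is_synchronised(srcs))
```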

Enabling chrony takes only a couple of steps on the time server: allow the client subnet in /etc/chrony.conf and restart chronyd. Then include the time server in your network config YAML files.

```
[root@overcloud-controller-0 ~]# egrep allow /etc/chrony.conf
allow 192.168.0.0/24
allow
[root@overcloud-controller-0 ~]# systemctl restart chronyd.service
```
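The `allow` directive controls which clients chronyd will serve. As a rough illustration of the subnet matching, the Python sketch below checks whether a client address falls inside an explicit `allow <subnet>` entry. It is a deliberate simplification and an assumption, not chrony's actual access-control logic: `deny` rules, rule ordering, `allow all`, bare `allow` lines and any address forms Python's `ipaddress` module cannot parse are all skipped, so consult the chrony.conf documentation for the real semantics. The helper names are hypothetical.

```python
import ipaddress


def allowed_subnets(conf_text):
    """Collect explicit subnets from `allow <subnet>` lines of chrony.conf text.

    Simplification (assumption): '#' comments are stripped; bare `allow`,
    `allow all`, `deny` lines and unparseable addresses are ignored.
    """
    subnets = []
    for raw in conf_text.splitlines():
        parts = raw.split("#", 1)[0].split()
        if len(parts) != 2 or parts[0] != "allow":
            continue
        try:
            subnets.append(ipaddress.ip_network(parts[1], strict=False))
        except ValueError:
            continue  # e.g. "all" or a form ipaddress does not understand
    return subnets


def client_allowed(client_ip, subnets):
    """True if the client address lies inside any collected subnet."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in subnets)


if __name__ == "__main__":
    conf = "allow 192.168.0.0/24\nallow\n"
    nets = allowed_subnets(conf)
    print(nets)
    print(client_allowed("192.168.0.15", nets), client_allowed("10.0.0.5", nets))
```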

This incident motivated me to write this blog on the importance of time synchronization and on design and implementation strategies for a telco data centre hosting 4G LTE, 5G Core (5GC) and RAN/O-RAN. I am covering this topic in four parts; this episode is about clock distribution.

In this series we will explore the Red Hat OpenStack Platform (RHOSP) and OpenShift PTP implementations for telco cloud using Time-Master, and discuss new approaches to time synchronization based on the Precision Time Protocol (PTP), the 5G requirement for precise time distribution driven by TDD spectrum, and much more.

Network synchronization means aligning the frequency, phase and/or time of the clocks in all nodes, whether EPC, IMS or RAN, by distributing a common frequency, phase or time reference.
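The difference between frequency alignment and time (phase) alignment can be made concrete with a simple linear clock model, x(t) = x0 + y * t, where x0 is the initial time/phase offset and y the fractional frequency offset. This is a textbook simplification (an assumption here) that ignores frequency wander and ageing, and the function name is hypothetical.

```python
def time_error(initial_offset_s, freq_offset_ppm, elapsed_s):
    """Time error of a free-running clock under a linear model.

    x(t) = x0 + y * t, with y = freq_offset_ppm * 1e-6 (fractional frequency).
    Simplified model (assumption): ignores frequency wander and ageing.
    """
    return initial_offset_s + freq_offset_ppm * 1e-6 * elapsed_s


if __name__ == "__main__":
    one_day = 86_400  # seconds
    # Time-aligned at t=0 but 10 ppm off in frequency: the error grows with time.
    print(time_error(0.0, 10.0, one_day))    # about 0.864 s after one day
    # Frequency-aligned but 5 ms off in phase: the error stays constant.
    print(time_error(0.005, 0.0, one_day))   # 0.005
```

A clock that is only time-aligned drifts away again, while a clock that is only frequency-aligned keeps a constant offset; full time synchronization needs both terms held near zero.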

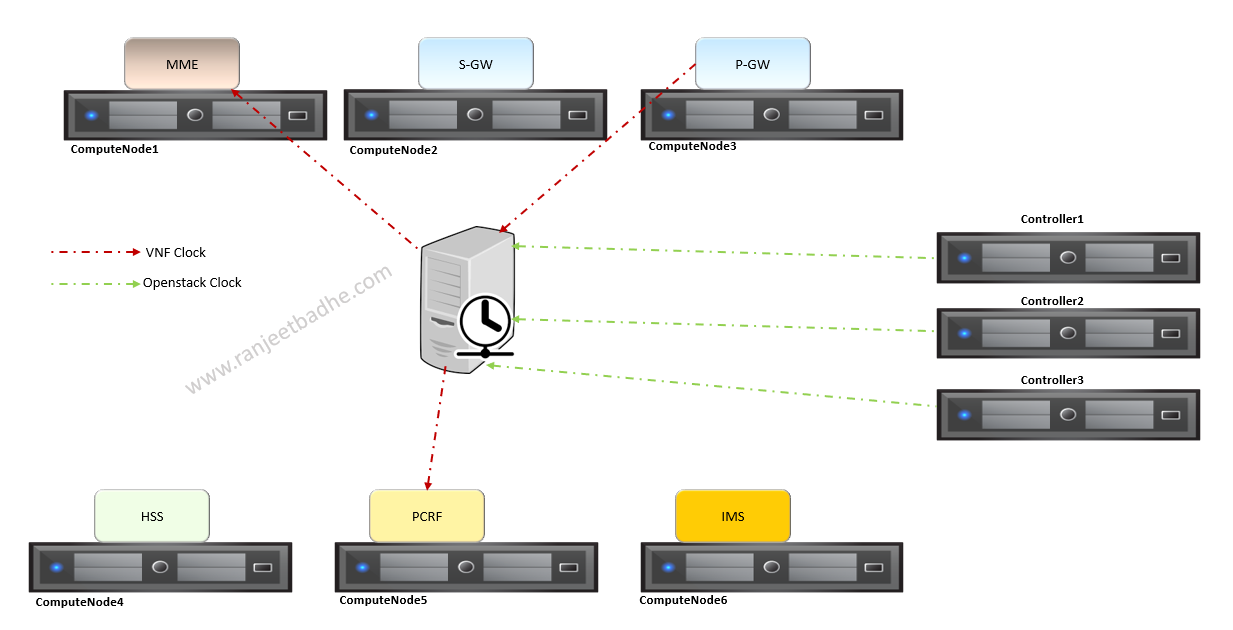

Poor synchronization degrades the quality of service delivered over transport, switching, mobile and signalling networks. As the picture shows, the EPC components and the RHOSP compute and controller nodes are all clocked from a common time source.

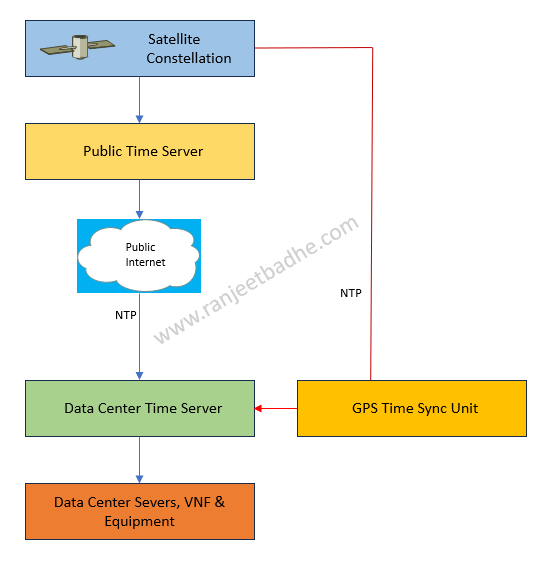

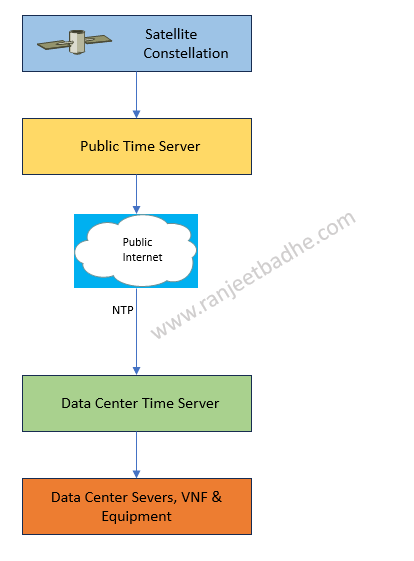

Let's look at how clock sources are delivered to the servers and telecom equipment in a data centre.

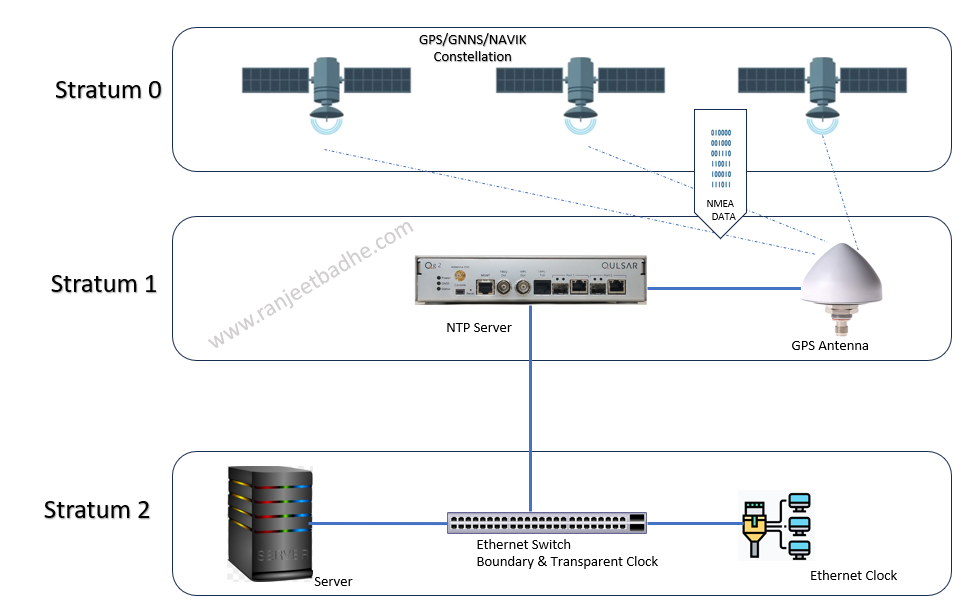

Stratum 1 clock sourcing (clock from the heavens)

Timing from atomic clocks is now an integral part of data-centre operations. Atomic clock time transmitted by the Global Positioning System (GPS) and other Global Navigation Satellite Systems (GNSS), such as India's NavIC, synchronizes data centres across geographies, and individual atomic clocks are also deployed in individual telco data centres.

The reference clock is the device at the top of the stratum hierarchy. Reference clocks, known as stratum 0, distribute Coordinated Universal Time (UTC); a server connected directly to a reference clock is a stratum 1 server.

Stratum 2 Hierarchy

Here we allocate a dedicated time server in the data centre, which takes its time from a stratum 1 source and therefore operates at stratum 2, and connect the clients to it.
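The stratum numbering follows a simple rule: each NTP hop adds one to the stratum of its upstream source, and NTP treats stratum 16 as unsynchronized, so valid server strata run from 1 to 15. The Python sketch below applies that rule to a hypothetical topology; the node names are illustrative and the function assumes the map contains no loops.

```python
MAX_STRATUM = 16  # NTP: 1-15 are valid server strata, 16 means "unsynchronized"


def compute_strata(upstream):
    """Derive each node's stratum from a {node: upstream_node} map.

    A node whose upstream is None is treated as a stratum 0 reference clock
    (e.g. a GNSS receiver). Assumes the map has no loops.
    """
    strata = {}

    def stratum(node):
        if node not in strata:
            parent = upstream[node]
            if parent is None:
                strata[node] = 0
            else:
                strata[node] = min(stratum(parent) + 1, MAX_STRATUM)
        return strata[node]

    for node in upstream:
        stratum(node)
    return strata


if __name__ == "__main__":
    # Hypothetical: GNSS receiver -> stratum 1 server -> in-DC stratum 2 server -> hosts.
    topology = {
        "gnss-receiver": None,
        "time-server-s1": "gnss-receiver",
        "dc-ntp-s2": "time-server-s1",
        "overcloud-controller-0": "dc-ntp-s2",
        "compute-0": "dc-ntp-s2",
    }
    for node, level in compute_strata(topology).items():
        print(f"{node}: stratum {level}")
```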

I conclude this part with my comments and observations.

The second option carries reliability, accuracy and security risks. The reliability risks concern the availability of the public NTP server and its accuracy: if the public NTP server is overwhelmed by clients, its accuracy may degrade.

Accuracy risks concern the precision and correctness of the source server. Congestion on the LAN or WAN and asymmetric path delays degrade synchronization, because NTP assumes that the outbound and return delays are equal.
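Why asymmetric delay hurts: NTP estimates the clock offset from four timestamps, T1 (client transmit), T2 (server receive), T3 (server transmit) and T4 (client receive), as offset = ((T2 - T1) + (T3 - T4)) / 2 and round-trip delay = (T4 - T1) - (T3 - T2). That estimate is exact only when the outbound and return delays are equal; otherwise it is off by half their difference. The Python sketch below simulates one exchange with hypothetical delay values in milliseconds; `simulate_exchange` is an illustrative helper, not part of any NTP implementation.

```python
def ntp_offset_and_delay(t1, t2, t3, t4):
    """Standard NTP on-wire estimate.

    t1: client transmit (client clock)   t2: server receive (server clock)
    t3: server transmit (server clock)   t4: client receive (client clock)
    Returns (estimated offset of server clock vs client clock, round-trip delay).
    """
    offset = ((t2 - t1) + (t3 - t4)) / 2
    delay = (t4 - t1) - (t3 - t2)
    return offset, delay


def simulate_exchange(true_offset, d_up, d_down, t1=0.0, processing=1.0):
    """Hypothetical exchange: the server clock is `true_offset` ahead of the client;
    d_up / d_down are the one-way delays client->server and server->client."""
    t2 = t1 + d_up + true_offset      # request arrives, read on the server clock
    t3 = t2 + processing              # server replies after its processing time
    t4 = t3 - true_offset + d_down    # reply arrives, read on the client clock
    return t1, t2, t3, t4


if __name__ == "__main__":
    # True offset is 5 ms in both cases; only the path asymmetry changes.
    print(ntp_offset_and_delay(*simulate_exchange(5.0, 10.0, 10.0)))  # (5.0, 20.0)
    print(ntp_offset_and_delay(*simulate_exchange(5.0, 30.0, 10.0)))  # (15.0, 40.0)
```

With 30 ms outbound and 10 ms return, the estimate is 10 ms too high, exactly half of the 20 ms asymmetry, and nothing in the four timestamps reveals it.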

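Because NTP uses UDP port 123, that port must be open on the firewall. Public NTP servers also typically offer no authentication (unless they support a mechanism such as Network Time Security, NTS), so clients cannot validate the server they synchronize from. Clearly, security lags behind.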
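To confirm that UDP port 123 is actually open end to end (the reachability test from the incident above), a single SNTP request is enough. A minimal Python sketch using only the standard library: it sends a 48-byte client request (LI=0, VN=3, Mode=3, hence a first byte of 0x1B) and reads the stratum and transmit timestamp from the reply. It performs no authentication and no offset calculation, so treat it as a connectivity probe only; the function names are hypothetical.

```python
import socket
import struct

NTP_PORT = 123                  # NTP/SNTP runs over UDP port 123
NTP_TO_UNIX_EPOCH = 2208988800  # seconds from 1900-01-01 (NTP era 0) to 1970-01-01


def build_request():
    """48-byte SNTP client request: LI=0, VN=3, Mode=3 -> first byte 0x1B."""
    return b"\x1b" + bytes(47)


def parse_reply(packet):
    """Return (stratum, server transmit time as Unix seconds) from an NTP reply."""
    if len(packet) < 48:
        raise ValueError("NTP reply shorter than 48 bytes")
    stratum = packet[1]
    seconds, fraction = struct.unpack("!II", packet[40:48])
    return stratum, seconds - NTP_TO_UNIX_EPOCH + fraction / 2**32


def probe(host, timeout=2.0):
    """Send one request to host:123/UDP; raises OSError (e.g. a timeout) on failure."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_request(), (host, NTP_PORT))
        reply, _ = sock.recvfrom(512)
    return parse_reply(reply)
```

For example, `probe("172.20.10.22")` against one of the sources listed earlier should return a stratum between 1 and 15 from a synchronized server if routing and the firewall allow NTP; a timeout points at routing or filtering, as in the incident above.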

It is good practice to configure multiple NTP servers, at least four, and to keep the stratum architecture consistent when sourcing from public NTP servers. Deploying GPS receivers for reliable, accurate and homogeneous synchronization is always the better option.
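One reason to configure at least four sources is that a client can then outvote a faulty one (a "falseticker"). NTP's source selection is based on Marzullo's interval-intersection algorithm: each source contributes an interval of offset plus or minus an error bound, and the client looks for the range on which the largest number of intervals agree. The Python sketch below is a simplified Marzullo intersection, not chrony's or ntpd's actual selection code, and the server names and offsets in the example are hypothetical.

```python
def marzullo(intervals):
    """Find the range covered by the most intervals.

    intervals: list of (low, high) offset bounds, one per time source.
    Returns (count, low, high) for the best-agreed range.
    """
    edges = []
    for low, high in intervals:
        edges.append((low, 0))   # 0 = interval opens (sorts before a close at the same value)
        edges.append((high, 1))  # 1 = interval closes
    edges.sort()

    best = count = 0
    best_low = best_high = None
    for i, (value, kind) in enumerate(edges):
        if kind == 0:
            count += 1
            if count > best:
                # The agreed range runs until the next edge changes the count.
                best, best_low, best_high = count, value, edges[i + 1][0]
        else:
            count -= 1
    return best, best_low, best_high


def truechimers(sources, low, high):
    """Names of sources whose interval contains the agreed range."""
    return [name for name, (lo, hi) in sources.items() if lo <= low and hi >= high]


if __name__ == "__main__":
    # Hypothetical offset bounds (ms) from four servers.
    sources = {"ntp-a": (8, 12), "ntp-b": (11, 13), "ntp-c": (10, 12), "ntp-d": (20, 22)}
    count, low, high = marzullo(list(sources.values()))
    print(count, low, high)                  # 3 11 12
    print(truechimers(sources, low, high))   # ['ntp-a', 'ntp-b', 'ntp-c']
    print("majority agrees:", count > len(sources) / 2)
```

With four sources, the three that agree outvote `ntp-d`; with only two sources that disagree, the client would have no majority to decide which one is wrong.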