In my previous blog post, we saw how to deploy a Kubernetes cluster using Ansible playbooks. I have used the same playbooks to create a new Kubernetes cluster for demonstrating the MetalLB load balancer.

MetalLB is a very popular, free, and open-source load balancer. I have deployed it as a component in 5G core networks. MetalLB implements Kubernetes services of type LoadBalancer, providing a network load-balancer implementation for on-premises (bare-metal) clusters that do not run on a cloud provider such as Google Cloud, AWS, or Azure. MetalLB works in two modes, Layer 2 (ARP/NDP) and BGP, to announce service IP addresses. In this blog we will explore the Layer 2 mode deployment.

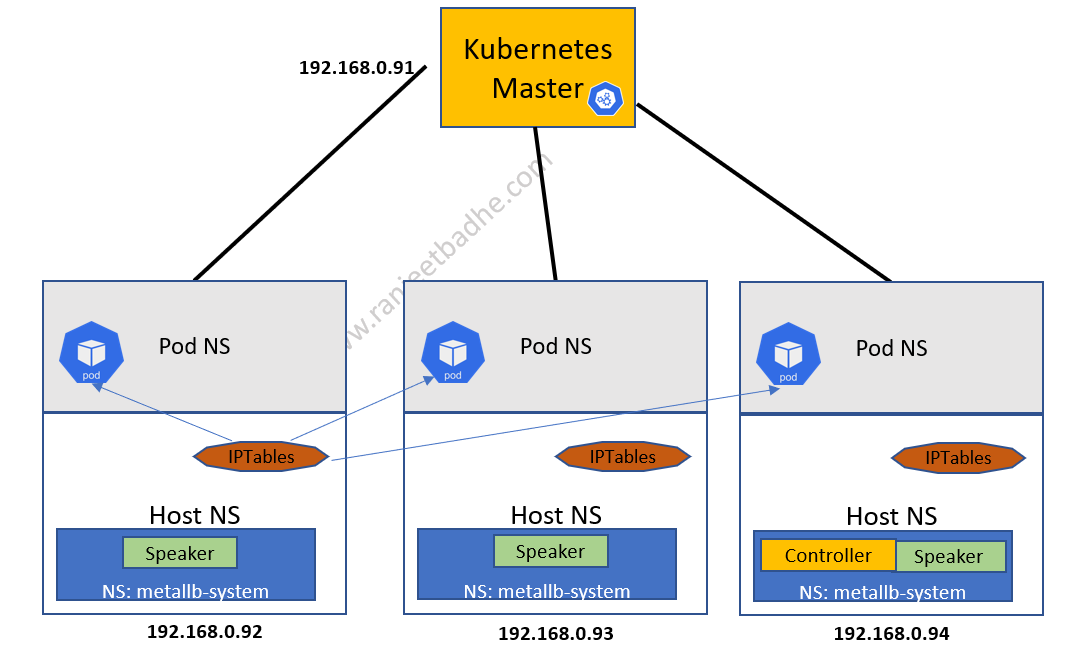

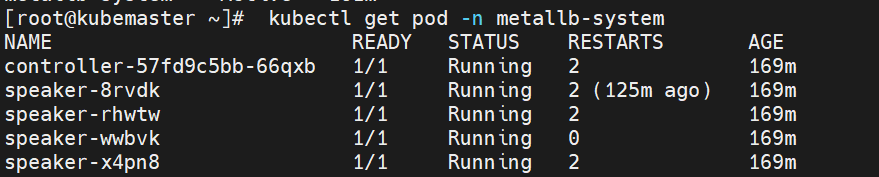

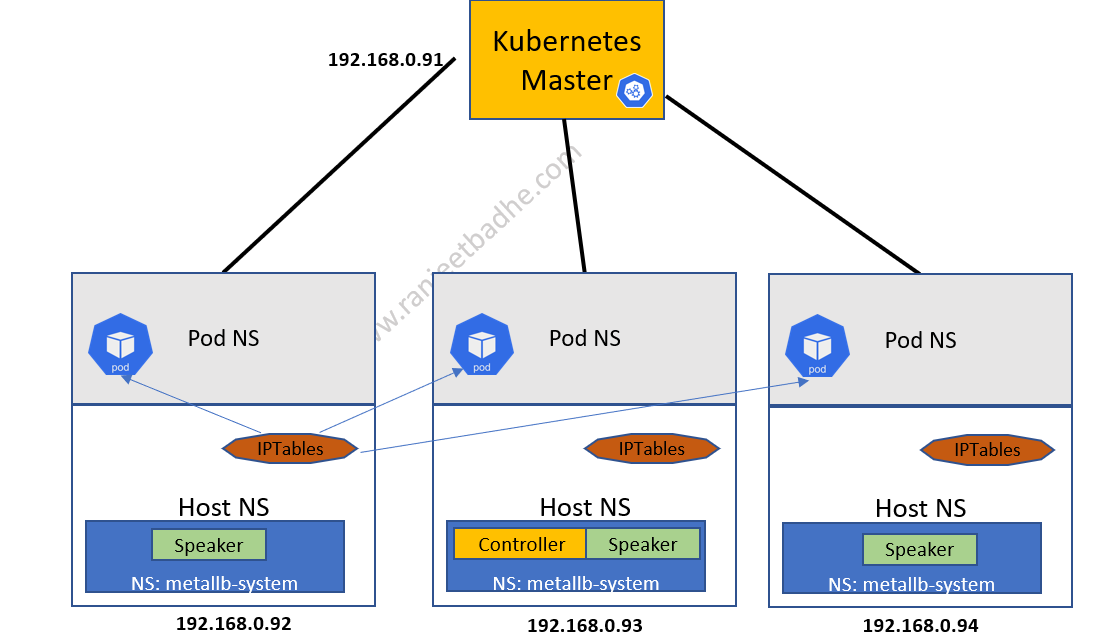

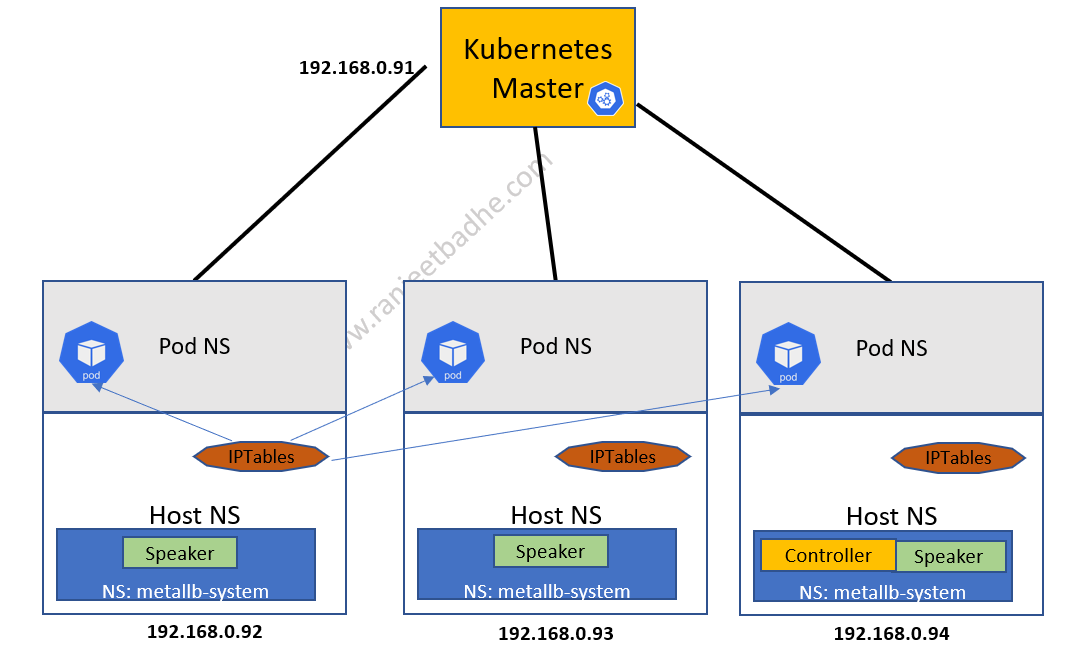

MetalLB has two components, the controller and the speaker, both containerized into pods. The controller watches for services of type LoadBalancer and allocates IP addresses to them. The speaker manages the advertisement of the IP addresses associated with those services. The speaker DaemonSet runs a pod on every node. We will talk more about this in a later section.
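
To make the controller's job concrete, here is a minimal Python sketch of handing out addresses from a pool on a first-free basis. It is a toy model under my own assumptions, not MetalLB's code: the class name `PoolAllocator` and the service names are hypothetical, and the range is the one used in the ConfigMap in this post.

```python
import ipaddress


class PoolAllocator:
    """Toy model of a controller handing out external IPs (hypothetical, not MetalLB code)."""

    def __init__(self, first: str, last: str) -> None:
        start = int(ipaddress.IPv4Address(first))
        end = int(ipaddress.IPv4Address(last))
        if start > end:
            raise ValueError("range start must not be above range end")
        # Every address in the inclusive range, in ascending order.
        self.pool = [ipaddress.IPv4Address(n) for n in range(start, end + 1)]
        # Maps a service name to the address it currently holds.
        self.assigned = {}

    def allocate(self, service: str) -> ipaddress.IPv4Address:
        """Return the service's IP, assigning the lowest free one on first request."""
        if service in self.assigned:
            return self.assigned[service]
        used = set(self.assigned.values())
        for ip in self.pool:
            if ip not in used:
                self.assigned[service] = ip
                return ip
        raise RuntimeError("address pool exhausted")

    def release(self, service: str) -> None:
        """Give the service's IP back to the pool when the service is deleted."""
        self.assigned.pop(service, None)


if __name__ == "__main__":
    allocator = PoolAllocator("192.168.0.120", "192.168.0.125")
    print(allocator.allocate("default/nginx-deployment"))
    print(allocator.allocate("default/another-service"))
```

In this model the first service receives 192.168.0.120 and the second 192.168.0.121. Asking again for a service that already holds an address returns the same address, which mirrors the idea that an external IP stays stable for the lifetime of the service.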

    [root@kubemaster ~]# kubectl get pod -n metallb-system
    NAME                         READY   STATUS    RESTARTS       AGE
    controller-57fd9c5bb-66qxb   1/1     Running   2              169m
    speaker-8rvdk                1/1     Running   2 (125m ago)   169m
    speaker-rhwtw                1/1     Running   2              169m
    speaker-wwbvk                1/1     Running   0              169m
    speaker-x4pn8                1/1     Running   2              169m

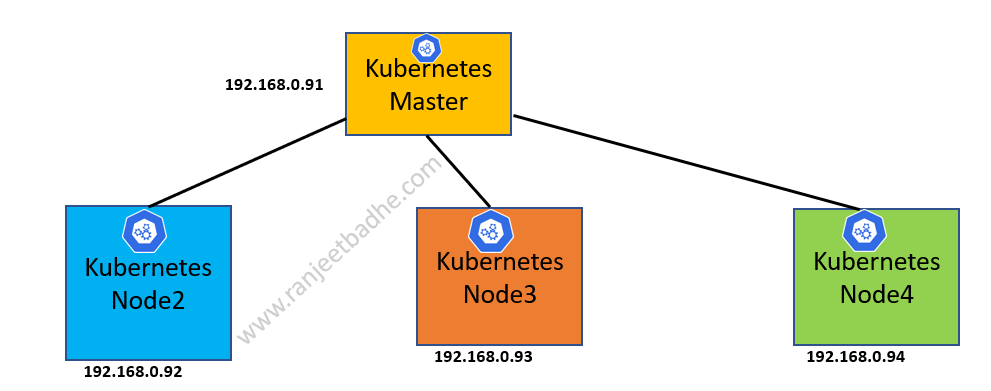

In my setup I have 3 worker nodes and 1 master node.

    [root@kubemaster ~]# kubectl get nodes
    NAME                          STATUS   ROLES                  AGE   VERSION
    kubemaster.ranjeetbadhe.com   Ready    control-plane,master   14h   v1.23.2
    node2.ranjeetbadhe.com        Ready    <none>                 14h   v1.23.2
    node3.ranjeetbadhe.com        Ready    <none>                 14h   v1.23.2
    node4.ranjeetbadhe.com        Ready    <none>                 14h   v1.23.2

    [root@kubemaster ~]# kubectl get pods
    No resources found in default namespace.

Let us apply the manifest namespace.yaml. Its contents are shown below.

    [root@kubemaster ~]# cat namespace.yaml
    apiVersion: v1
    kind: Namespace
    metadata:
      name: metallb-system
      labels:
        app: metallb

    [root@kubemaster ~]# kubectl apply -f namespace.yaml
    namespace/metallb-system created

The manifest metallb.yaml creates the speakers, the controller, and the other supporting entities.

I have pasted this manifest at the end since it is large. Once you apply it, you will see output similar to the following.

    [root@kubemaster ~]# kubectl apply -f metallb.yaml
    Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+
    podsecuritypolicy.policy/controller configured
    podsecuritypolicy.policy/speaker configured
    serviceaccount/controller created
    serviceaccount/speaker created
    clusterrole.rbac.authorization.k8s.io/metallb-system:controller unchanged
    clusterrole.rbac.authorization.k8s.io/metallb-system:speaker unchanged
    role.rbac.authorization.k8s.io/config-watcher created
    role.rbac.authorization.k8s.io/pod-lister created
    role.rbac.authorization.k8s.io/controller created
    clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller unchanged
    clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker unchanged
    rolebinding.rbac.authorization.k8s.io/config-watcher created
    rolebinding.rbac.authorization.k8s.io/pod-lister created
    rolebinding.rbac.authorization.k8s.io/controller created
    daemonset.apps/speaker created
    deployment.apps/controller created

    [root@kubemaster ~]# kubectl get ns
    NAME              STATUS   AGE
    default           Active   14h
    kube-flannel      Active   14h
    kube-node-lease   Active   14h
    kube-public       Active   14h
    kube-system       Active   14h
    metallb-system    Active   43s

In the manifest metallb-config.yml, make sure you pick an IP range that is relevant to your infrastructure.

    [root@kubemaster ~]# cat metallb-config.yml
    apiVersion: v1
    kind: ConfigMap
    metadata:
      namespace: metallb-system
      name: config
    data:
      config: |
        address-pools:
        - name: default
          protocol: layer2
          addresses:
          - 192.168.0.120-192.168.0.125
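
Before applying the ConfigMap, it can help to sanity-check the range. The sketch below uses only Python's standard `ipaddress` module to expand the range and to check that it sits inside the node subnet without colliding with node addresses. The function names are my own, the /24 subnet is an assumption about this lab network, and the node addresses are the ones visible in the speaker pod listing in this post.

```python
import ipaddress


def expand_range(spec: str) -> list:
    """Expand 'first-last' (the range notation used in the ConfigMap) into addresses."""
    first, last = (ipaddress.IPv4Address(part.strip()) for part in spec.split("-"))
    if first > last:
        raise ValueError(f"invalid range: {spec}")
    return [ipaddress.IPv4Address(n) for n in range(int(first), int(last) + 1)]


def check_pool(spec: str, subnet: str, node_ips: list) -> list:
    """Return a list of human-readable problems; an empty list means the pool looks sane."""
    network = ipaddress.IPv4Network(subnet)
    nodes = {ipaddress.IPv4Address(ip) for ip in node_ips}
    problems = []
    for ip in expand_range(spec):
        if ip not in network:
            problems.append(f"{ip} is outside {network}")
        if ip in nodes:
            problems.append(f"{ip} is already used by a node")
    return problems


if __name__ == "__main__":
    # Assumed values: a /24 lab subnet and the four node addresses.
    issues = check_pool(
        "192.168.0.120-192.168.0.125",
        "192.168.0.0/24",
        ["192.168.0.91", "192.168.0.92", "192.168.0.93", "192.168.0.94"],
    )
    print(issues or "pool looks sane")
```

This check only knows about the addresses you pass in. It cannot tell whether a DHCP server or another device on the network already uses part of the range, so that still needs to be confirmed separately.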

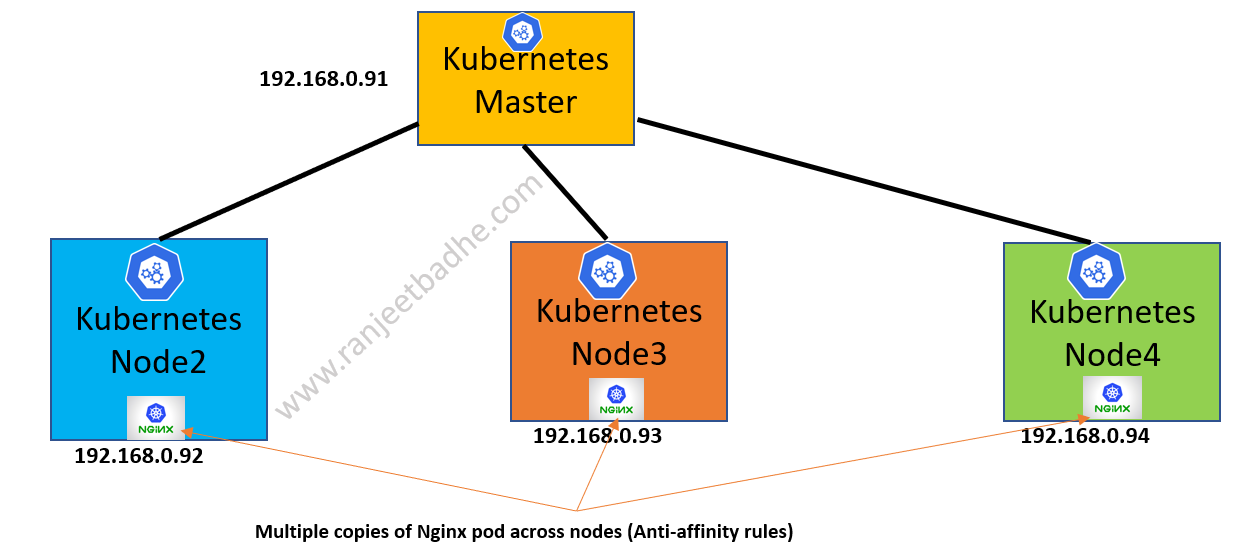

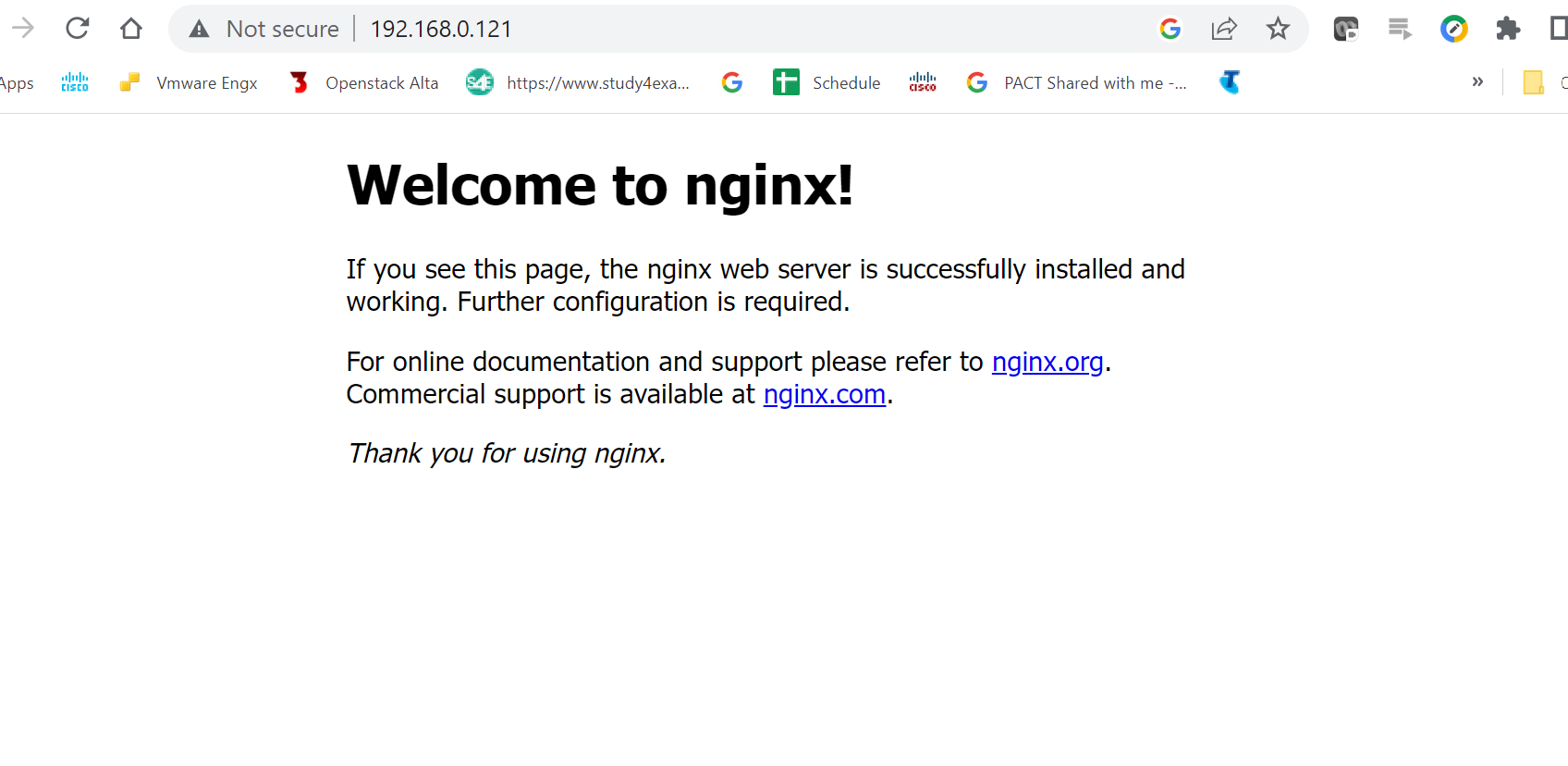

Next comes the manifest anti-affinity-pods.yml. This manifest deploys 3 Nginx pods across 3 nodes, one pod per node. Nginx continues to serve the web page even if 2 of the nodes are taken offline.

    [root@kubemaster ~]# cat anti-affinity-pods.yml
    apiVersion: apps/v1 # for versions before 1.9.0 use apps/v1beta2
    kind: Deployment
    metadata:
      name: nginx-deployment
    spec:
      selector:
        matchLabels:
          app: nginx
      replicas: 3
      template:
        metadata:
          labels:
            app: nginx
        spec:
          affinity:
            podAntiAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchExpressions:
                  - key: app
                    operator: In
                    values:
                    - nginx
                topologyKey: "kubernetes.io/hostname"
          containers:
          - name: nginx
            image: nginx:1.7.9
            ports:
            - containerPort: 80
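
The effect of a required anti-affinity rule keyed on `kubernetes.io/hostname` can be modelled in a few lines of Python. This is a simplified sketch, not the Kubernetes scheduler: the function name `schedule` and the pod names are hypothetical, the real scheduler scores nodes rather than taking the first free one, and I am assuming the control-plane node does not accept these pods, so only the three workers are candidates.

```python
def schedule(replicas: int, nodes: list) -> dict:
    """Place pods one by one, never putting two on the same hostname.

    Returns a mapping of pod name to node name, or to None when the pod
    cannot be placed (it would stay Pending in a real cluster).
    """
    placement = {}
    occupied = set()
    for index in range(replicas):
        pod = f"nginx-{index}"
        # A node qualifies only if no pod with the same label already runs there.
        free = [node for node in nodes if node not in occupied]
        if free:
            placement[pod] = free[0]
            occupied.add(free[0])
        else:
            placement[pod] = None
    return placement


if __name__ == "__main__":
    workers = ["node2", "node3", "node4"]  # assumed schedulable workers
    for pod, node in schedule(4, workers).items():
        print(pod, "->", node or "Pending")
```

With three replicas and three workers every pod lands on its own node. Asking for a fourth replica leaves one pod unplaced, which is the trade-off of using a required rule instead of a preferred one.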

    [root@kubemaster ~]# kubectl create -f metallb-config.yml
    configmap/config created

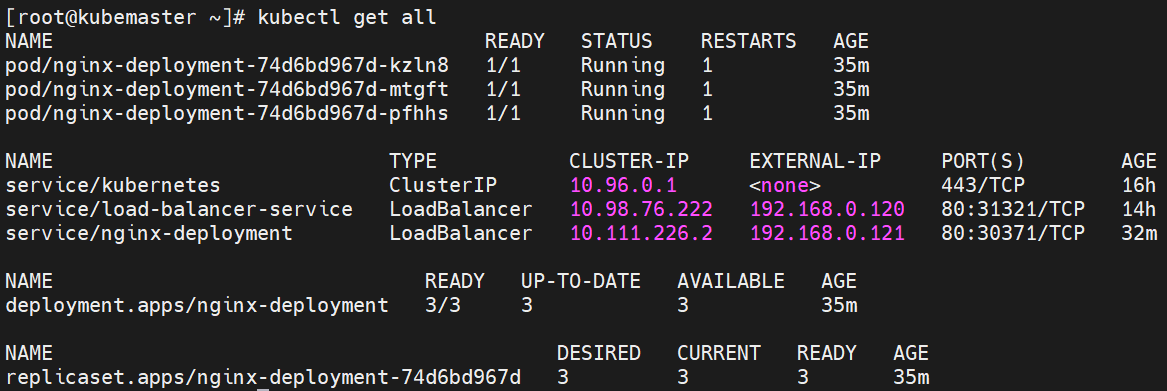

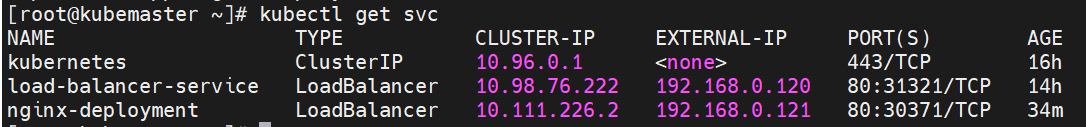

    [root@kubemaster ~]# kubectl expose deploy nginx-deployment --port 80 --type LoadBalancer
    service/nginx-deployment exposed
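
`kubectl expose` generates a Service object for you. As a rough illustration of what such an object looks like, the Python sketch below builds a LoadBalancer Service as a plain dictionary and prints it as JSON, which kubectl accepts as well as YAML. It approximates what the command creates and is not its exact output; the helper name `loadbalancer_service` is hypothetical, and the name and selector are taken from the deployment manifest in this post.

```python
import json


def loadbalancer_service(name: str, selector: dict, port: int) -> dict:
    """Build a Service manifest of type LoadBalancer as a plain dictionary."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",
            # Traffic is sent to pods carrying these labels.
            "selector": selector,
            "ports": [{"protocol": "TCP", "port": port, "targetPort": port}],
        },
    }


if __name__ == "__main__":
    manifest = loadbalancer_service("nginx-deployment", {"app": "nginx"}, 80)
    # Save the output to a file and apply it with `kubectl apply -f <file>`.
    print(json.dumps(manifest, indent=2))
```

Keeping the Service as a manifest rather than a one-off command makes it easier to version and to recreate alongside the deployment.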

    NAME                               DESIRED   CURRENT   READY   AGE
    replicaset.apps/nginx-7c658794b9   1         1         1       6s

    [root@kubemaster ~]# kubectl expose deploy nginx --port 80 --type LoadBalancer
    service/nginx exposed

    [root@kubemaster ~]# kubectl get rs
    NAME                          DESIRED   CURRENT   READY   AGE
    nginx-deployment-74d6bd967d   3         3         3       83m

    [root@kubemaster ~]# kubectl describe rs/nginx-deployment-74d6bd967d
    Name:           nginx-deployment-74d6bd967d
    Namespace:      default
    Selector:       app=nginx,pod-template-hash=74d6bd967d
    Labels:         app=nginx
                    pod-template-hash=74d6bd967d
    Annotations:    deployment.kubernetes.io/desired-replicas: 3
                    deployment.kubernetes.io/max-replicas: 4
                    deployment.kubernetes.io/revision: 1
    Controlled By:  Deployment/nginx-deployment
    Replicas:       3 current / 3 desired
    Pods Status:    3 Running / 0 Waiting / 0 Succeeded / 0 Failed
    Pod Template:
      Labels:  app=nginx
               pod-template-hash=74d6bd967d
      Containers:
       nginx:
        Image:        nginx:1.7.9
        Port:         80/TCP
        Host Port:    0/TCP
        Environment:  <none>
        Mounts:       <none>
      Volumes:        <none>
    Events:           <none>

    [root@kubemaster ~]# kubectl get nodes
    NAME                          STATUS   ROLES                  AGE   VERSION
    kubemaster.ranjeetbadhe.com   Ready    control-plane,master   14h   v1.23.2
    node2.ranjeetbadhe.com        Ready    <none>                 14h   v1.23.2
    node3.ranjeetbadhe.com        Ready    <none>                 14h   v1.23.2
    node4.ranjeetbadhe.com        Ready    <none>                 14h   v1.23.2

    [root@kubemaster ~]# kubectl get pods -n default -o wide --field-selector spec.nodeName=node3.ranjeetbadhe.com
    [root@kubemaster ~]# kubectl get pods -n default -o wide --field-selector spec.nodeName=node4.ranjeetbadhe.com
    [root@kubemaster ~]# kubectl get pods -n default -o wide --field-selector spec.nodeName=node2.ranjeetbadhe.com

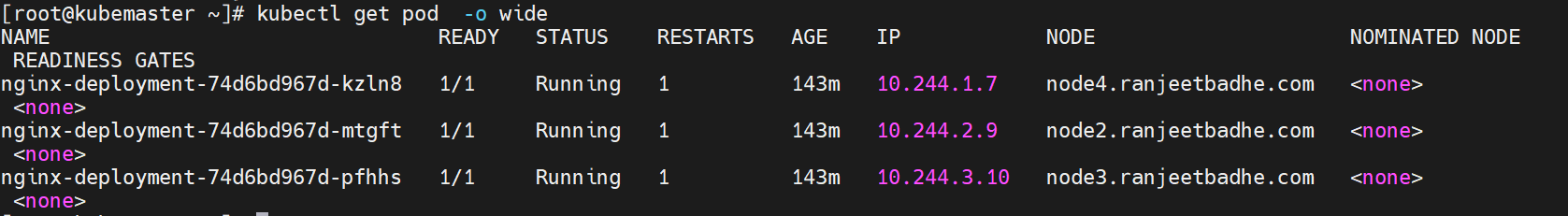

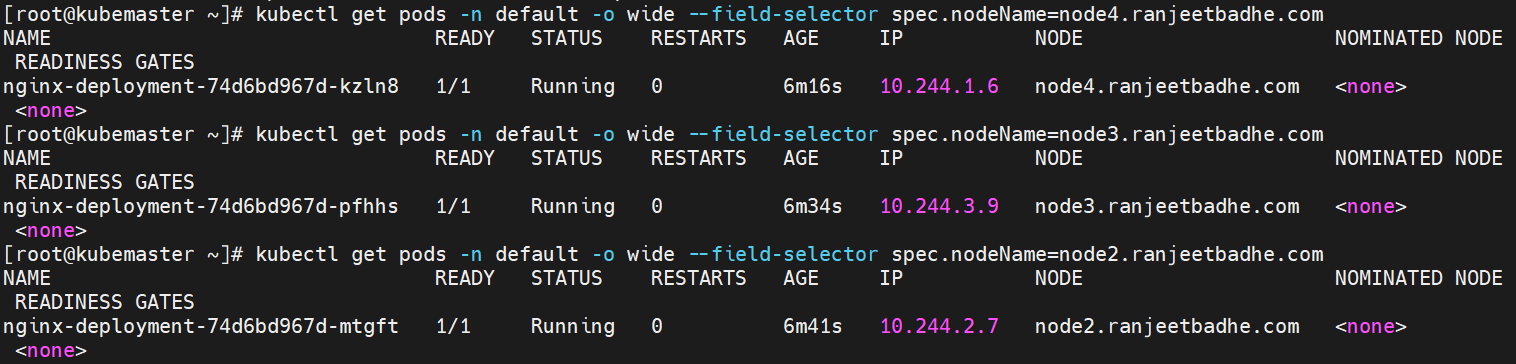

Verify that the pods are spread across the nodes, one pod per node, as required by the anti-affinity rules we set.

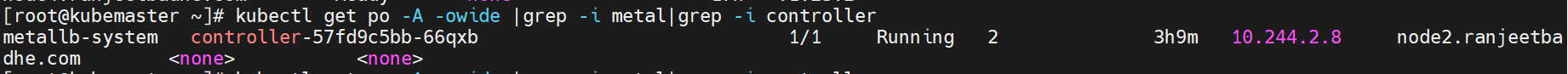

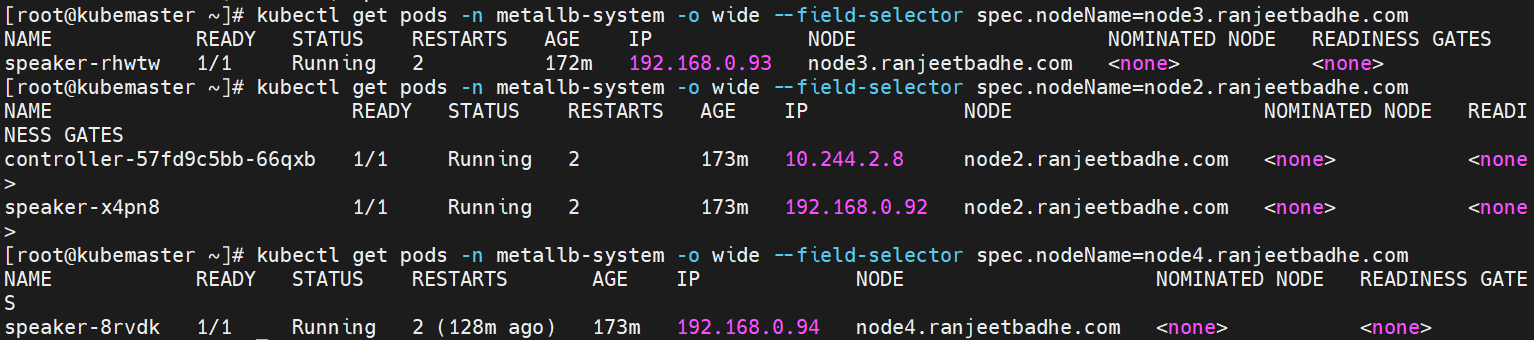

One of the speaker pods is elected as the leader, and the leader uses ARP (GARP, Gratuitous ARP) to advertise the service's external IP. The controller pod is scheduled on one of the nodes; in our case it runs on node2.
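
The idea of electing a single announcer for a service IP can be illustrated with a small deterministic election in Python. This is a simplified model under my own assumptions, and MetalLB's actual mechanism may differ in detail: the function name `elect_announcer` and the hashing scheme are hypothetical, and 192.168.0.120 is only assumed to be the IP assigned to the service.

```python
import hashlib


def elect_announcer(service_ip: str, nodes: list) -> str:
    """Pick one node per service IP by ranking a hash of (node, IP).

    Every speaker can run this locally and reach the same answer,
    as long as all of them see the same list of healthy nodes.
    """
    if not nodes:
        raise ValueError("no healthy speaker nodes")

    def rank(node: str) -> str:
        return hashlib.sha256(f"{node}#{service_ip}".encode()).hexdigest()

    # The node with the smallest hash wins, regardless of list order.
    return min(nodes, key=rank)


if __name__ == "__main__":
    nodes = ["kubemaster", "node2", "node3", "node4"]
    leader = elect_announcer("192.168.0.120", nodes)
    print("announcer:", leader)
    # Simulate losing the announcing node: the survivors agree on a new one.
    survivors = [node for node in nodes if node != leader]
    print("after failure:", elect_announcer("192.168.0.120", survivors))
```

Because the result depends only on the service IP and the set of healthy nodes, losing a node that is not the announcer changes nothing, while losing the announcer makes the remaining speakers converge on a replacement, which then advertises the IP with a gratuitous ARP.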

    [root@kubemaster ~]# kubectl get po -A -o wide |grep -i metal|grep -i controller
    metallb-system   controller-57fd9c5bb-66qxb   1/1   Running   2   3h9m   10.244.2.8   node2.ranjeetbadhe.com   <none>   <none>

    [root@kubemaster ~]# kubectl get po -A -o wide |grep -i metal|grep -i speaker
    metallb-system   speaker-8rvdk   1/1   Running   2 (146m ago)   3h10m   192.168.0.94   node4.ranjeetbadhe.com        <none>   <none>
    metallb-system   speaker-rhwtw   1/1   Running   2              3h10m   192.168.0.93   node3.ranjeetbadhe.com        <none>   <none>
    metallb-system   speaker-wwbvk   1/1   Running   0              3h10m   192.168.0.91   kubemaster.ranjeetbadhe.com   <none>   <none>
    metallb-system   speaker-x4pn8   1/1   Running   2              3h10m   192.168.0.92   node2.ranjeetbadhe.com        <none>   <none>

The controller is running on node2. I shut down this node and observed that the controller pod was rescheduled onto node3 and that the speakers re-elected a leader. This is shown in the command output below.

    [root@kubemaster ~]# ssh root@node2.ranjeetbadhe.com shutdown -h now
    root@node2.ranjeetbadhe.com's password:
    Connection to node2.ranjeetbadhe.com closed by remote host.

    [root@kubemaster ~]# kubectl get po -A -owide |grep -i metal|grep -i controller
    metallb-system   controller-57fd9c5bb-w2mjk   1/1   Running   0   74s   10.244.3.11   node3.ranjeetbadhe.com   <none>   <none>

That's all, folks. Thank you for reading my blog post. If you have any questions or would like to discuss anything, feel free to reach out.